Maedeh Jamali

A Parametric Rate-Distortion Model for Video Transcoding

Apr 13, 2024

Abstract: Over the past two decades, the surge in video streaming applications has been fueled by the increasing accessibility of the internet and the growing demand for network video. As users with varying internet speeds and devices seek high-quality video, transcoding becomes essential for service providers. In this paper, we introduce a parametric rate-distortion (R-D) transcoding model. Our model excels at predicting transcoding distortion at various rates without the need for encoding the video. This model serves as a versatile tool that can be used to achieve visual quality improvement (in terms of PSNR) via trans-sizing. Moreover, we use our model to identify visually lossless and near-zero-slope bitrate ranges for an ingest video. Having this information allows us to adjust the transcoding target bitrate while introducing visually negligible quality degradations. By utilizing our model in this manner, quality improvements up to 2 dB and bitrate savings of up to 46% of the original target bitrate are possible. Experimental results demonstrate the efficacy of our model in video transcoding rate distortion prediction.

Robust Watermarking using Diffusion of Logo into Autoencoder Feature Maps

May 24, 2021

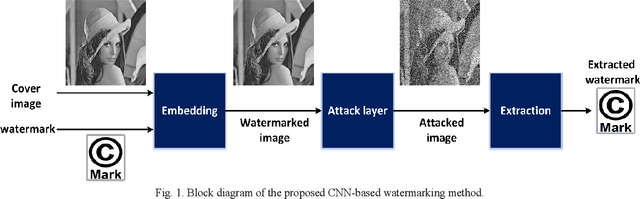

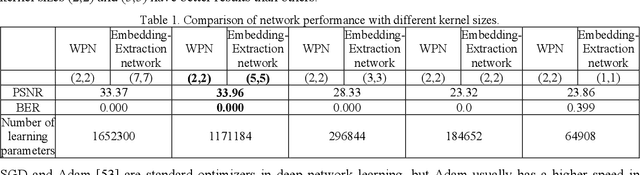

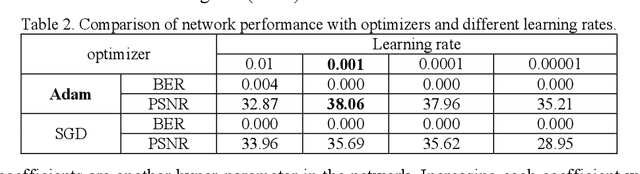

Abstract: Digital content has grown dramatically in recent years, leading to increased attention to copyright. Image watermarking is considered one of the most popular methods for copyright protection. With the recent advances in applying deep neural networks to image processing, these networks have also been used for image watermarking. Robustness and imperceptibility are two competing requirements of watermarking methods, and a trade-off between them must be struck. In this paper, we propose an end-to-end network for watermarking. We use a convolutional neural network (CNN) to control the embedding strength based on the image content. This dynamic embedding helps the network minimize its effect on the visual quality of the watermarked image. Different image processing attacks are simulated as a network layer to improve the robustness of the model. Our method is a blind watermarking approach that replicates the watermark string to create a matrix of the same size as the input image. Instead of diffusing the watermark data into the input image, we inject the data into the feature space and force the network to do this in regions that increase the robustness against various attacks. Experimental results show the superiority of the proposed method in terms of imperceptibility and robustness compared to state-of-the-art algorithms.
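
The replication step mentioned in the abstract (repeating the watermark string until it forms a matrix the size of the input image) could look roughly like the sketch below. The abstract does not say how the copies are laid out, so the row-major tiling and the function name `tile_watermark` are assumptions made for illustration, not the authors' implementation.

```python
def tile_watermark(bits, height, width):
    """Repeat a watermark bit sequence until it fills a height x width matrix.

    Assumption: the copies are laid out in row-major order. The abstract
    only states that the string is replicated to the image size.
    """
    if not bits:
        raise ValueError("watermark must contain at least one bit")
    n = len(bits)
    # Position (r, c) takes the bit at flat index r * width + c, wrapped
    # around the length of the watermark.
    return [
        [bits[(r * width + c) % n] for c in range(width)]
        for r in range(height)
    ]


if __name__ == "__main__":
    for row in tile_watermark([1, 0, 1], height=2, width=4):
        print(row)
```

Each watermark bit ends up at many spatial positions, so a decoder can still recover it when some regions are damaged.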

Weighted Fuzzy-Based PSNR for Watermarking

Jan 21, 2021

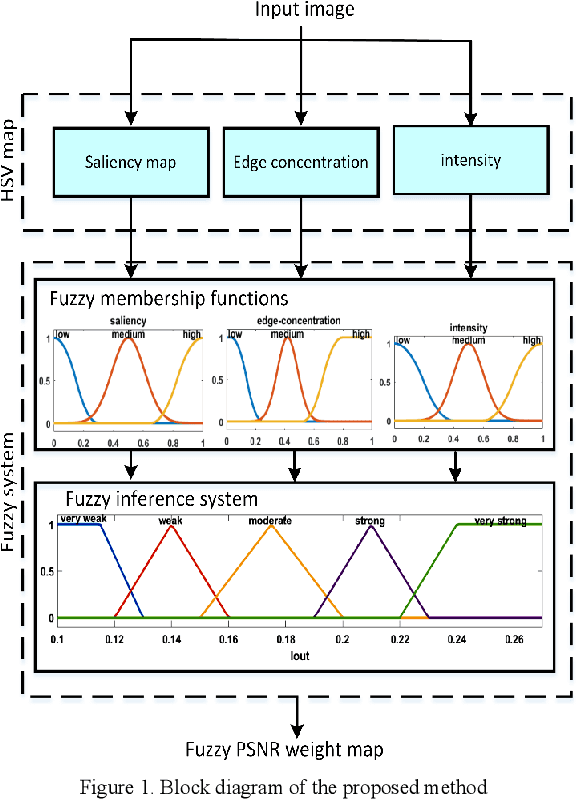

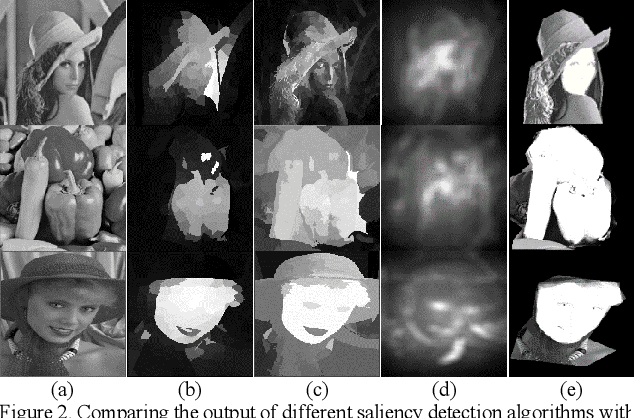

Abstract: One of the problems of conventional visual quality evaluation criteria such as PSNR and MSE is that they are not grounded in the human visual system (HVS). They are calculated from the differences between corresponding pixels of the original and manipulated images. Hence, in practice they do not give a correct picture of the image quality. Watermarking is an image processing application in which the image's visual quality is an essential evaluation criterion. It therefore requires an HVS-based criterion that provides more accurate values than conventional measures such as PSNR. This paper proposes a weighted fuzzy-based criterion that tries to find the essential parts of an image based on the HVS. These parts are then given larger weights in computing the final PSNR value. We compare our results against standard PSNR, and our experiments show considerable differences.
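
As a rough illustration of weighting PSNR by region importance, the sketch below computes PSNR from a weighted mean squared error. The `weights` map is a hypothetical stand-in: in the paper the weights come from a fuzzy, HVS-based analysis that the abstract does not detail, and the normalization by the sum of weights is also an assumption.

```python
import math


def weighted_psnr(original, distorted, weights, peak=255.0):
    """PSNR computed from a weighted mean squared error.

    All three arguments are 2-D lists of the same shape. `weights` is a
    hypothetical per-pixel importance map; uniform weights reduce this to
    ordinary PSNR.
    """
    num = 0.0  # weighted sum of squared pixel differences
    den = 0.0  # sum of weights, used for normalization
    for row_o, row_d, row_w in zip(original, distorted, weights):
        for o, d, w in zip(row_o, row_d, row_w):
            num += w * (o - d) ** 2
            den += w
    if den == 0:
        raise ValueError("weights must not sum to zero")
    wmse = num / den
    if wmse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / wmse)
```

With this form, an error in a heavily weighted pixel lowers the score more than the same error in a lightly weighted one.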

Saliency Based Fire Detection Using Texture and Color Features

Dec 20, 2019

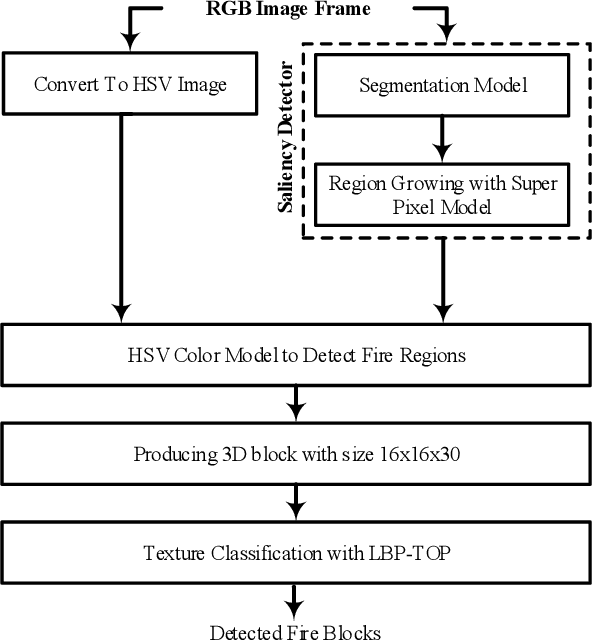

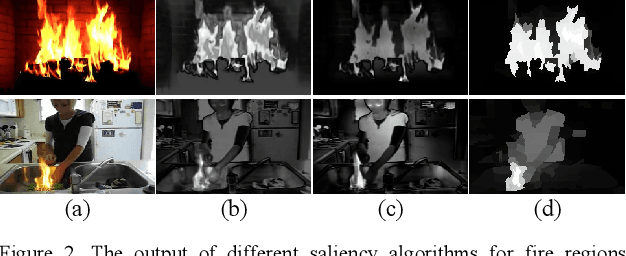

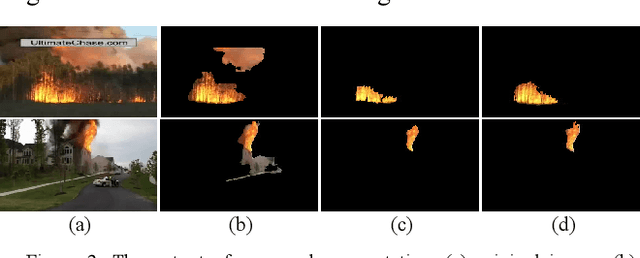

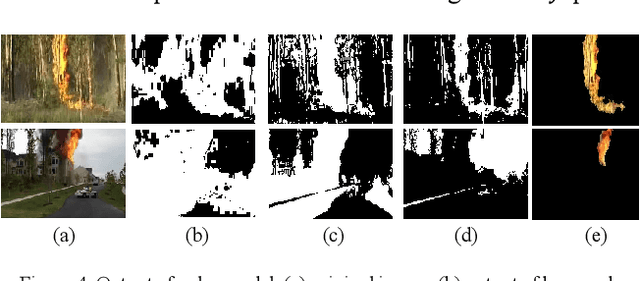

Abstract: Due to industrial development and the expansion of urban areas, early warning systems play an essential role in signaling emergencies. Fire is an event that can spread rapidly and cause injury, death, and damage. Early detection of fire could significantly reduce this harm. Video-based fire detection is a low-cost and fast method in comparison with conventional fire detectors. Most available fire detection methods have a high false-positive rate and low accuracy. In this paper, we increase accuracy by using spatial and temporal features. Captured video sequences are divided into spatio-temporal blocks. Then a saliency map and a combination of color and texture features are used for detecting fire regions. We use the HSV color model as a spatial feature and LBP-TOP for temporal processing of fire texture. Fire detection tests on publicly available datasets have shown the accuracy and robustness of the algorithm.

Robustness and Imperceptibility Enhancement in Watermarked Images by Color Transformation

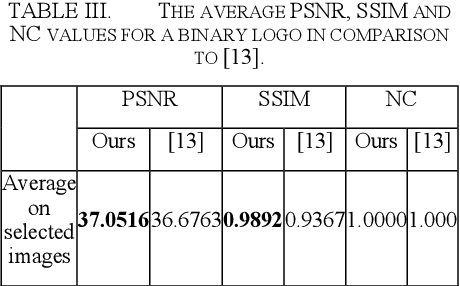

Nov 02, 2019

Abstract: Watermarking is one of the effective methods for preserving copyright ownership of digital media. Different watermarking techniques try to strike a trade-off between robustness and transparency. In this work, we use color space conversion and a frequency transform to achieve high robustness and transparency. Because image information is distributed across the channels of the RGB domain, we use the YUV color space, which concentrates the visual information in the Y channel. The watermark is embedded in the DCT coefficients of specific wavelet subbands. Experimental results show high transparency and robustness of the proposed method.
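
The color space step can be sketched as a per-pixel RGB-to-YUV conversion. The abstract does not state which YUV variant the authors use, so the analog BT.601 coefficients below are an assumption, and `rgb_to_yuv` is an illustrative name.

```python
def rgb_to_yuv(r, g, b):
    """Convert one RGB pixel to YUV.

    Assumption: analog BT.601 weights. The paper may use another variant.
    """
    # Luma: a weighted sum of the three channels.
    y = 0.299 * r + 0.587 * g + 0.114 * b
    # Chroma: scaled differences between blue/red and the luma.
    u = 0.492 * (b - y)
    v = 0.877 * (r - y)
    return y, u, v


if __name__ == "__main__":
    # A neutral gray pixel has (near) zero chroma.
    print(rgb_to_yuv(128, 128, 128))
```

For a neutral gray pixel the chroma components vanish and only Y carries a value, which is the sense in which Y holds the luminance information.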
