Madeleine Waller

Identifying Reasons for Bias: An Argumentation-Based Approach

Oct 26, 2023

Abstract:As algorithmic decision-making systems become more prevalent in society, ensuring the fairness of these systems is becoming increasingly important. Whilst there has been substantial research in building fair algorithmic decision-making systems, the majority of these methods require access to the training data, including personal characteristics, and are not transparent regarding which individuals are classified unfairly. In this paper, we propose a novel model-agnostic argumentation-based method to determine why an individual is classified differently in comparison to similar individuals. Our method uses a quantitative argumentation framework to represent attribute-value pairs of an individual and of those similar to them, and uses a well-known semantics to identify the attribute-value pairs in the individual contributing most to their different classification. We evaluate our method on two datasets commonly used in the fairness literature and illustrate its effectiveness in the identification of bias.

Bias Mitigation Methods for Binary Classification Decision-Making Systems: Survey and Recommendations

May 31, 2023

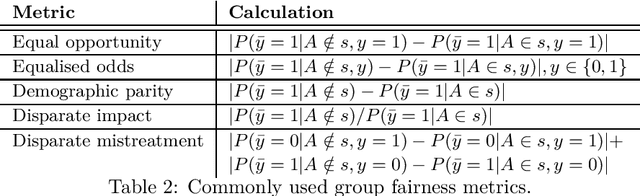

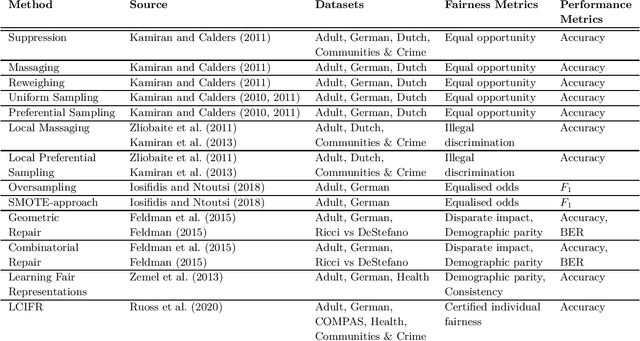

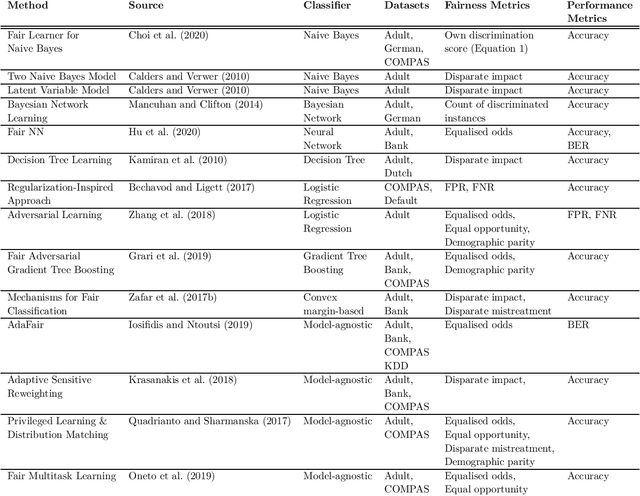

Abstract:Bias mitigation methods for binary classification decision-making systems have been widely researched due to the ever-growing importance of designing fair machine learning processes that are impartial and do not discriminate against individuals or groups based on protected personal characteristics. In this paper, we present a structured overview of the research landscape for bias mitigation methods, report on their benefits and limitations, and provide recommendations for the development of future bias mitigation methods for binary classification.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge