Ma Bin

Multi-Modal Hybrid Deep Neural Network for Speech Enhancement

Jun 15, 2016

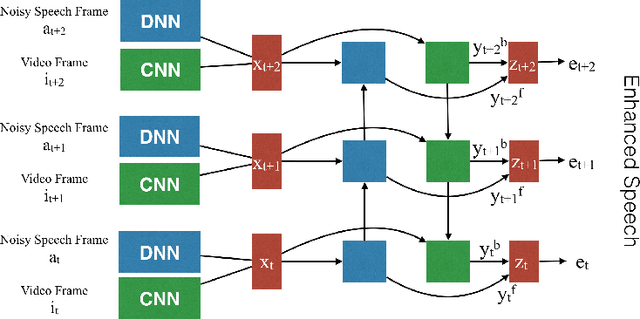

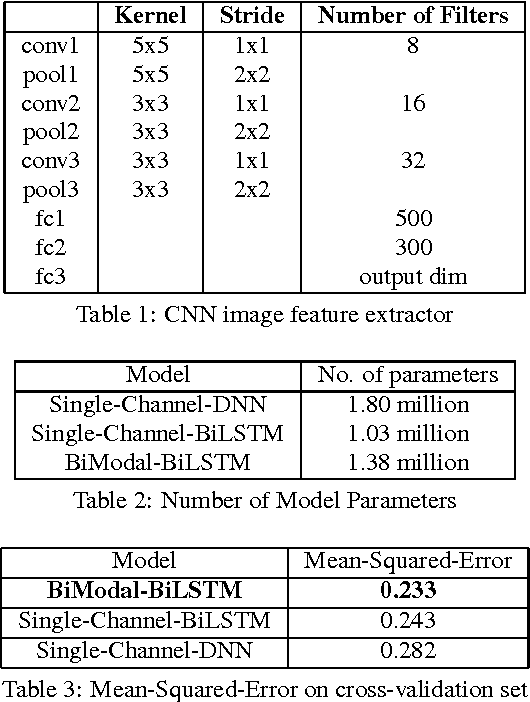

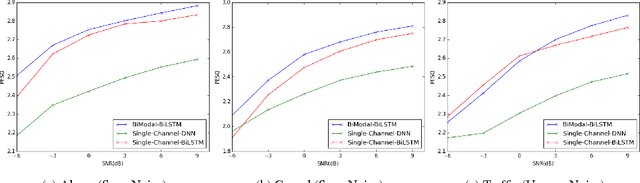

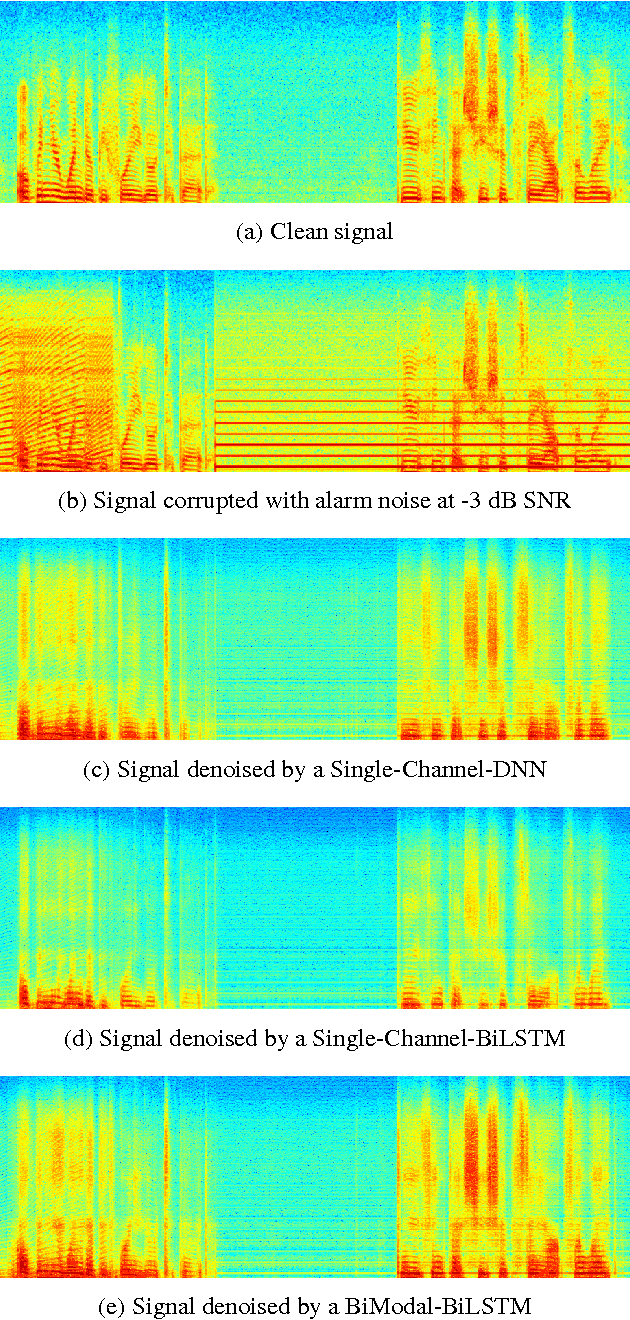

Abstract: Deep Neural Networks (DNNs) have been successful in enhancing noisy speech signals. Enhancement is achieved by learning a nonlinear mapping function from the features of the corrupted speech signal to those of the reference clean speech signal. The quality of the predicted features can be improved by providing additional side-channel information that is robust to noise, such as visual cues. In this paper we propose a novel deep learning model inspired by insights from human audio-visual perception. In the proposed unified hybrid architecture, features from a Convolutional Neural Network (CNN) that processes the visual cues and features from a fully connected DNN that processes the audio signal are integrated using a Bidirectional Long Short-Term Memory (BiLSTM) network. The parameters of the hybrid model are jointly learned using backpropagation. We compare the quality of enhanced speech from the hybrid models with those from traditional DNN and BiLSTM models.
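To make the data flow of the hybrid architecture concrete, the following is a minimal NumPy sketch of a forward pass only, not the authors' implementation. The abstract does not specify layer sizes, the CNN design, or the fusion operator, so everything below is an assumption chosen for illustration: a single ReLU dense layer stands in for the audio DNN, one convolution layer with global max pooling stands in for the visual CNN, the two feature streams are fused by per-frame concatenation, and the weights are random and untrained. Joint training by backpropagation is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)

def init(shape, scale=0.1):
    """Small random weights; a trained model would learn these instead."""
    return rng.normal(0.0, scale, size=shape)

def dense(x, w, b):
    """Audio branch: fully connected layer with ReLU. x: (T, in_dim)."""
    return np.maximum(x @ w + b, 0.0)

def conv2d_valid(img, kernel):
    """Single-channel 'valid' 2-D cross-correlation, as computed by CNN layers."""
    kh, kw = kernel.shape
    out = np.empty((img.shape[0] - kh + 1, img.shape[1] - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(img[i:i + kh, j:j + kw] * kernel)
    return out

def visual_features(frames, kernels):
    """Visual branch: conv + ReLU + global max pool. frames: (T, H, W) -> (T, K)."""
    return np.array([[np.maximum(conv2d_valid(f, k), 0.0).max() for k in kernels]
                     for f in frames])

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_pass(x, W, U, b, reverse=False):
    """One LSTM direction over x (T, D); returns hidden states (T, H)."""
    T, H = x.shape[0], U.shape[0]
    h, c = np.zeros(H), np.zeros(H)
    out = np.zeros((T, H))
    steps = range(T - 1, -1, -1) if reverse else range(T)
    for t in steps:
        i, f, o, g = np.split(x[t] @ W + h @ U + b, 4)  # gate pre-activations
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h = sigmoid(o) * np.tanh(c)
        out[t] = h
    return out

def make_params(audio_dim, n_kernels, dnn_dim, hidden, out_dim, ksize=3):
    """All sizes here are hypothetical; the abstract does not give them."""
    fused = dnn_dim + n_kernels
    lstm = lambda: (init((fused, 4 * hidden)), init((hidden, 4 * hidden)),
                    np.zeros(4 * hidden))
    return {
        "dnn_w": init((audio_dim, dnn_dim)), "dnn_b": np.zeros(dnn_dim),
        "kernels": init((n_kernels, ksize, ksize)),
        "fw": lstm(), "bw": lstm(),
        "out_w": init((2 * hidden, out_dim)), "out_b": np.zeros(out_dim),
    }

def enhance(noisy_audio, frames, p):
    """Map noisy audio features (T, audio_dim) plus video frames to enhanced features."""
    a = dense(noisy_audio, p["dnn_w"], p["dnn_b"])   # audio DNN features
    v = visual_features(frames, p["kernels"])        # visual CNN features
    fused = np.concatenate([a, v], axis=1)           # assumed fusion: concatenation
    h_fw = lstm_pass(fused, *p["fw"])                # past context
    h_bw = lstm_pass(fused, *p["bw"], reverse=True)  # future context
    h = np.concatenate([h_fw, h_bw], axis=1)
    return h @ p["out_w"] + p["out_b"]               # linear regression output

T = 5  # number of time-aligned audio/video frames
params = make_params(audio_dim=8, n_kernels=4, dnn_dim=16, hidden=6, out_dim=8)
noisy = rng.normal(size=(T, 8))
frames = rng.normal(size=(T, 10, 10))
enhanced = enhance(noisy, frames, params)
print(enhanced.shape)  # (5, 8)
```

Because the BiLSTM reads the fused sequence in both directions, each enhanced frame can draw on audio and visual context from both earlier and later frames, which a frame-wise DNN cannot do.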
