Míriam Antón-Rodríguez

A Deep Learning Approach for Brain Tumor Classification and Segmentation Using a Multiscale Convolutional Neural Network

Feb 04, 2024

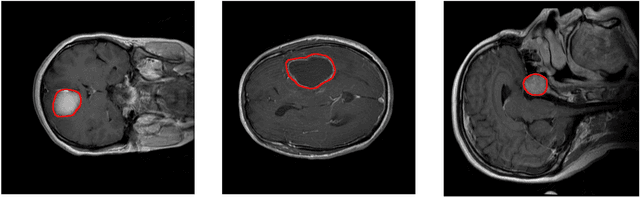

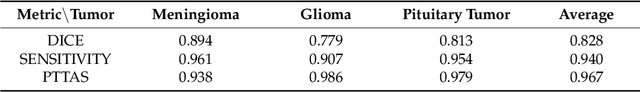

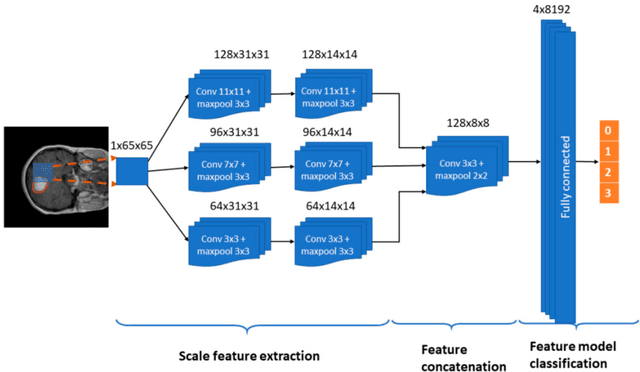

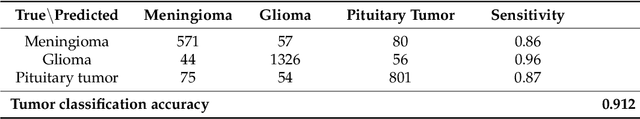

Abstract: In this paper, we present a fully automatic brain tumor segmentation and classification model using a Deep Convolutional Neural Network that includes a multiscale approach. One difference between our proposal and previous works is that input images are processed at three spatial scales along different processing pathways. This mechanism is inspired by the inherent operation of the Human Visual System. The proposed neural model can analyze MRI images containing three types of tumors (meningioma, glioma, and pituitary tumor) over sagittal, coronal, and axial views, and does not need preprocessing of input images to remove skull or vertebral column parts in advance. The performance of our method on a publicly available MRI image dataset of 3064 slices from 233 patients is compared with previously published classical machine learning and deep learning methods. In the comparison, our method obtained a tumor classification accuracy of 0.973, higher than the other approaches using the same database.

* 14 pages
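The idea of processing one image at three spatial scales along separate pathways, as described in the abstract above, can be illustrated with a minimal pure-Python sketch. This is not the authors' network: the mean filter stands in for learned convolutions, and the names `mean_filter` and `multiscale_features` and the window sizes 3, 7, and 11 are assumptions chosen for illustration.

```python
from typing import List, Sequence

Image = List[List[float]]


def mean_filter(image: Image, window: int) -> Image:
    """Average each pixel with its neighbours in a window x window box.

    The box is clipped at the image border, so edge pixels average
    over fewer values. A stand-in for one learned convolution pathway.
    """
    height, width = len(image), len(image[0])
    half = window // 2
    out: Image = []
    for r in range(height):
        row = []
        for c in range(width):
            r0, r1 = max(0, r - half), min(height, r + half + 1)
            c0, c1 = max(0, c - half), min(width, c + half + 1)
            values = [image[i][j] for i in range(r0, r1) for j in range(c0, c1)]
            row.append(sum(values) / len(values))
        out.append(row)
    return out


def multiscale_features(
    image: Image, windows: Sequence[int] = (3, 7, 11)  # assumed scales
) -> List[List[List[float]]]:
    """Run one pathway per window size and stack the per-pixel outputs.

    Returns a height x width grid where each pixel holds one value
    per scale, i.e. a small multiscale feature vector.
    """
    pathways = [mean_filter(image, w) for w in windows]
    height, width = len(image), len(image[0])
    return [
        [[p[r][c] for p in pathways] for c in range(width)]
        for r in range(height)
    ]
```

Each pixel ends up with one response per scale: small windows keep local detail while large windows summarize broader context, which is the intuition behind combining several pathways before classification.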

Comparative Analysis of Kinect-Based and Oculus-Based Gaze Region Estimation Methods in a Driving Simulator

Feb 04, 2024

Abstract: Driver's gaze information can be crucial in driving research because of its relation to driver attention. In particular, the inclusion of gaze data in driving simulators broadens the scope of research studies, as they can relate drivers' gaze patterns to their features and performance. In this paper, we present two gaze region estimation modules integrated in a driving simulator. One uses the 3D Kinect device and the other uses the virtual reality Oculus Rift device. For every processed frame of the route, the modules detect which of the seven regions into which the driving scene was divided the driver is gazing at. Four methods were implemented and compared for gaze estimation, all of which learn the relation between gaze displacement and head movement. Two are simpler and based on points that try to capture this relation, and two are based on classifiers (MLP and SVM). Experiments were carried out with 12 users who drove the same scenario twice, each time with a different visualization display, first with a big screen and later with Oculus Rift. On the whole, Oculus Rift outperformed Kinect as the best hardware for gaze estimation. The Oculus-based gaze region estimation method with the highest performance achieved an accuracy of 97.94%. The information provided by the Oculus Rift module enriches the driving simulator data and makes a multimodal driving performance analysis possible, in addition to the immersion and realism obtained with the virtual reality experience provided by Oculus.

* 25 pages
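As a rough illustration of mapping head movement to one of several gaze regions, the sketch below uses a nearest-centroid classifier in pure Python. This is a swapped-in technique, not one of the four methods from the paper; the feature choice (head yaw and pitch in degrees), the region names, and the functions `fit_centroids`, `predict_region`, and `accuracy` are all hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Pose = Tuple[float, float]      # assumed features: (yaw, pitch) in degrees
Sample = Tuple[Pose, str]       # (head pose, labelled gaze region)


def fit_centroids(samples: List[Sample]) -> Dict[str, Pose]:
    """Compute the mean head pose observed for each gaze region."""
    sums = defaultdict(lambda: [0.0, 0.0, 0])
    for (yaw, pitch), region in samples:
        acc = sums[region]
        acc[0] += yaw
        acc[1] += pitch
        acc[2] += 1
    return {region: (s[0] / s[2], s[1] / s[2]) for region, s in sums.items()}


def predict_region(centroids: Dict[str, Pose], head_pose: Pose) -> str:
    """Return the region whose centroid is closest to the head pose."""
    return min(centroids, key=lambda region: math.dist(centroids[region], head_pose))


def accuracy(centroids: Dict[str, Pose], samples: List[Sample]) -> float:
    """Fraction of samples whose predicted region matches the label."""
    hits = sum(predict_region(centroids, pose) == region for pose, region in samples)
    return hits / len(samples)


# Hypothetical calibration data: three of the scene regions only.
training = [
    ((-30.0, 0.0), "left_mirror"),
    ((-28.0, 2.0), "left_mirror"),
    ((0.0, 0.0), "road"),
    ((2.0, -2.0), "road"),
    ((30.0, 0.0), "right_mirror"),
]
centroids = fit_centroids(training)
```

Calling `predict_region(centroids, (-25.0, 1.0))` on a new frame returns the label of the nearest calibrated region, and `accuracy` gives the per-frame hit rate, the same kind of figure the abstract reports.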

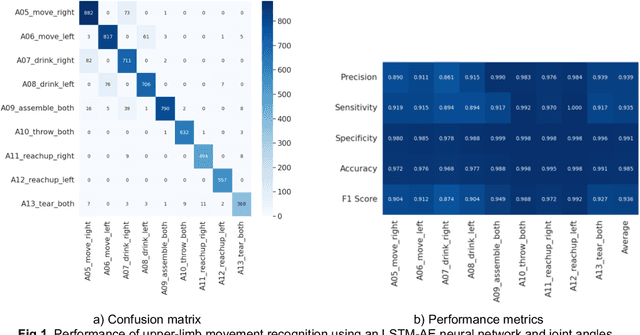

A comparative study on wearables and single-camera video for upper-limb out-of-the-lab activity recognition with different deep learning architectures

Feb 04, 2024

Abstract: The use of a wide range of computer vision solutions, and more recently of high-end Inertial Measurement Units (IMU), has become increasingly popular for assessing human physical activity in clinical and research settings. Nevertheless, to increase the feasibility of patient tracking in out-of-the-lab settings, it is necessary to use a reduced number of devices for movement acquisition. Promising solutions in this context are IMU-based wearables and single-camera systems. Additionally, machine learning systems able to recognize and digest clinically relevant data in the wild need to be developed, and determining the ideal input to those systems is therefore crucial.

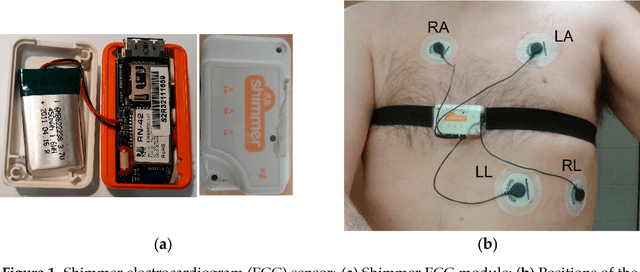

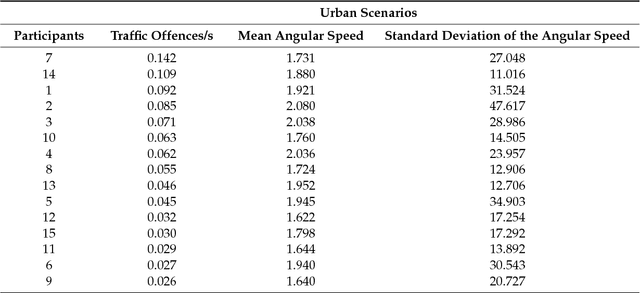

A Physiological Sensor-Based Android Application Synchronized with a Driving Simulator for Driver Monitoring

Feb 04, 2024

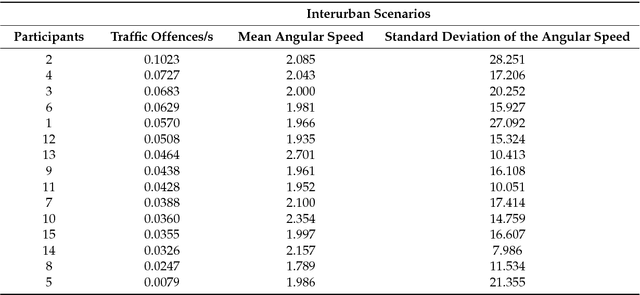

Abstract: In this paper, we present an Android application to control and monitor the physiological sensors from the Shimmer platform, and its synchronized operation with a driving simulator. The Android app can monitor drivers, and their parameters can be used to analyze the relation between their physiological states and driving performance. The app can configure, select, receive, process, represent graphically, and store the signals from electrocardiogram (ECG), electromyogram (EMG), and galvanic skin response (GSR) modules, as well as from accelerometers, a magnetometer, and a gyroscope. The Android app is synchronized in two steps with a driving simulator that we previously developed using the Unity game engine to analyze driving security and efficiency. The Android app was tested with different sensors working simultaneously at various sampling rates and on different Android devices. We also tested the synchronized operation of the driving simulator and the Android app with 25 people and analyzed the relation between data from the ECG, EMG, GSR, and gyroscope sensors and data from the simulator. Among other results, significant correlations were found between a gyroscope-based feature calculated by the Android app and both vehicle data and particular traffic offences. With minor adaptations, the Android app can be applied to other users, such as patients with chronic diseases or athletes.

* 28 pages

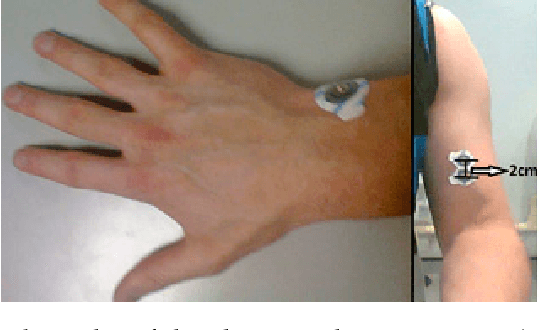

VIDIMU. Multimodal video and IMU kinematic dataset on daily life activities using affordable devices

Mar 27, 2023

Abstract: Human activity recognition and clinical biomechanics are challenging problems in physical telerehabilitation medicine. However, most publicly available datasets on human body movements cannot be used to study both problems in an out-of-the-lab movement acquisition setting. The objective of the VIDIMU dataset is to pave the way towards affordable patient tracking solutions for remote recognition and kinematic analysis of daily life activities. The dataset includes 13 activities registered using a commodity camera and five inertial sensors. The video recordings were acquired from 54 subjects, of which 16 also had simultaneous recordings of inertial sensors. The novelty of VIDIMU lies in: i) the clinical relevance of the chosen movements, ii) the combined utilization of affordable video and custom sensors, and iii) the implementation of state-of-the-art tools for multimodal data processing of 3D body pose tracking and motion reconstruction in a musculoskeletal model from inertial data. The validation confirms that a minimally disturbing acquisition protocol, performed according to real-life conditions, can provide a comprehensive picture of human joint angles during daily life activities.
