Luzie Helfmann

On Hyperparameter Search in Cluster Ensembles

Mar 29, 2018

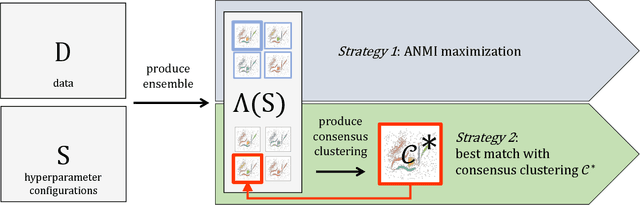

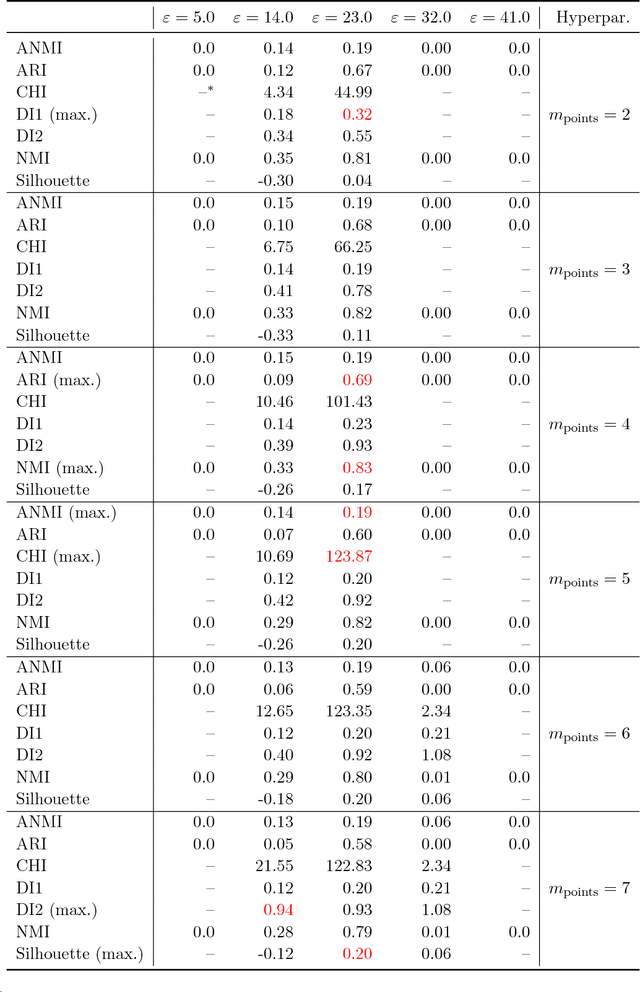

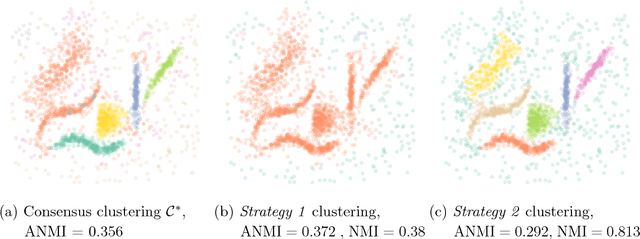

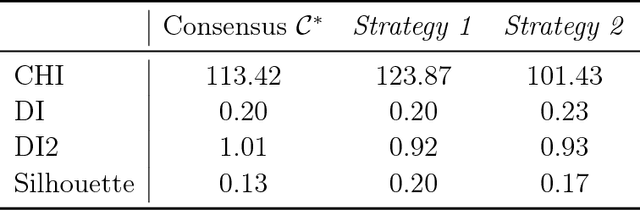

Abstract: Assessing model quality in unsupervised learning, and verifying clusterings in particular, has been a long-standing problem in machine learning research. The lack of robust and universally applicable cluster validity scores often reduces algorithm selection and hyperparameter evaluation to guesswork. In this paper, we show that cluster ensemble aggregation techniques such as consensus clustering can be used to evaluate clusterings and their hyperparameter configurations. We use normalized mutual information to compare each individual member of a clustering ensemble to the consensus constructed from the whole ensemble, and show that the resulting score can serve as an overall quality measure for clustering problems. This method is capable of highlighting the standout clustering and hyperparameter configuration in the ensemble even when the consensus is distorted. We apply this very general framework to various data sets and give possible directions for future research.
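The scoring idea described in the abstract can be illustrated with a minimal sketch. It is not the paper's implementation: the consensus function here (a co-association matrix thresholded at 0.5, followed by connected components) and the geometric-mean normalization of the mutual information are assumptions chosen for brevity, and the names `nmi`, `consensus`, and `score_ensemble` are hypothetical.

```python
from collections import Counter
from math import log, sqrt


def nmi(a, b):
    """Normalized mutual information of two labelings.

    Assumption: MI is normalized by sqrt(H(a) * H(b)); the paper may use
    a different normalization.
    """
    n = len(a)
    pa, pb, pab = Counter(a), Counter(b), Counter(zip(a, b))
    mi = sum(c / n * log(c * n / (pa[x] * pb[y])) for (x, y), c in pab.items())
    ha = -sum(c / n * log(c / n) for c in pa.values())
    hb = -sum(c / n * log(c / n) for c in pb.values())
    if ha == 0 or hb == 0:
        # Degenerate case: at least one labeling has a single cluster.
        return 1.0 if ha == hb else 0.0
    return mi / sqrt(ha * hb)


def consensus(ensemble, threshold=0.5):
    """Toy consensus: link two points if more than `threshold` of the
    ensemble members put them in the same cluster, then take the
    connected components of that graph as consensus clusters."""
    n, m = len(ensemble[0]), len(ensemble)
    labels = [-1] * n
    current = 0
    for start in range(n):
        if labels[start] != -1:
            continue
        labels[start] = current
        stack = [start]
        while stack:
            i = stack.pop()
            for j in range(n):
                if labels[j] == -1:
                    together = sum(c[i] == c[j] for c in ensemble) / m
                    if together > threshold:
                        labels[j] = current
                        stack.append(j)
        current += 1
    return labels


def score_ensemble(ensemble):
    """Return the consensus labeling and each member's NMI against it."""
    cons = consensus(ensemble)
    return cons, [nmi(c, cons) for c in ensemble]


# Three clusterings of six points, e.g. from three hyperparameter settings.
ensemble = [
    [0, 0, 0, 1, 1, 1],
    [1, 1, 1, 0, 0, 0],  # same partition as the first, relabeled
    [0, 0, 1, 1, 2, 2],  # a different, finer partition
]
cons, scores = score_ensemble(ensemble)
best = max(range(len(scores)), key=scores.__getitem__)
```

In this toy ensemble the first two members agree with the consensus and score highest, while the third scores lower. In practice each member would come from a clustering algorithm run under one hyperparameter configuration, and the member with the highest score would be the candidate configuration.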
