Lulua Rakla

Pedestrian Behavior Maps for Safety Advisories: CHAMP Framework and Real-World Data Analysis

May 08, 2023

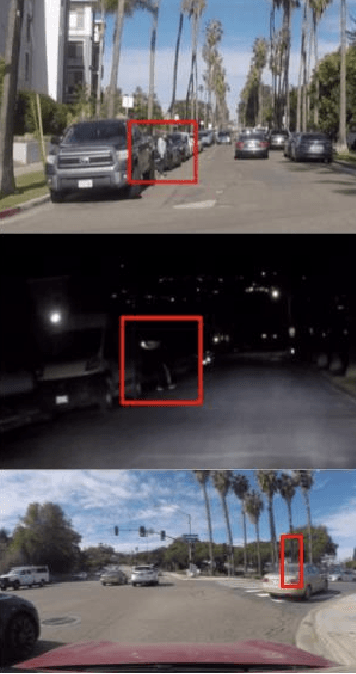

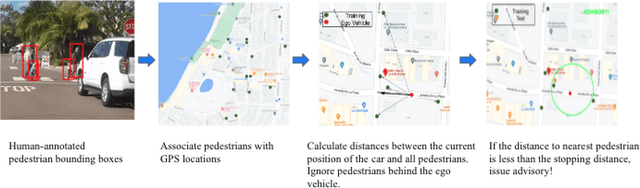

Abstract: It is critical for vehicles to prevent collisions with pedestrians. Current methods for pedestrian collision prevention focus on integrating visual pedestrian detectors with Automatic Emergency Braking (AEB) systems, which can trigger warnings and apply brakes as a pedestrian enters a vehicle's path. Unfortunately, pedestrian-detection-based systems can be hindered in certain situations, such as at night or when pedestrians are occluded. Our system addresses such issues using an online, map-based pedestrian detection aggregation system in which common pedestrian locations are learned over repeated passes of the same locations. Using a carefully collected and annotated dataset from La Jolla, CA, we demonstrate the system's ability to learn pedestrian zones and generate advisory notices when a vehicle is approaching a pedestrian, despite challenges like dark lighting or pedestrian occlusion. We define precision and recall performance metrics from the number of correct, false, and missed advisories, use them to evaluate our system, and discuss the improvements expected from further data collection. Our code is available at https://github.com/s7desai/ped-mapping, and a video demonstration of the CHAMP system is available at https://youtu.be/dxeCrS_Gpkw.
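
The map-based aggregation idea described above can be illustrated with a minimal sketch. It assumes detections are already projected into planar map coordinates and binned into a square grid. The function names, the 10-unit cell size, and the `min_count` threshold are all hypothetical and are not taken from the CHAMP code.

```python
from collections import Counter

def cell_of(x, y, cell_size=10.0):
    """Map a planar position to a grid cell index (hypothetical binning)."""
    return (int(x // cell_size), int(y // cell_size))

def learn_zones(detections, min_count=3, cell_size=10.0):
    """Return cells where pedestrians were detected at least min_count times.

    `detections` is an iterable of (x, y) pedestrian positions gathered
    over repeated passes; the threshold is an assumed tuning parameter.
    """
    counts = Counter(cell_of(x, y, cell_size) for x, y in detections)
    return {cell for cell, n in counts.items() if n >= min_count}

def should_advise(vehicle_xy, zones, cell_size=10.0):
    """Issue an advisory when the vehicle is inside a learned zone."""
    return cell_of(vehicle_xy[0], vehicle_xy[1], cell_size) in zones

# Illustrative data only: three detections fall in one cell, one elsewhere.
detections = [(12, 3), (14, 7), (18, 1), (55, 55)]
zones = learn_zones(detections)
```

Because the advisory depends only on the stored map and the vehicle position, it can still fire when a live detector would fail, for example in the dark or when the pedestrian is occluded.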

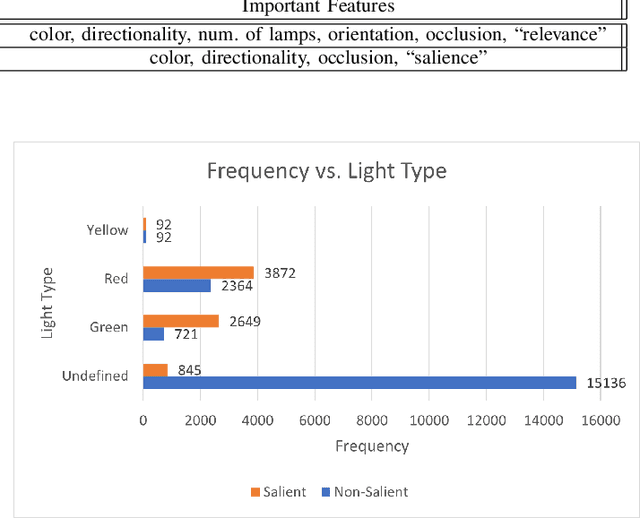

Robust Traffic Light Detection Using Salience-Sensitive Loss: Computational Framework and Evaluations

May 08, 2023

Abstract: One of the most important tasks for ensuring safe autonomous driving is accurately detecting traffic lights and determining how they affect the driver's actions. In real-world driving, a scene may contain numerous traffic lights with varying levels of relevance to the driver, so distinguishing and detecting the lights that are relevant to the driver and influence the driver's actions is a critical safety task. This paper proposes a traffic light detection model that focuses on this task by first defining salient lights as the lights that affect the driver's future decisions. We then use this salience property to construct the LAVA Salient Lights Dataset, the first US traffic light dataset with an annotated salience property. Subsequently, we train a Deformable DETR object detection transformer model using Salience-Sensitive Focal Loss to emphasize performance on salient traffic lights, showing that a model trained with this loss function has stronger recall than one trained without it.
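
The abstract does not give the exact form of Salience-Sensitive Focal Loss. As a rough sketch of the general idea, the snippet below takes the standard binary focal loss and scales it by a larger weight for salient objects. The `salience_weight` parameter and the multiplicative weighting are assumptions made for illustration, not the paper's formulation.

```python
import math

def focal_loss(p, y, gamma=2.0):
    """Standard binary focal loss for predicted probability p and label y."""
    p_t = p if y == 1 else 1.0 - p
    p_t = min(max(p_t, 1e-7), 1.0)  # clamp to avoid log(0)
    return -((1.0 - p_t) ** gamma) * math.log(p_t)

def salience_weighted_focal_loss(p, y, salient, gamma=2.0, salience_weight=2.0):
    """Focal loss scaled up for salient objects (assumed weighting scheme)."""
    weight = salience_weight if salient else 1.0
    return weight * focal_loss(p, y, gamma)
```

Under this assumed weighting, a missed salient light contributes more to the training loss than an equally missed non-salient light, which pushes the model toward higher recall on the lights that matter to the driver.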

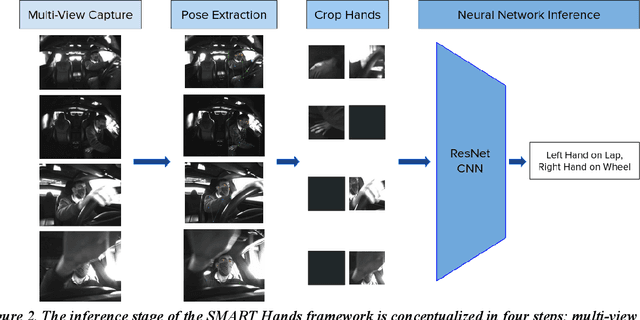

Multi-View Ensemble Learning With Missing Data: Computational Framework and Evaluations using Novel Data from the Safe Autonomous Driving Domain

Jan 30, 2023

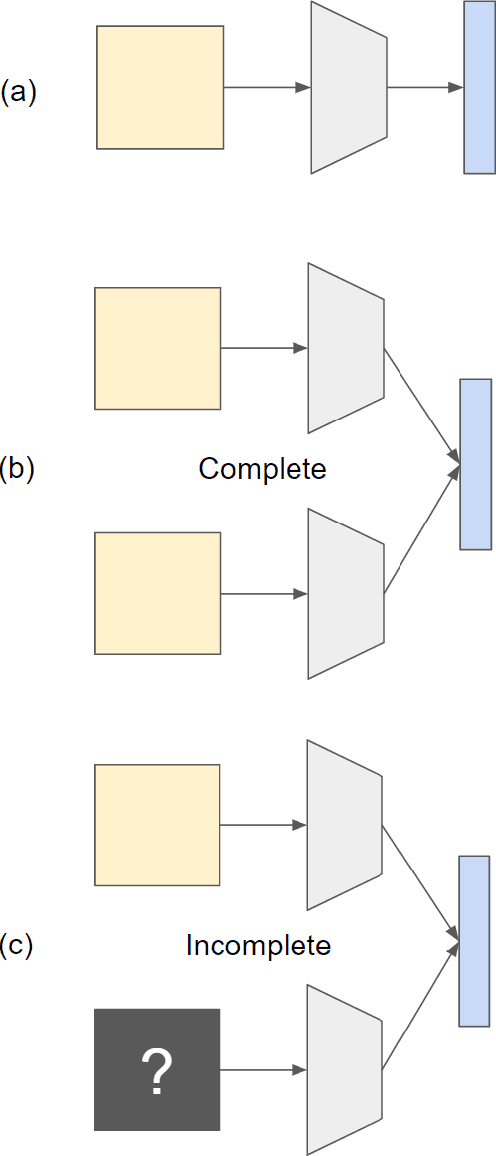

Abstract: Real-world applications in which multiple sensors observe an event are expected to make continuously available predictions, even when information is intermittently missing. We explore methods in ensemble learning and sensor fusion that exploit the redundancy and shared information among four camera views, applied to the task of hand activity classification for autonomous driving. In particular, we show that a late-fusion approach between parallel convolutional neural networks can outperform even the best-placed single-camera model. To enable this approach, we propose a scheme for handling missing information, and then provide a comparative analysis of this late-fusion approach against additional methods such as weighted majority voting and model combination schemes.
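
One simple way to picture late fusion with missing views is to average the per-view class probabilities over whichever views are available. The sketch below does exactly that. It is an illustrative baseline under that assumption, not the missing-data scheme proposed in the paper, and the function name and input format are hypothetical.

```python
def late_fusion(view_probs):
    """Average class probabilities over available views.

    `view_probs` holds one probability vector per camera view, with None
    marking a view whose prediction is missing. Returns the predicted
    class index and the fused probability vector.
    """
    available = [p for p in view_probs if p is not None]
    if not available:
        raise ValueError("at least one view must be available")
    n_classes = len(available[0])
    fused = [sum(p[i] for p in available) / len(available)
             for i in range(n_classes)]
    return fused.index(max(fused)), fused

# Illustrative values only: four views, the second one is missing.
label, fused = late_fusion([[0.6, 0.4], None, [0.2, 0.8], [0.3, 0.7]])
```

Averaging over only the available views keeps a prediction flowing whenever at least one camera reports, which is the continuous-availability property the abstract calls for.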

CHAMP: Crowdsourced, History-Based Advisory of Mapped Pedestrians for Safer Driver Assistance Systems

Jan 18, 2023

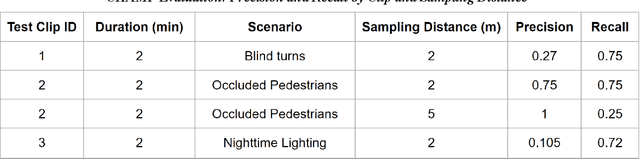

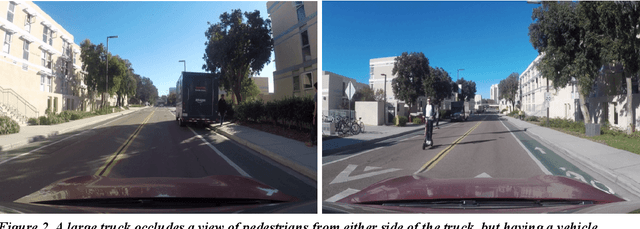

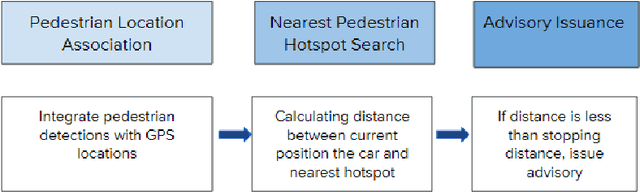

Abstract: Vehicles constantly approach and share the road with pedestrians, so it is critical for vehicles to prevent collisions with them. Current methods for pedestrian collision prevention focus on integrating visual pedestrian detectors with Automatic Emergency Braking (AEB) systems, which can trigger warnings and apply brakes as a pedestrian enters a vehicle's path. Unfortunately, pedestrian-detection-based systems can be hindered in certain situations, such as at night or when pedestrians are occluded. Our system, CHAMP (Crowdsourced, History-based Advisories of Mapped Pedestrians), addresses such issues using an online, map-based pedestrian detection system in which pedestrian locations are aggregated into a dataset over repeated passes of the same locations. Using this dataset, we are able to learn pedestrian zones and generate advisory notices when a vehicle is approaching a pedestrian, despite challenges like dark lighting or pedestrian occlusion. We collected and carefully annotated pedestrian data in La Jolla, CA to construct training and test sets of pedestrian locations. To evaluate CHAMP, we define precision and recall performance metrics from the number of correct, false, and missed advisories. The approach can be tuned to achieve a maximum of 100% precision and 75% recall on the experimental dataset, and further data collection offers options for improving performance.
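
The precision and recall metrics mentioned above follow the usual definitions, with correct advisories as true positives, false advisories as false positives, and missed advisories as false negatives. A minimal sketch follows. The function name is hypothetical, and the counts in the example are illustrative values, not the paper's reported counts.

```python
def advisory_metrics(correct, false, missed):
    """Compute (precision, recall) from advisory counts.

    precision = correct / (correct + false)
    recall    = correct / (correct + missed)
    A zero denominator yields 0.0 for that metric.
    """
    issued = correct + false
    needed = correct + missed
    precision = correct / issued if issued else 0.0
    recall = correct / needed if needed else 0.0
    return precision, recall

# Illustrative counts only: 3 correct, 0 false, 1 missed advisory.
precision, recall = advisory_metrics(3, 0, 1)
```

Tuning the system to issue fewer advisories tends to raise precision at the cost of recall, which is why the two metrics are reported together.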

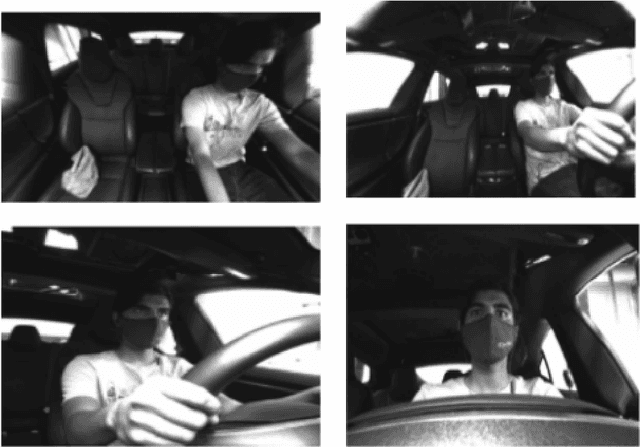

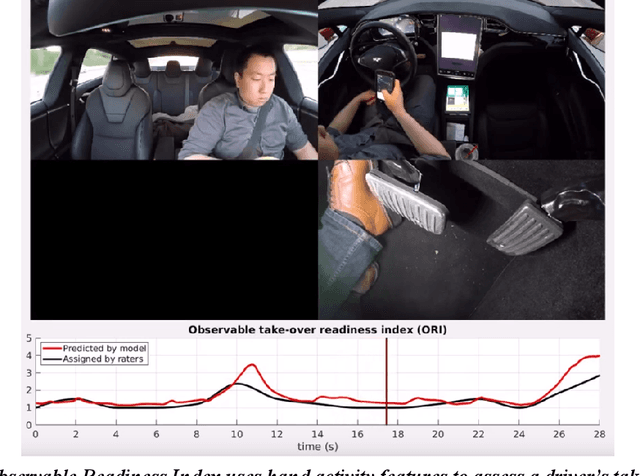

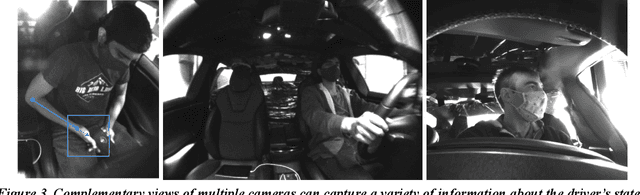

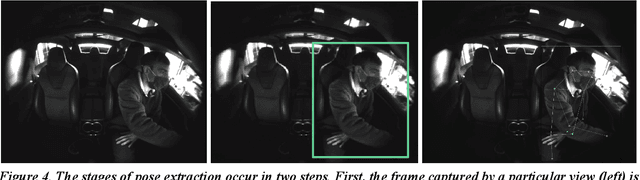

(Safe) SMART Hands: Hand Activity Analysis and Distraction Alerts Using a Multi-Camera Framework

Jan 18, 2023

Abstract: Manual (hand-related) activity is a significant source of crash risk while driving. Accordingly, analysis of hand position and hand activity occupation is a useful component of understanding a driver's readiness to take control of a vehicle. Visual sensing through cameras provides a passive means of observing the hands, but its effectiveness varies with camera location. We introduce an algorithmic framework, SMART Hands, for accurate hand classification with an ensemble of camera views using machine learning. We illustrate the effectiveness of this framework in a 4-camera setup, reaching 98% classification accuracy on a variety of locations and held objects for both of the driver's hands. We conclude that this multi-camera framework can be extended to additional tasks such as gaze and pose analysis, with further applications in driver and passenger safety.
