Luke Meyer

Automated speech audiometry: Can it work using open-source pre-trained Kaldi-NL automatic speech recognition?

Dec 19, 2023

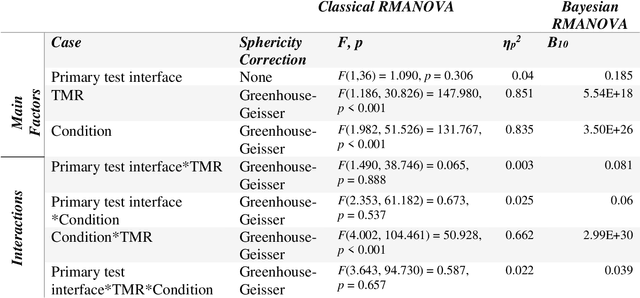

Abstract: A practical speech audiometry tool is the digits-in-noise (DIN) test for hearing screening of populations of varying ages and hearing status. The test is usually conducted by a human supervisor (e.g., a clinician), who scores the responses spoken by the listener, or online, where software scores the responses entered by the listener. The test has 24 digit-triplets presented in an adaptive staircase procedure, resulting in a speech reception threshold (SRT). We propose an alternative automated DIN test setup that can evaluate spoken responses while being conducted without a human supervisor, using the open-source automatic speech recognition toolkit, Kaldi-NL. Thirty self-reported normal-hearing Dutch adults (19-64 years) completed one DIN+Kaldi-NL test. Their spoken responses were recorded and used for evaluating the transcript of decoded responses by Kaldi-NL. Study 1 evaluated the Kaldi-NL performance through its word error rate (WER), defined here as the summed decoding errors, counting only digits found in the transcript, as a percentage of the total number of digits present in the spoken responses. Average WER across participants was 5.0% (range 0 - 48%, SD = 8.8%), with average decoding errors in three triplets per participant. Study 2 analysed the effect that triplets with decoding errors from Kaldi-NL had on the DIN test output (SRT), using bootstrapping simulations. Previous research indicated 0.70 dB as the typical within-subject SRT variability for normal-hearing adults. Study 2 showed that up to four triplets with decoding errors produce SRT variations within this range, suggesting that our proposed setup could be feasible for clinical applications.
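The digit-only WER described in this abstract can be illustrated with a minimal sketch. It assumes that errors are counted as the edit distance between the spoken and decoded digit sequences of each triplet; the abstract does not state how errors were aligned, so this choice, along with the function names `edit_distance` and `digit_wer`, is hypothetical and not the authors' implementation.

```python
from typing import List, Sequence


def edit_distance(ref: Sequence[str], hyp: Sequence[str]) -> int:
    """Levenshtein distance between two token sequences
    (substitutions, insertions, and deletions each cost 1)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1]


def digit_wer(spoken: List[List[str]], decoded: List[List[str]]) -> float:
    """Summed digit errors over all triplets, as a percentage of the
    total number of digits in the spoken responses (assumed definition)."""
    if len(spoken) != len(decoded):
        raise ValueError("spoken and decoded must contain the same number of triplets")
    total_digits = sum(len(s) for s in spoken)
    if total_digits == 0:
        raise ValueError("no spoken digits to score")
    errors = sum(edit_distance(s, d) for s, d in zip(spoken, decoded))
    return 100.0 * errors / total_digits


# Illustrative data only: two spoken triplets, one digit decoded incorrectly.
spoken = [["1", "2", "3"], ["4", "5", "6"]]
decoded = [["1", "2", "3"], ["4", "9", "6"]]
print(f"{digit_wer(spoken, decoded):.1f}%")
```

Summing errors over all triplets before dividing, rather than averaging per-triplet rates, follows the "summed decoding errors" wording in the abstract.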

Evaluating Speech-in-Speech Perception via a Humanoid Robot

Dec 19, 2023

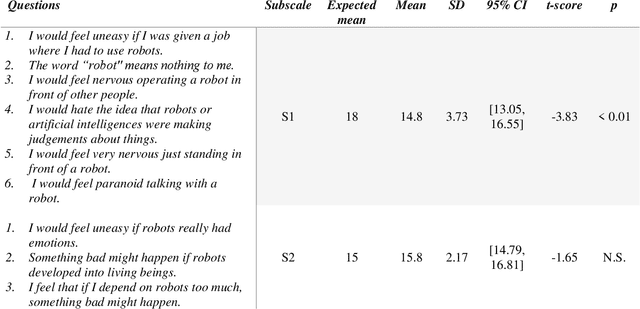

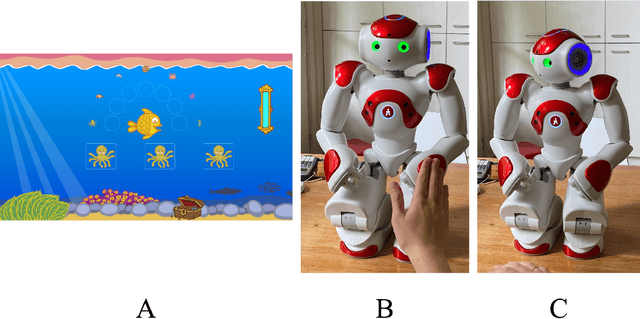

Abstract: Underlying mechanisms of speech perception masked by background speakers, a common daily listening condition, are often investigated using various and lengthy psychophysical tests. The presence of a social agent, such as an interactive humanoid NAO robot, may help maintain engagement and attention. However, such robots potentially have limited sound quality or processing speed. As a first step towards the use of NAO in psychophysical testing of speech-in-speech perception, we compared normal-hearing young adults' performance when using the standard computer interface to that when using a NAO robot to introduce the test and present all corresponding stimuli. Target sentences were presented with colour and number keywords in the presence of competing masker speech at varying target-to-masker ratios. Sentences were produced by the same speaker, but voice differences between the target and masker were introduced using speech synthesis methods. To assess test performance, speech intelligibility and data collection duration were compared between the computer and NAO setups. Human-robot interaction was assessed using the Negative Attitude Towards Robot Scale (NARS) and quantification of behavioural cues (backchannels). Speech intelligibility results showed functional similarity between the computer and NAO setups. Data collection durations were longer when using NAO. NARS results showed participants had a more positive attitude toward robots prior to their interaction with NAO. The presence of more positive backchannels when using NAO suggests higher engagement with the robot in comparison to the computer. Overall, the study presents the potential of the NAO for presenting speech materials and collecting psychophysical measurements for speech-in-speech perception.

Use of a humanoid robot for auditory psychophysical testing

Jun 16, 2023

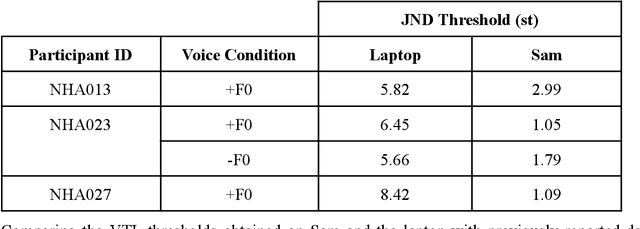

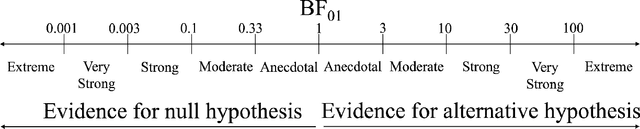

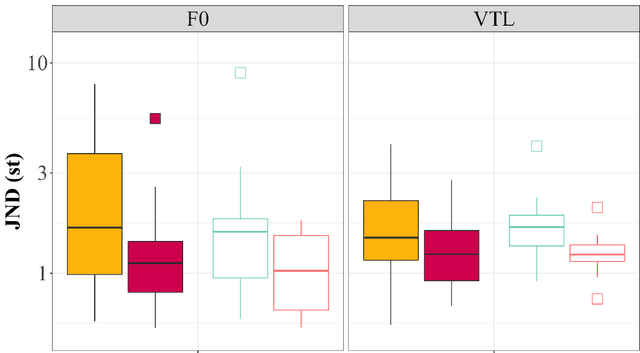

Abstract: Tasks in psychophysical tests can at times be repetitive and cause individuals to lose engagement during the test. To facilitate engagement, we propose the use of a humanoid NAO robot as an alternative interface for conducting psychophysical tests. Specifically, we aim to evaluate the performance of the NAO as an auditory testing interface, given its potential limitations and technical differences, in comparison to the current laptop interface. We examine the results and durations of two voice perception tests, voice cue sensitivity and voice gender categorisation, obtained from both the conventionally used laptop interface and the new NAO interface. Both tests investigate the perception and use of two speaker-specific voice cues, fundamental frequency (F0) and vocal tract length (VTL), important for characterising voice gender. Responses are logged on the laptop using a connected mouse, and on the NAO using the tactile sensors. Comparison of test results from both interfaces shows functional similarity between the interfaces and replicates findings from previous studies with similar tests. Comparison of test durations shows longer testing times with NAO, primarily due to longer processing times in comparison to the laptop, as well as other design limitations due to the implementation of the test on the robot. Despite the inherent constraints of the NAO robot, such as in sound quality, relatively long processing and testing times, and different methods of response logging, the NAO interface appears to facilitate collecting similar data to the current laptop interface, confirming its potential as an alternative psychophysical test interface for auditory perception tests.
