Lukas Meier

High-dimensional additive modeling

Nov 18, 2009

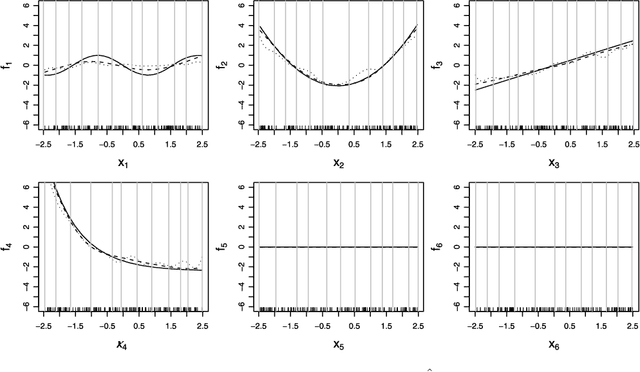

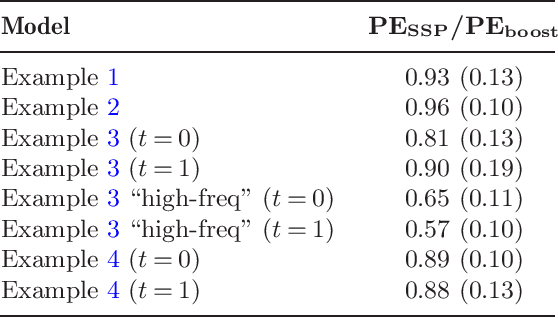

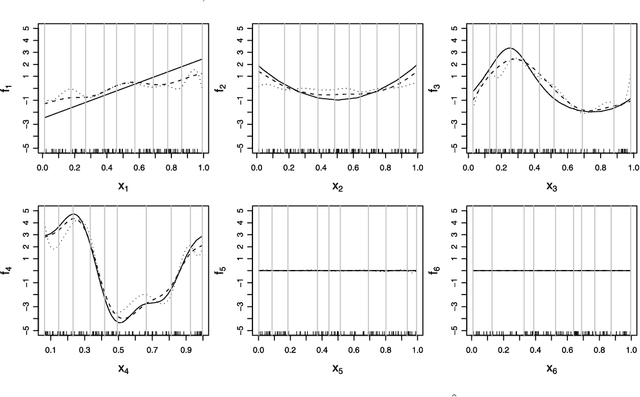

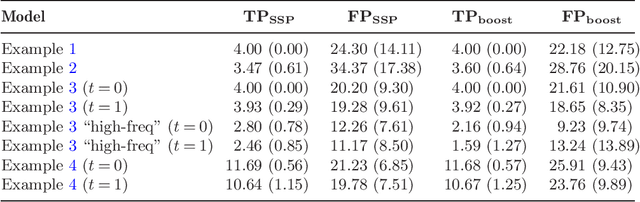

Abstract: We propose a new sparsity-smoothness penalty for high-dimensional generalized additive models. The combination of sparsity and smoothness is crucial for mathematical theory as well as performance for finite-sample data. We present a computationally efficient algorithm, with provable numerical convergence properties, for optimizing the penalized likelihood. Furthermore, we provide oracle results which yield asymptotic optimality of our estimator for high-dimensional but sparse additive models. Finally, an adaptive version of our sparsity-smoothness penalized approach yields large additional performance gains.

* Published in the Annals of Statistics (http://www.imstat.org/aos/) by the Institute of Mathematical Statistics (http://www.imstat.org) at http://dx.doi.org/10.1214/09-AOS692

P-values for high-dimensional regression

Jun 12, 2009

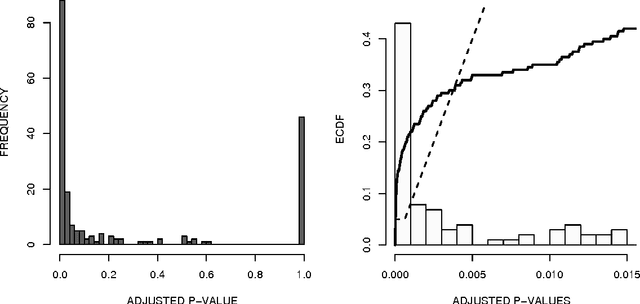

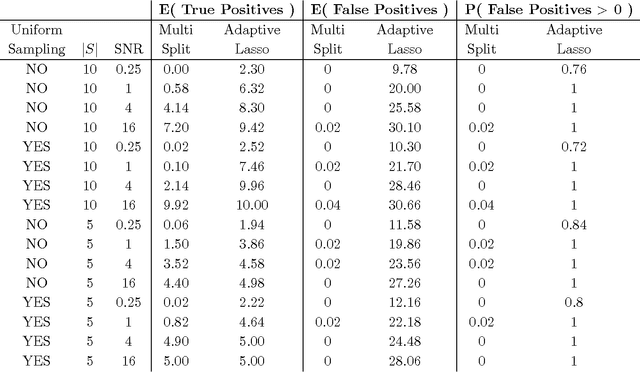

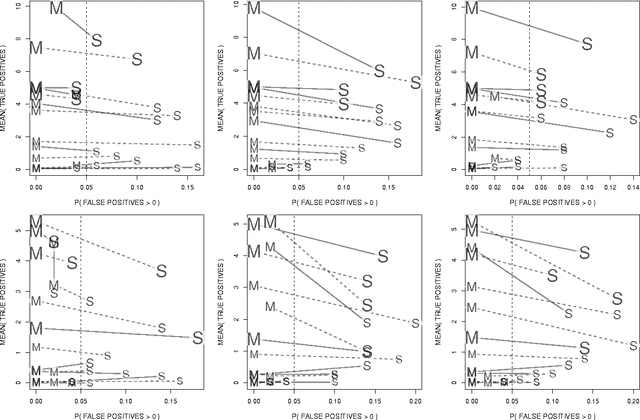

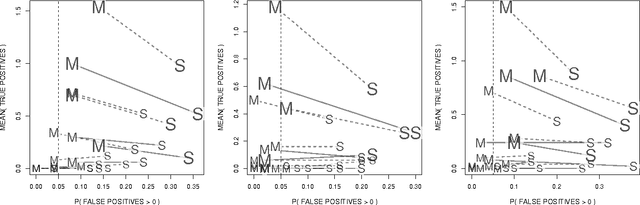

Abstract: Assigning significance in high-dimensional regression is challenging. Most computationally efficient selection algorithms cannot guard against inclusion of noise variables. Asymptotically valid p-values are not available. An exception is a recent proposal by Wasserman and Roeder (2008) which splits the data into two parts. The number of variables is then reduced to a manageable size using the first split, while classical variable selection techniques can be applied to the remaining variables, using the data from the second split. This yields asymptotic error control under minimal conditions. It involves, however, a one-time random split of the data. Results are sensitive to this arbitrary choice: it amounts to a "p-value lottery" and makes it difficult to reproduce results. Here, we show that inference across multiple random splits can be aggregated, while keeping asymptotic control over the inclusion of noise variables. We show that the resulting p-values can be used for control of both family-wise error (FWER) and false discovery rate (FDR). In addition, the proposed aggregation is shown to improve power while reducing the number of falsely selected variables substantially.
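The aggregation idea can be illustrated with a minimal sketch. The abstract does not state the aggregation rule, so the rule used here (twice the median of the per-split p-values, capped at 1) is an assumption chosen for illustration, and the function name `aggregate_pvalues` and the p-values are hypothetical. Each list holds the adjusted p-values one variable received over five random splits, with 1.0 standing for splits in which the variable was not selected on the first half.

```python
from statistics import median


def aggregate_pvalues(pvals_per_split):
    """Combine one variable's per-split p-values into a single value.

    Assumed rule (not given in the abstract): min(1, 2 * median).
    """
    if not pvals_per_split:
        raise ValueError("need at least one split")
    return min(1.0, 2.0 * median(pvals_per_split))


# Hypothetical adjusted p-values over five random splits.
# x1 looks significant in most splits; x2 only in one "lucky" split.
splits = {
    "x1": [0.001, 0.004, 0.002, 0.20, 0.003],
    "x2": [0.03, 1.0, 1.0, 0.60, 1.0],
}

aggregated = {name: aggregate_pvalues(p) for name, p in splits.items()}

for name, p in aggregated.items():
    print(name, p)
```

With a single split, `x2` could appear significant (0.03) or not (1.0) depending on the draw, which is the lottery effect the abstract describes; the aggregated value depends on all splits rather than on one arbitrary choice.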

Least angle and $\ell_1$ penalized regression: A review

May 21, 2008

Abstract: Least Angle Regression is a promising technique for variable selection applications, offering a nice alternative to stepwise regression. It provides an explanation for the similar behavior of LASSO ($\ell_1$-penalized regression) and forward stagewise regression, and provides a fast implementation of both. The idea has caught on rapidly, and sparked a great deal of research interest. In this paper, we give an overview of Least Angle Regression and the current state of related research.

* Published in Statistics Surveys (http://www.i-journals.org/ss/) by the Institute of Mathematical Statistics (http://www.imstat.org) at http://dx.doi.org/10.1214/08-SS035
