Luis A. Vargas-Mieles

The split Gibbs sampler revisited: improvements to its algorithmic structure and augmented target distribution

Jun 28, 2022

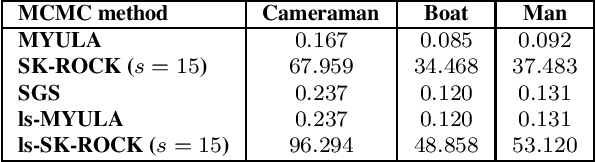

Abstract: This paper proposes a new accelerated proximal Markov chain Monte Carlo (MCMC) methodology to perform Bayesian computation efficiently in imaging inverse problems. The proposed methodology is derived from the Langevin diffusion process and stems from tightly integrating two state-of-the-art proximal Langevin MCMC samplers, SK-ROCK and split Gibbs sampling (SGS), which employ distinctly different strategies to improve convergence speed. More precisely, we show how to integrate, at the level of the Langevin diffusion process, the proximal SK-ROCK sampler, which is based on a stochastic Runge-Kutta-Chebyshev approximation of the diffusion, with the model augmentation and relaxation strategy that SGS exploits to speed up Bayesian computation at the expense of asymptotic bias. This leads to a new and faster proximal SK-ROCK sampler that combines the accelerated quality of the original SK-ROCK sampler with the computational benefits of augmentation and relaxation. Moreover, rather than viewing the augmented and relaxed model as an approximation of the target model, positioning relaxation in a bias-variance trade-off, we propose to regard the augmented and relaxed model as a generalisation of the target model. This then allows us to carefully calibrate the amount of relaxation in order to simultaneously improve the accuracy of the model (as measured by the model evidence) and the sampler's convergence speed. To achieve this, we derive an empirical Bayesian method to automatically estimate the optimal amount of relaxation by maximum marginal likelihood estimation. The proposed methodology is demonstrated with a range of numerical experiments related to image deblurring and inpainting, as well as with comparisons with alternative approaches from the state of the art.
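To make the augmentation and relaxation idea concrete, the following is a minimal toy sketch, not the method proposed in the paper. It assumes a scalar Gaussian model with data-fidelity term f(x) = (y - x)^2 / (2 sigma^2), prior term g(z) = z^2 / (2 tau^2), and a quadratic coupling (x - z)^2 / (2 rho^2) between the variable x and an auxiliary variable z, which is the usual form of the SGS augmented model. All names (`split_gibbs_toy`, `relaxed_posterior_moments`, `sigma`, `tau`, `rho`) are illustrative assumptions. In this toy case both conditionals are Gaussian and can be sampled exactly, so no proximal or SK-ROCK step is involved.

```python
import random
import statistics


def split_gibbs_toy(y, sigma, tau, rho, n_samples, seed=0):
    """Toy split Gibbs sampler for a scalar Gaussian model (illustrative only).

    Augmented density (assumed form):
        pi(x, z) ∝ exp(-(y - x)^2 / (2 sigma^2)
                       - z^2 / (2 tau^2)
                       - (x - z)^2 / (2 rho^2))
    """
    rng = random.Random(seed)
    # Precisions of the two Gaussian conditionals.
    prec_x = 1.0 / sigma**2 + 1.0 / rho**2
    prec_z = 1.0 / tau**2 + 1.0 / rho**2
    x, z = 0.0, 0.0
    samples = []
    for _ in range(n_samples):
        # Draw x | z: likelihood term combined with the coupling term.
        mean_x = (y / sigma**2 + z / rho**2) / prec_x
        x = rng.gauss(mean_x, prec_x ** -0.5)
        # Draw z | x: prior term combined with the coupling term.
        mean_z = (x / rho**2) / prec_z
        z = rng.gauss(mean_z, prec_z ** -0.5)
        samples.append(x)
    return samples


def relaxed_posterior_moments(y, sigma, tau, rho):
    """Mean and variance of the x-marginal of the augmented model.

    Integrating z out widens the prior variance from tau^2 to tau^2 + rho^2.
    """
    var = 1.0 / (1.0 / sigma**2 + 1.0 / (tau**2 + rho**2))
    return var * y / sigma**2, var


if __name__ == "__main__":
    xs = split_gibbs_toy(y=2.0, sigma=1.0, tau=1.0, rho=0.5, n_samples=20000)
    print("empirical mean/var:", statistics.fmean(xs), statistics.variance(xs))
    print("relaxed model mean/var:", relaxed_posterior_moments(2.0, 1.0, 1.0, 0.5))
```

In this toy setting the exact posterior (the limit rho → 0) has mean 1 and variance 1/2, whereas the relaxed model with rho = 0.5 has mean 10/9 and variance 5/9. This shows the asymptotic bias that relaxation introduces and why the choice of rho matters. A larger rho also weakens the coupling between x and z, so the Gibbs chain mixes faster.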
