Luciano Zunino

Universality and diversity in word patterns

Aug 23, 2022

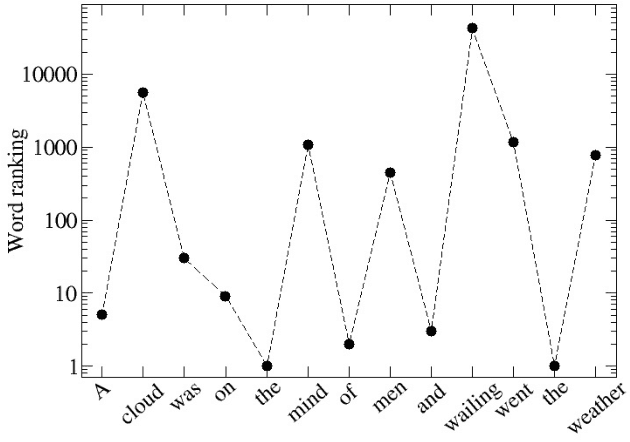

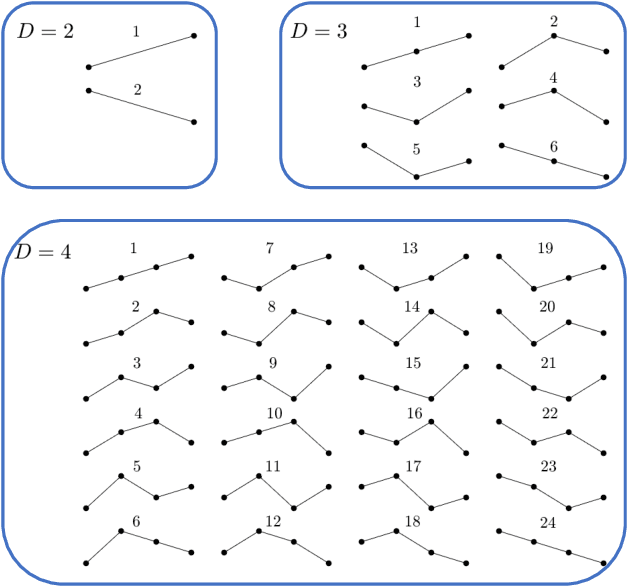

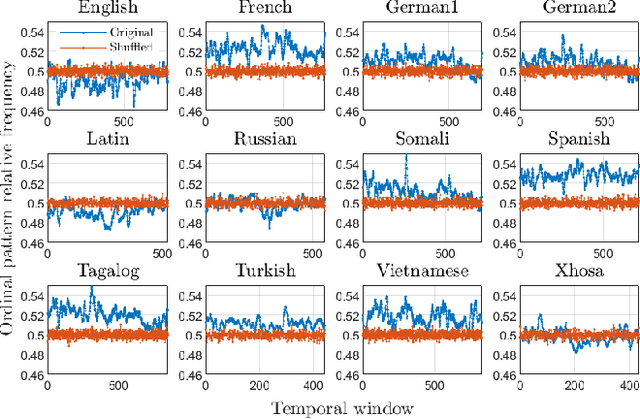

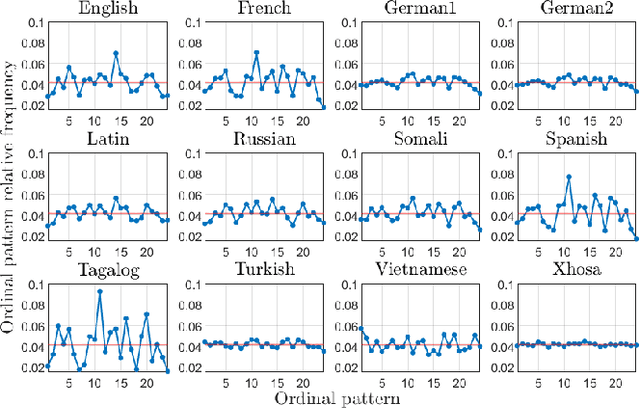

Abstract: Words are fundamental linguistic units that connect thoughts and things through meaning. However, words do not appear independently in a text sequence. The existence of syntactic rules induces correlations among neighboring words. Further, words are not evenly distributed but approximately follow a power law, since terms with purely semantic content appear much less often than terms that specify grammatical relations. Using an ordinal pattern approach, we present an analysis of lexical statistical connections for eleven major languages. We find that the diverse ways in which languages express word relations give rise to unique pattern distributions. Remarkably, we find that these relations can be modeled with a Markov model of order 2 and that this result is universally valid for all the studied languages. Furthermore, fluctuations of the pattern distributions allow us to determine the historical period when a text was written and its author. Taken together, these results emphasize the relevance of time series analysis and information-theoretic methods for the understanding of statistical correlations in natural languages.
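As a rough illustration of what an ordinal pattern analysis of text can look like, the sketch below turns a word sequence into a numeric series and counts the ordinal patterns of length 3. The abstract does not say how words are mapped to numbers, so the frequency-rank mapping, the tie-breaking rule, the pattern length and the function names `words_to_ranks` and `ordinal_pattern_distribution` are all assumptions made for this example, not the authors' procedure.

```python
from collections import Counter
from itertools import permutations


def words_to_ranks(words):
    """Map each word to its frequency rank (1 = most frequent).

    Assumption: this rank mapping is one plausible choice, not
    necessarily the one used in the paper.
    """
    freq = Counter(words)
    rank = {w: r for r, (w, _) in enumerate(freq.most_common(), start=1)}
    return [rank[w] for w in words]


def ordinal_pattern_distribution(series, order=3):
    """Relative frequency of every ordinal pattern of length `order`."""
    if len(series) < order:
        raise ValueError("series is shorter than the pattern length")
    counts = Counter()
    for i in range(len(series) - order + 1):
        window = series[i:i + order]
        # Pattern = positions of the window sorted by value.
        # Assumption: ties are broken by position (stable sort).
        pattern = tuple(sorted(range(order), key=lambda k: window[k]))
        counts[pattern] += 1
    total = sum(counts.values())
    return {p: counts[p] / total for p in permutations(range(order))}


text = "the cat sat on the mat and the dog sat on the rug"
ranks = words_to_ranks(text.split())
distribution = ordinal_pattern_distribution(ranks, order=3)
for pattern, prob in sorted(distribution.items()):
    print(pattern, round(prob, 3))
```

Comparing such distributions across languages, periods or authors is the kind of analysis the abstract describes; a real study would need far longer texts than this toy sentence.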

Discriminating image textures with the multiscale two-dimensional complexity-entropy causality plane

Sep 07, 2016

Abstract: The aim of this paper is to further explore the usefulness of the two-dimensional complexity-entropy causality plane as a texture image descriptor. A multiscale generalization is introduced in order to distinguish between different roughness features of images at small and large spatial scales. Numerically generated two-dimensional structures are initially considered to illustrate basic concepts in a controlled framework. Then, more realistic situations are studied. The results obtained confirm that intrinsic spatial correlations of images are successfully unveiled by this multiscale symbolic information-theory approach. Consequently, we conclude that the proposed representation space is a versatile and practical tool for identifying, characterizing and discriminating image textures.

* Accepted for publication in Chaos, Solitons & Fractals
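The texture abstract above places an image on a plane spanned by normalized permutation entropy and statistical complexity. A minimal sketch of one common way to compute such a point follows. It assumes 2x2 ordinal patches, spatial delays `tau_x` and `tau_y` as the "multiscale" parameter, ties broken by position, and the Jensen-Shannon form of statistical complexity; the function names are hypothetical and the paper's exact settings may differ.

```python
import math
import random
from collections import Counter


def ordinal_probs_2d(image, dx=2, dy=2, tau_x=1, tau_y=1):
    """Probabilities of ordinal patterns over dx-by-dy patches.

    `image` is a list of equal-length rows; tau_x and tau_y are the
    spatial delays between sampled pixels (assumed multiscale knob).
    """
    rows, cols = len(image), len(image[0])
    counts = Counter()
    for i in range(rows - (dy - 1) * tau_y):
        for j in range(cols - (dx - 1) * tau_x):
            patch = [image[i + a * tau_y][j + b * tau_x]
                     for a in range(dy) for b in range(dx)]
            # Stable sort: equal pixel values keep their scan order.
            order = sorted(range(dx * dy), key=patch.__getitem__)
            counts[tuple(order)] += 1
    total = sum(counts.values())
    if total == 0:
        raise ValueError("image is too small for this patch and delay")
    probs = [c / total for c in counts.values()]
    # Pad with zeros for patterns that never occurred.
    return probs + [0.0] * (math.factorial(dx * dy) - len(probs))


def shannon(p):
    return -sum(x * math.log(x) for x in p if x > 0)


def entropy_complexity(probs):
    """Return (normalized entropy H, Jensen-Shannon complexity C)."""
    n = len(probs)
    uniform = [1.0 / n] * n
    h = shannon(probs) / math.log(n)
    mix = [(p + u) / 2 for p, u in zip(probs, uniform)]
    js = shannon(mix) - shannon(probs) / 2 - shannon(uniform) / 2
    # Largest possible divergence from uniform (one pattern only).
    js_max = -0.5 * ((n + 1) / n * math.log(n + 1)
                     + math.log(n) - 2 * math.log(2 * n))
    return h, (js / js_max) * h


rng = random.Random(0)
noise = [[rng.random() for _ in range(64)] for _ in range(64)]
ramp = [[r * 64 + c for c in range(64)] for r in range(64)]
for tau in (1, 2):
    print(tau, entropy_complexity(ordinal_probs_2d(noise, tau_x=tau, tau_y=tau)))
print(entropy_complexity(ordinal_probs_2d(ramp)))
```

Uncorrelated noise should land near the high-entropy, low-complexity corner of the plane, while a perfectly regular ramp collapses to a single pattern with zero entropy and zero complexity; textures with spatial correlations fall in between.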
