Luciana T. Menon

Style Transfer Applied to Face Liveness Detection with User-Centered Models

Jul 16, 2019

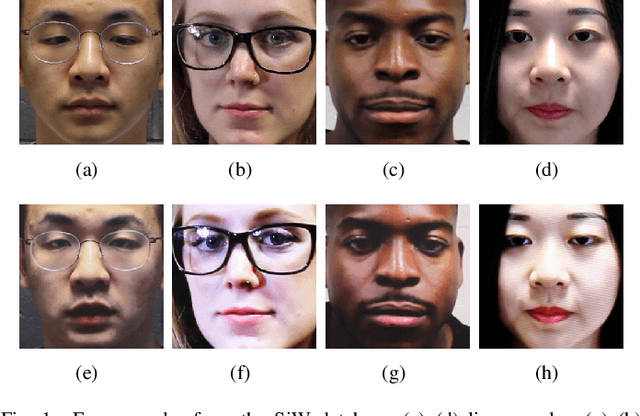

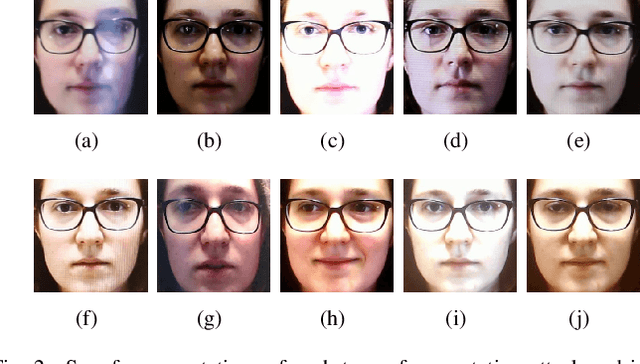

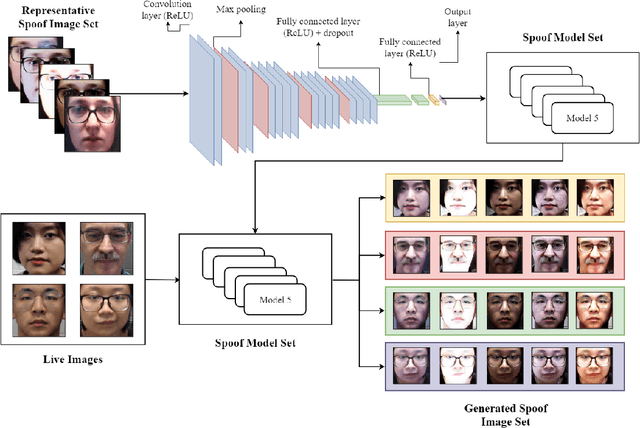

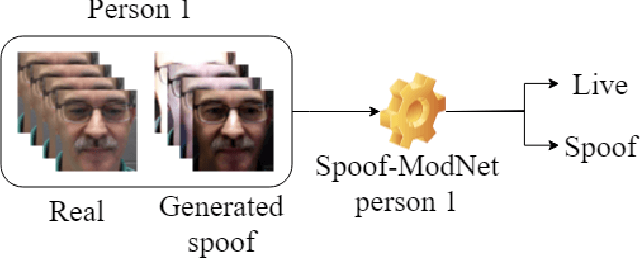

Abstract: This paper proposes a face anti-spoofing user-centered model (FAS-UCM). The main difficulty with user-centered models is obtaining fraudulent (spoof) images from every user to train them. To overcome this problem, the proposed method is divided into three main parts: (1) generation of new spoof images, based on style transfer and spoof image representation models; (2) training of a Convolutional Neural Network (CNN) for liveness detection; and (3) evaluation of the live and spoof testing images for each subject. The generalization of the CNN to perform style transfer has shown promising qualitative results. Preliminary results show that the proposed method can distinguish between live and spoof images on the SiW database, with an average classification error rate of 0.22.
