Luca Capriotti

Guided and Variance-Corrected Fusion with One-shot Style Alignment for Large-Content Image Generation

Dec 17, 2024

Abstract: Producing large images using small diffusion models is gaining popularity, as the cost of training large models can be prohibitive. A common approach jointly generates a series of overlapping image patches and obtains large images by merging adjacent patches. However, results from existing methods often exhibit obvious artifacts, e.g., seams and inconsistent objects and styles. To address these issues, we propose Guided Fusion (GF), which mitigates the negative impact of distant image regions by applying a weighted average to the overlapping regions. Moreover, we propose Variance-Corrected Fusion (VCF), which corrects the data variance after averaging, producing more accurate fusion for the Denoising Diffusion Probabilistic Model. Furthermore, we propose a one-shot Style Alignment (SA), which generates a coherent style for large images by adjusting the initial input noise without adding extra computational burden. Extensive experiments demonstrate that the proposed fusion methods significantly improve the quality of the generated images. As a plug-and-play module, the proposed method can be widely applied to enhance other fusion-based methods for large image generation.
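The abstract does not give the fusion formulas, so the following is only a minimal sketch of the general idea, not the authors' GF or VCF implementation. It merges overlapping 1-D patches with a weighted average and shows why averaging independent zero-mean noise shrinks its variance in the overlap. The function name `fuse`, the triangular weights, and the correction by dividing by the square root of the summed squared weights are all assumptions made for illustration.

```python
import numpy as np

def fuse(patches, offsets, weights, length, variance_corrected=False):
    """Merge overlapping patches along the last axis (hypothetical helper).

    Plain mode divides by the sum of weights (a weighted average).
    Corrected mode divides by sqrt(sum of squared weights), which keeps
    unit variance when the inputs are independent, zero-mean, unit-variance noise.
    Every output position must be covered by at least one patch.
    """
    lead = patches[0].shape[:-1]
    num = np.zeros(lead + (length,))
    w_sum = np.zeros(length)
    w_sq = np.zeros(length)
    for p, off, w in zip(patches, offsets, weights):
        sl = slice(off, off + p.shape[-1])
        num[..., sl] += w * p      # weighted contribution of this patch
        w_sum[sl] += w             # normalizer for the plain weighted average
        w_sq[sl] += w ** 2         # normalizer for the variance-preserving variant
    denom = np.sqrt(w_sq) if variance_corrected else w_sum
    return num / denom

rng = np.random.default_rng(0)
patch_len, length = 6, 10
# Assumed weights: larger near the patch center, smaller near its borders.
w = np.array([1.0, 2.0, 3.0, 3.0, 2.0, 1.0])
# Two patches of unit-variance noise that overlap at positions 4 and 5.
patches = [rng.standard_normal((50_000, patch_len)) for _ in range(2)]
plain = fuse(patches, [0, 4], [w, w], length)
corrected = fuse(patches, [0, 4], [w, w], length, variance_corrected=True)

print("plain variance per position:    ", plain.var(axis=0).round(2))
print("corrected variance per position:", corrected.var(axis=0).round(2))
```

In the overlap the plain average has variance below one (in theory 5/9 at those two positions with these weights), while the corrected version stays close to one everywhere. This correction is only valid for zero-mean noise; applying it to a signal with a nonzero mean would rescale that mean.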

CFR-ICL: Cascade-Forward Refinement with Iterative Click Loss for Interactive Image Segmentation

Mar 09, 2023

Abstract: Click-based interactive segmentation aims to extract the object of interest from an image with the guidance of user clicks. Recent work has achieved great overall performance by reusing the segmentation from the previous output. However, in most state-of-the-art approaches, 1) the inference stage involves inflexible heuristic rules and a separate refinement model; and 2) the training cannot balance the number of user clicks and model performance. To address these challenges, we propose a click-based and mask-guided interactive image segmentation framework containing three novel components: Cascade-Forward Refinement (CFR), Iterative Click Loss (ICL), and SUEM image augmentation. The proposed ICL allows model training to improve segmentation and reduce user interactions simultaneously. The CFR offers a unified inference framework to generate segmentation results in a coarse-to-fine manner. The proposed SUEM augmentation is a comprehensive way to create large and diverse training sets for interactive image segmentation. Extensive experiments demonstrate the state-of-the-art performance of the proposed approach on five public datasets. Remarkably, our model achieves an average of 2.9 and 7.5 clicks of NoC@95 on the Berkeley and DAVIS sets, respectively, improving by 33.2% and 15.5% over the previous state-of-the-art results. The code and trained model are available at https://github.com/TitorX/CFR-ICL-Interactive-Segmentation.
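The NoC@95 figures above refer to the number of clicks needed to reach an IoU of 0.95. The sketch below shows how such a metric is commonly computed from a per-click IoU trace. The function names and the cap of 20 clicks are assumptions, since the abstract does not state the evaluation protocol; the linked repository is the authoritative source for the authors' evaluation code.

```python
def noc(iou_per_click, threshold=0.95, max_clicks=20):
    """Number of clicks until IoU first reaches `threshold` (hypothetical helper).

    `iou_per_click[i]` is the IoU after click i + 1. If the threshold is
    never reached within `max_clicks`, the cap itself is returned.
    """
    for click, iou in enumerate(iou_per_click[:max_clicks], start=1):
        if iou >= threshold:
            return click
    return max_clicks

def mean_noc(traces, threshold=0.95, max_clicks=20):
    """Average NoC over a dataset given one IoU trace per image."""
    return sum(noc(t, threshold, max_clicks) for t in traces) / len(traces)

# Made-up IoU traces for three images, for illustration only.
traces = [
    [0.80, 0.93, 0.96],          # reaches 0.95 on click 3
    [0.97],                      # reaches 0.95 on click 1
    [0.50] * 25,                 # never reaches 0.95, counted as the cap (20)
]
print(mean_noc(traces))
```

Lower values are better: a mean of 2.9 on a dataset would mean that, on average, fewer than three clicks are needed per image to reach the target IoU.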

An Efficient Instance Segmentation Approach for Extracting Fission Gas Bubbles on U-10Zr Annular Fuel

Feb 08, 2023

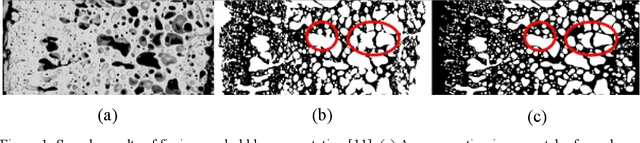

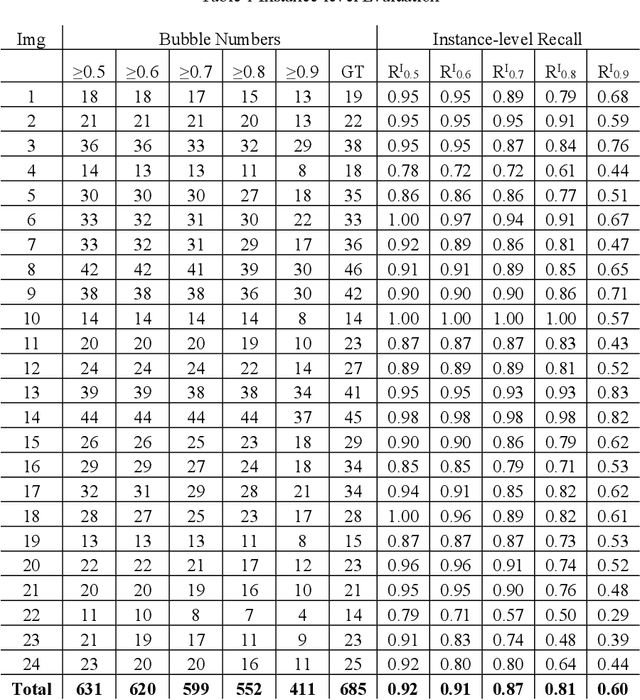

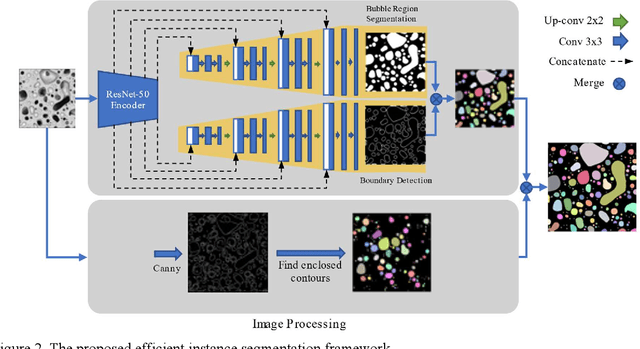

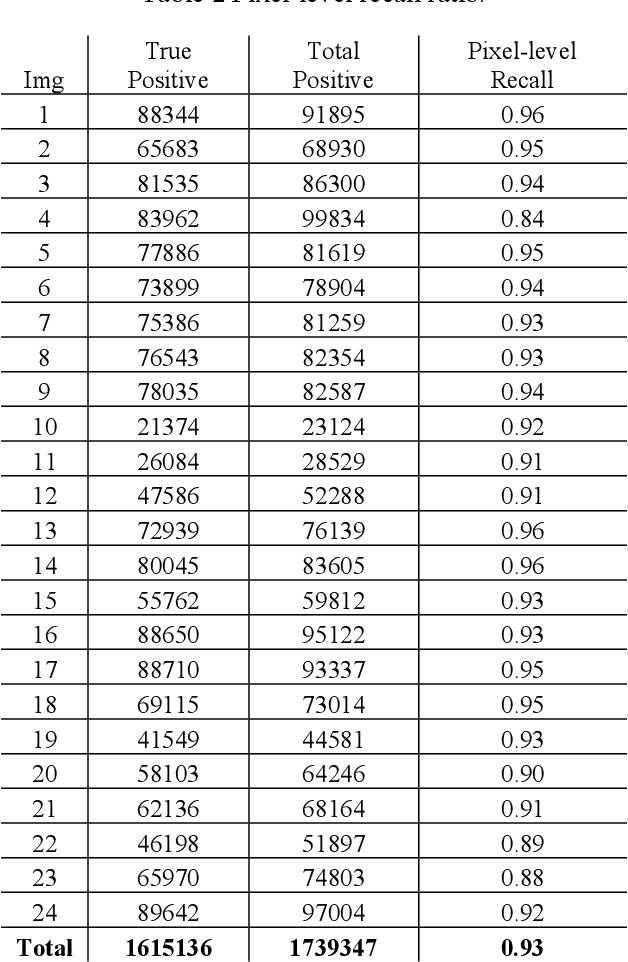

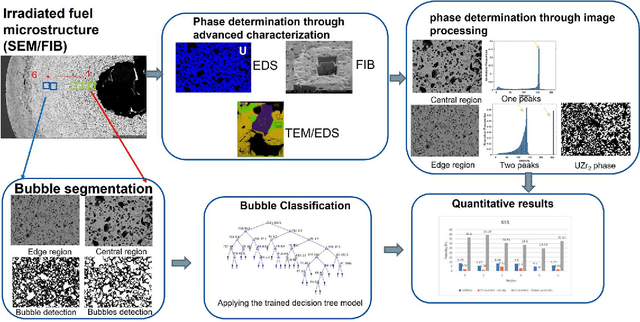

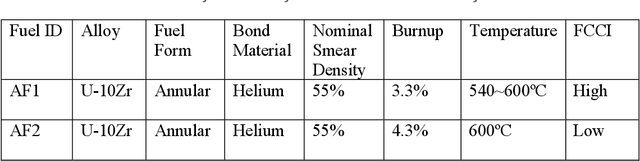

Abstract: U-10Zr-based nuclear fuel is pursued as a primary candidate for next-generation sodium-cooled fast reactors. However, more advanced characterization and analysis are needed to form a fundamental understanding of the fuel performance and to qualify U-10Zr fuel for commercial use. The movement of lanthanides across the fuel section from the hot fuel center to the cool cladding surface is one of the key factors affecting fuel performance. In the advanced annular U-10Zr fuel, the lanthanides present as fission gas bubbles. Due to a lack of annotated data, existing literature utilized a multiple-threshold method to separate the bubbles and calculate bubble statistics on an annular fuel. However, the multiple-threshold method cannot achieve robust performance on images with different qualities and contrasts, and cannot distinguish different bubbles. This paper proposes a hybrid framework for efficient bubble segmentation. We develop a bubble annotation tool and generate the first fission gas bubble dataset with more than 3000 bubbles from 24 images. A multi-task deep learning network integrating U-Net and ResNet is designed to accomplish instance-level bubble segmentation. Combining the segmentation results with an image processing step achieves the best recall ratio of more than 90% with very limited annotated data. Our model shows outstanding improvement compared with the previously proposed thresholding method. The proposed method is promising for generating a more accurate quantitative analysis of fission gas bubbles on U-10Zr annular fuels. The results will contribute to identifying the bubbles with lanthanides and ultimately to building the relationship between the thermal gradient and lanthanide movement in U-10Zr annular fuels. Moreover, the deep learning model is applicable to other similar material microstructure segmentation tasks.

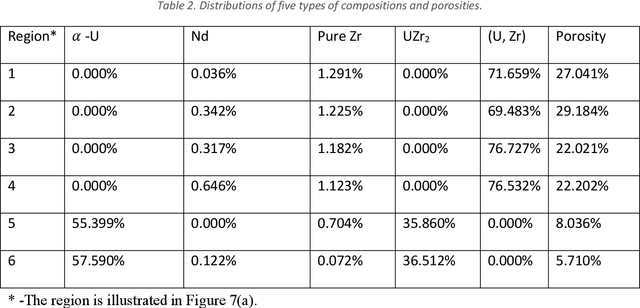

Advanced Characterization-Informed Framework and Quantitative Insight to Irradiated Annular U-10Zr Metallic Fuels

Oct 17, 2022

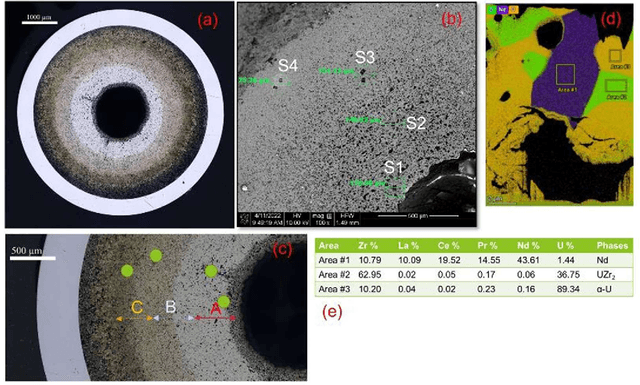

Abstract: U-10Zr-based metallic nuclear fuel is a promising fuel candidate for next-generation sodium-cooled fast reactors. The research experience of the Idaho National Laboratory for this type of fuel dates back to the 1960s. Idaho National Laboratory researchers have accumulated a considerable amount of experience and knowledge regarding fuel performance at the engineering scale. However, limited advanced characterization and a lack of proper data analysis tools have prevented a mechanistic understanding of fuel microstructure evolution and property degradation during irradiation. This paper proposes a new workflow, coupled with domain knowledge obtained by advanced post-irradiation examination methods, to provide unprecedented and quantified insights into the fission gas bubbles and pores, and the lanthanide distribution, in an annular fuel irradiated in the Advanced Test Reactor. In the study, researchers identify and confirm that the Zr-bearing secondary phases exist and generate the quantitative ratios of seven microstructures along the thermal gradient. Moreover, the distributions of fission gas bubbles on two samples of U-10Zr advanced fuels were quantitatively compared. Conclusive findings were obtained and allowed for evaluation of the lanthanide transportation through connected bubbles based on approximately 67,000 fission gas bubbles of the two advanced samples.
