Linhui Huang

CONFINE: Conformal Prediction for Interpretable Neural Networks

Jun 01, 2024

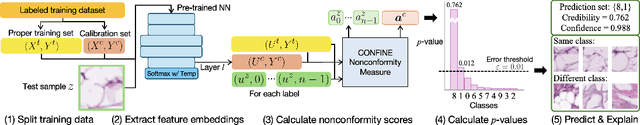

Abstract:Deep neural networks exhibit remarkable performance, yet their black-box nature limits their utility in fields like healthcare where interpretability is crucial. Existing explainability approaches often sacrifice accuracy and lack quantifiable measures of prediction uncertainty. In this study, we introduce Conformal Prediction for Interpretable Neural Networks (CONFINE), a versatile framework that generates prediction sets with statistically robust uncertainty estimates instead of point predictions to enhance model transparency and reliability. CONFINE not only provides example-based explanations and confidence estimates for individual predictions but also boosts accuracy by up to 3.6%. We define a new metric, correct efficiency, to evaluate the fraction of prediction sets that contain precisely the correct label and show that CONFINE achieves correct efficiency of up to 3.3% higher than the original accuracy, matching or exceeding prior methods. CONFINE's marginal and class-conditional coverages attest to its validity across tasks spanning medical image classification to language understanding. Being adaptable to any pre-trained classifier, CONFINE marks a significant advance towards transparent and trustworthy deep learning applications in critical domains.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge