Lina Hacker

Distribution-informed and wavelength-flexible data-driven photoacoustic oximetry

Mar 21, 2024

Abstract: Significance: Photoacoustic imaging (PAI) promises to measure spatially-resolved blood oxygen saturation, but suffers from a lack of accurate and robust spectral unmixing methods to deliver on this promise. Accurate blood oxygenation estimation could have important clinical applications, from cancer detection to quantifying inflammation. Aim: This study addresses the inflexibility of existing data-driven methods for estimating blood oxygenation in PAI by introducing a recurrent neural network architecture. Approach: We created 25 simulated training dataset variations to assess neural network performance. We used a long short-term memory network to implement a wavelength-flexible network architecture and proposed the Jensen-Shannon divergence to predict the most suitable training dataset. Results: The network architecture can handle arbitrary input wavelengths and outperforms linear unmixing and the previously proposed learned spectral decolouring method. Small changes in the training data significantly affect the accuracy of our method, but we find that the Jensen-Shannon divergence correlates with the estimation error and is thus suitable for predicting the most appropriate training datasets for any given application. Conclusions: A flexible data-driven network architecture combined with the Jensen-Shannon divergence to predict the best training dataset provides a promising direction that might enable robust data-driven photoacoustic oximetry for clinical use cases.
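As an illustration of the dataset-selection idea in the abstract above, the following is a minimal sketch of ranking candidate training datasets by their Jensen-Shannon divergence to a target dataset. The abstract does not say which quantity is compared or how it is binned, so the 1-D feature samples, the shared histogram binning, and the names `js_divergence` and `rank_training_sets` are all assumptions made for this example, not the authors' implementation.

```python
import numpy as np


def js_divergence(p, q):
    """Jensen-Shannon divergence (log base 2, so in [0, 1]) of two histograms."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    p = p / p.sum()  # normalise counts to probabilities
    q = q / q.sum()
    m = 0.5 * (p + q)  # mixture distribution

    def kl(a, b):
        # Terms with a == 0 contribute nothing; b > 0 wherever a > 0.
        mask = a > 0
        return np.sum(a[mask] * np.log2(a[mask] / b[mask]))

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


def rank_training_sets(target, candidates, bins=50):
    """Sort candidate datasets by divergence from the target, lowest first.

    `target` is an array of 1-D feature values (hypothetical choice of
    feature); `candidates` maps a dataset name to an array of the same kind.
    """
    pooled = np.concatenate(
        [np.ravel(target)] + [np.ravel(c) for c in candidates.values()]
    )
    # Shared bin edges so that all histograms are directly comparable.
    edges = np.linspace(pooled.min(), pooled.max(), bins + 1)
    target_hist, _ = np.histogram(target, bins=edges)

    scores = {}
    for name, samples in candidates.items():
        hist, _ = np.histogram(samples, bins=edges)
        scores[name] = js_divergence(target_hist, hist)
    return sorted(scores.items(), key=lambda item: item[1])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    target = rng.normal(0.0, 1.0, 5000)
    candidates = {
        "sim_a": rng.normal(0.1, 1.0, 5000),  # hypothetical dataset names
        "sim_b": rng.normal(3.0, 1.0, 5000),
    }
    for name, score in rank_training_sets(target, candidates):
        print(f"{name}: JSD = {score:.3f}")
```

Under these assumptions, the candidate with the lowest divergence would be the one suggested for training. Whether that ranking tracks estimation error in practice depends on the choice of compared quantity, which this sketch leaves open.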

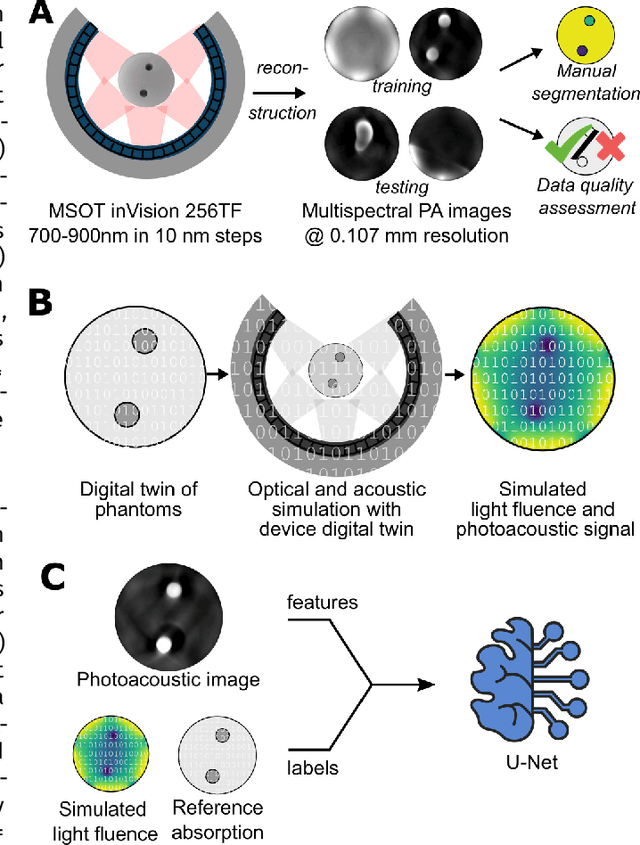

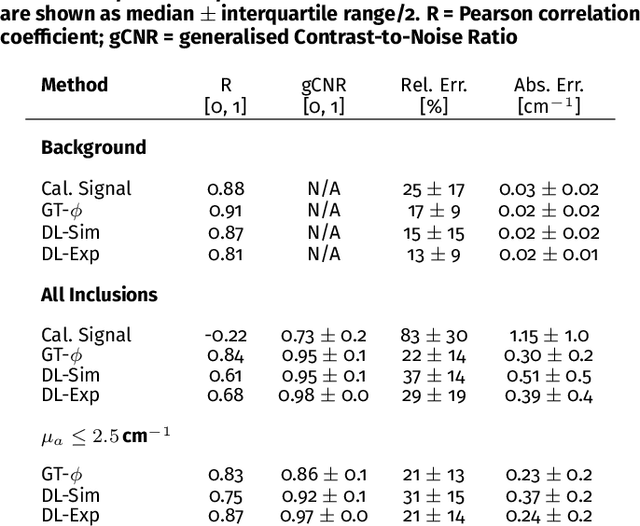

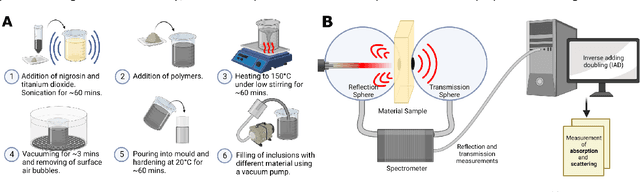

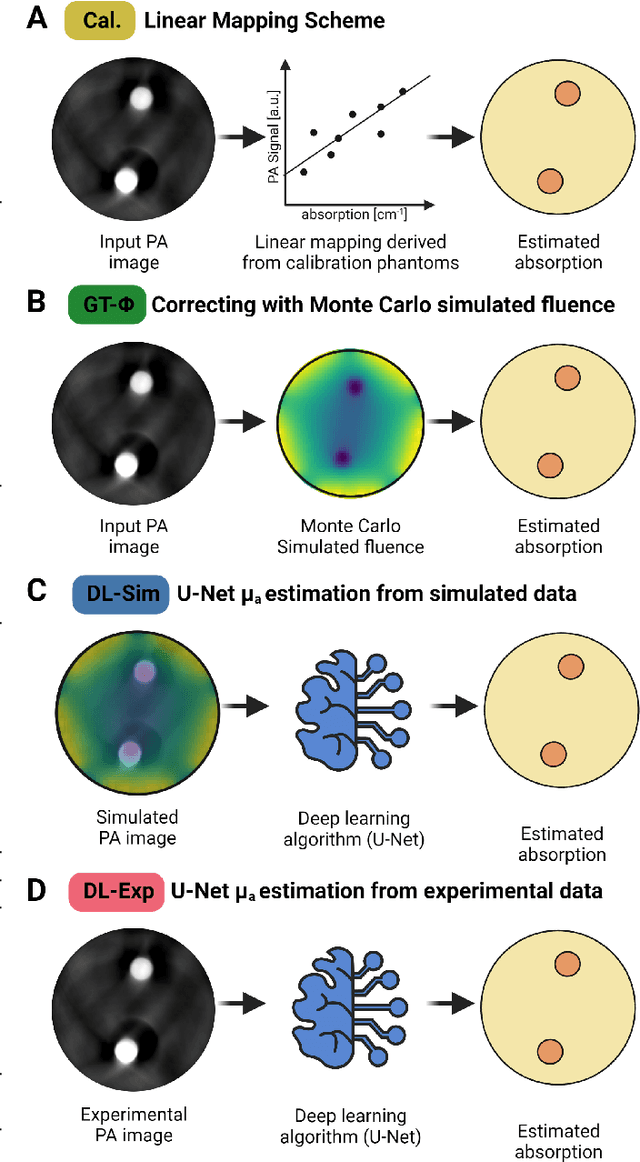

Moving beyond simulation: data-driven quantitative photoacoustic imaging using tissue-mimicking phantoms

Jun 11, 2023

Abstract: Accurate measurement of optical absorption coefficients from photoacoustic imaging (PAI) data would enable direct mapping of molecular concentrations, providing vital clinical insight. The ill-posed nature of the problem of absorption coefficient recovery has prohibited PAI from achieving this goal in living systems due to the domain gap between simulation and experiment. To bridge this gap, we introduce a collection of experimentally well-characterised imaging phantoms and their digital twins. This first-of-a-kind phantom data set enables supervised training of a U-Net on experimental data for pixel-wise estimation of absorption coefficients. We show that training on simulated data results in artefacts and biases in the estimates, reinforcing the existence of a domain gap between simulation and experiment. Training on experimentally acquired data, however, yielded more accurate and robust estimates of optical absorption coefficients. We compare the results to fluence correction with a Monte Carlo model from reference optical properties of the materials, which yields a quantification error of approximately 20%. Application of the trained U-Nets to a blood flow phantom demonstrated spectral biases when training on simulated data, while application to a mouse model highlighted the ability of both learning-based approaches to recover the depth-dependent loss of signal intensity. We demonstrate that training on experimental phantoms can restore the correlation of signal amplitudes measured in depth. While the absolute quantification error remains high and further improvements are needed, our results highlight the promise of deep learning to advance quantitative PAI.
