Lina Dencik

The politics of deceptive borders: 'biomarkers of deceit' and the case of iBorderCtrl

Jan 10, 2020

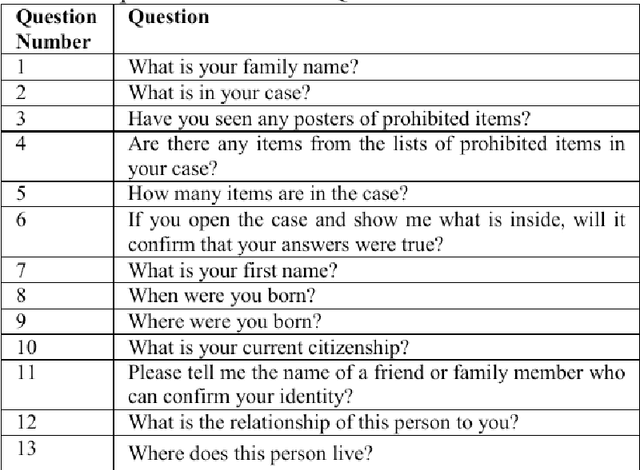

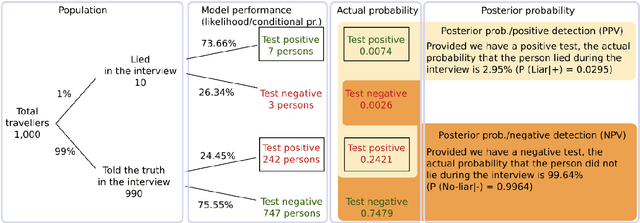

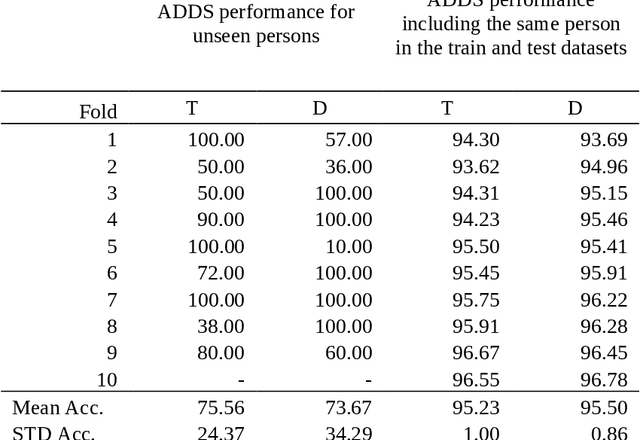

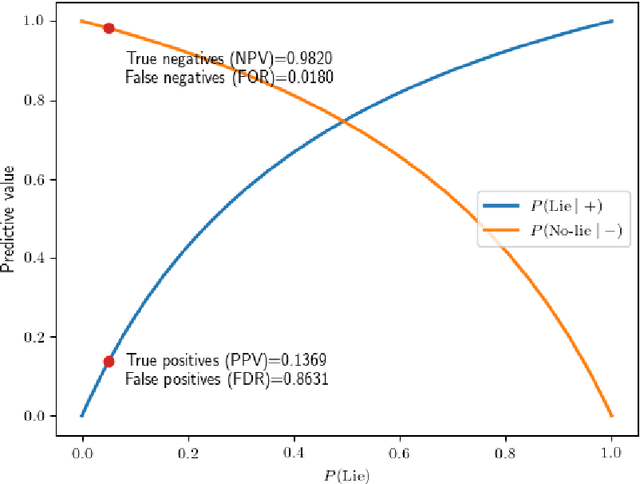

Abstract: This paper critically examines a recently developed proposal for a border control system called iBorderCtrl, designed to detect deception based on facial recognition technology and the measurement of micro-expressions, termed 'biomarkers of deceit'. We situate our analysis of this project, funded under the European Commission's Horizon 2020 programme, in the wider political economy of 'emotional AI' and the history of deception detection technologies. We then move on to interrogate the design of iBorderCtrl using publicly available documents and assess the assumptions and scientific validation underpinning the project design. Finally, drawing on a Bayesian analysis, we outline statistical fallacies in the foundational premise of mass screening and argue that it is very unlikely that the model that iBorderCtrl provides for deception detection would work in practice. By interrogating actual systems in this way, we argue that we can begin to question the very premise of the development of data-driven systems, and emotional AI and deception detection in particular, pushing back on the assumption that these systems are fulfilling the tasks they claim to be attending to and instead asking what function such projects carry out in the creation of subjects and management of populations. This function is not merely technical but, rather, we argue, distinctly political and forms part of a mode of governance increasingly shaping life opportunities and fundamental rights.

What does it mean to solve the problem of discrimination in hiring? Social, technical and legal perspectives from the UK on automated hiring systems

Sep 28, 2019

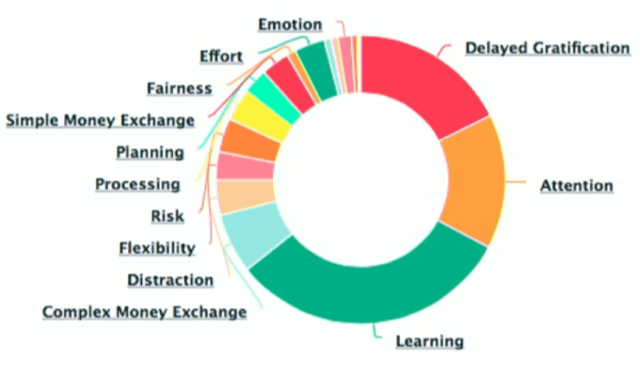

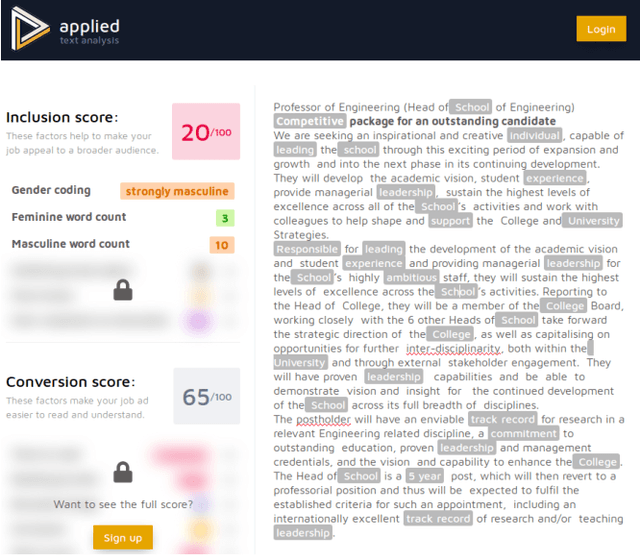

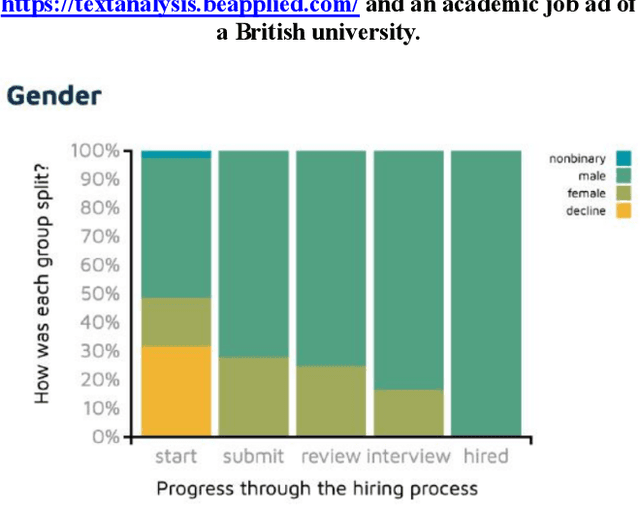

Abstract: The ability to get and keep a job is a key aspect of participating in society and sustaining livelihoods. Yet the way decisions are made on who is eligible for jobs, and why, is rapidly changing with the advent and growth in uptake of automated hiring systems (AHSs) powered by data-driven tools. Key concerns about such AHSs include the lack of transparency and potential limitation of access to jobs for specific profiles. In relation to the latter, however, several of these AHSs claim to detect and mitigate discriminatory practices against protected groups and promote diversity and inclusion at work. Yet whilst these tools have a growing user-base around the world, such claims of bias mitigation are rarely scrutinised and evaluated, and when they are, it has almost exclusively been from a US socio-legal perspective. In this paper, we introduce a perspective outside the US by critically examining how three prominent AHSs in regular use in the UK (HireVue, Pymetrics and Applied) understand and attempt to mitigate bias and discrimination. Using publicly available documents, we describe how their tools are designed, validated and audited for bias, highlighting assumptions and limitations, before situating these in the socio-legal context of the UK. The UK has a very different legal background to the US in terms not only of hiring and equality law, but also in terms of data protection (DP) law. We argue that this might be important for addressing concerns about transparency and could mean a challenge to building bias mitigation into AHSs definitively capable of meeting EU legal standards. This is significant as these AHSs, especially those developed in the US, may obscure rather than improve systemic discrimination in the workplace.
