The politics of deceptive borders: 'biomarkers of deceit' and the case of iBorderCtrl


Jan 10, 2020

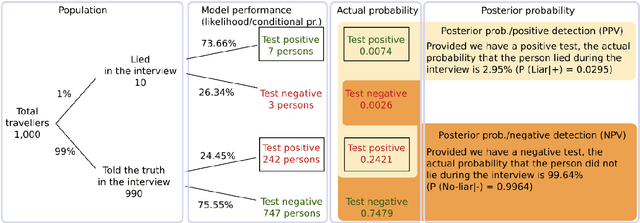

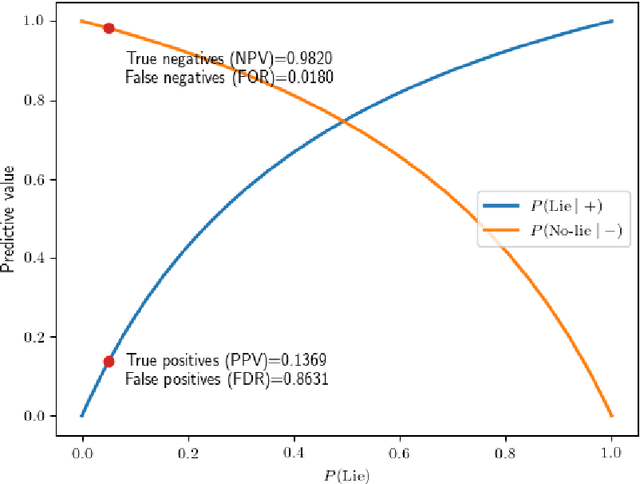

This paper critically examines iBorderCtrl, a recently proposed border control system designed to detect deception using facial recognition technology and the measurement of micro-expressions, termed 'biomarkers of deceit'. The project is funded under the European Commission's Horizon 2020 programme. We situate our analysis in the wider political economy of 'emotional AI' and the history of deception detection technologies. We then interrogate the design of iBorderCtrl using publicly available documents and assess the assumptions and scientific validation underpinning it. Finally, drawing on a Bayesian analysis, we outline statistical fallacies in the foundational premise of mass screening and argue that the model iBorderCtrl provides for deception detection is very unlikely to work in practice. By interrogating actual systems in this way, we argue, we can begin to question the very premise of developing data-driven systems, and emotional AI and deception detection in particular. This pushes back on the assumption that such systems fulfil the tasks they claim to perform, and asks instead what function such projects carry out in the creation of subjects and the management of populations. This function, we argue, is not merely technical but distinctly political, and forms part of a mode of governance that increasingly shapes life opportunities and fundamental rights.
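The statistical concern with mass screening can be illustrated with a short Bayes' rule calculation. The sketch below is not taken from the paper: the prevalence, sensitivity and specificity values are purely hypothetical placeholders, chosen only to show how a low base rate of deceptive travellers drives down the probability that a flagged traveller is actually deceptive.

```python
def positive_predictive_value(prevalence, sensitivity, specificity):
    """Return P(deceptive | flagged) via Bayes' rule.

    prevalence:  assumed share of travellers who are deceptive
    sensitivity: assumed P(flagged | deceptive)
    specificity: assumed P(not flagged | truthful)
    """
    true_positives = prevalence * sensitivity
    false_positives = (1 - prevalence) * (1 - specificity)
    return true_positives / (true_positives + false_positives)


# Hypothetical inputs, NOT figures from the paper or from iBorderCtrl:
# 1 deceptive traveller per 1,000, and a detector that is right
# 75% of the time for both deceptive and truthful travellers.
ppv = positive_predictive_value(
    prevalence=0.001,
    sensitivity=0.75,
    specificity=0.75,
)

print(f"Share of flagged travellers who are deceptive: {ppv:.2%}")
```

Under these assumed inputs, well under 1% of flagged travellers would actually be deceptive, because false positives among the large truthful majority swamp the true positives. Different assumptions change the figure, but the effect persists whenever the base rate is low.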
