Liel Leman

Uncertainty Estimation based on Geometric Separation

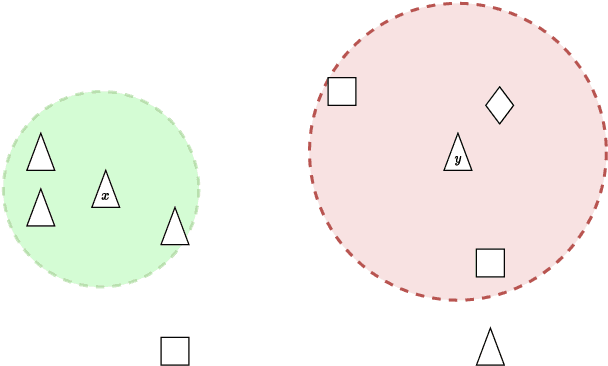

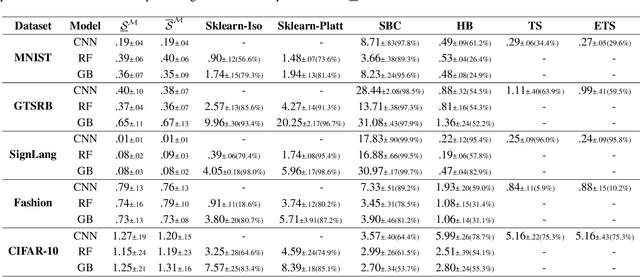

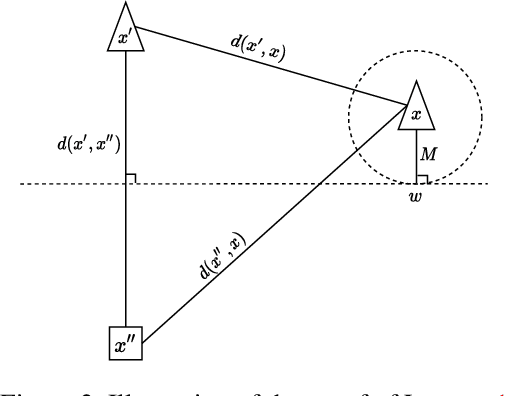

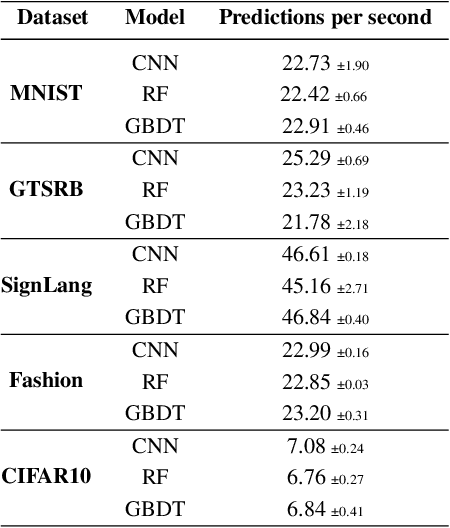

Jan 11, 2023Abstract:In machine learning, accurately predicting the probability that a specific input is correct is crucial for risk management. This process, known as uncertainty (or confidence) estimation, is particularly important in mission-critical applications such as autonomous driving. In this work, we put forward a novel geometric-based approach for improving uncertainty estimations in machine learning models. Our approach involves using the geometric distance of the current input from existing training inputs as a signal for estimating uncertainty, and then calibrating this signal using standard post-hoc techniques. We demonstrate that our method leads to more accurate uncertainty estimations than recently proposed approaches through extensive evaluation on a variety of datasets and models. Additionally, we optimize our approach so that it can be implemented on large datasets in near real-time applications, making it suitable for time-sensitive scenarios.

A Geometric Method for Improved Uncertainty Estimation in Real-time

Jun 23, 2022

Abstract:Machine learning classifiers are probabilistic in nature, and thus inevitably involve uncertainty. Predicting the probability of a specific input to be correct is called uncertainty (or confidence) estimation and is crucial for risk management. Post-hoc model calibrations can improve models' uncertainty estimations without the need for retraining, and without changing the model. Our work puts forward a geometric-based approach for uncertainty estimation. Roughly speaking, we use the geometric distance of the current input from the existing training inputs as a signal for estimating uncertainty and then calibrate that signal (instead of the model's estimation) using standard post-hoc calibration techniques. We show that our method yields better uncertainty estimations than recently proposed approaches by extensively evaluating multiple datasets and models. In addition, we also demonstrate the possibility of performing our approach in near real-time applications. Our code is available at our Github https://github.com/NoSleepDeveloper/Geometric-Calibrator.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge