Lawrence Wai

AGenT Zero: Zero-shot Automatic Multiple-Choice Question Generation for Skill Assessments

Dec 18, 2020

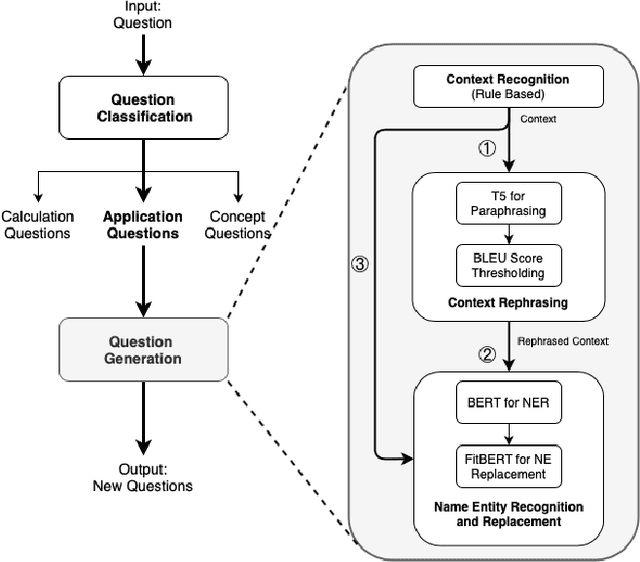

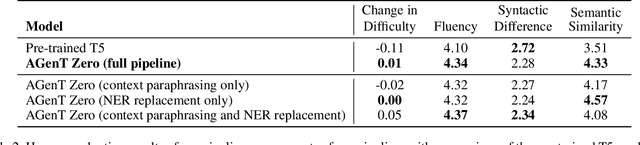

Abstract: Multiple-choice questions (MCQs) offer the most promising avenue for skill evaluation in the era of virtual education and job recruiting, where traditional performance-based alternatives such as projects and essays have become less viable and grading resources are constrained. Automated generation of MCQs would allow assessments to be created at scale. Recent advances in natural language processing have given rise to many complex question generation methods. However, the few methods that produce deployable results in specific domains require large amounts of domain-specific training data, which can be very costly to acquire. Our work provides an initial foray into MCQ generation under high data-acquisition cost scenarios by strategically emphasizing paraphrasing of the question context (as compared to the task). Our pipeline, which we call AGenT Zero, maintains semantic similarity between the question-answer pairs, consists only of pre-trained models, and requires no fine-tuning, minimizing data acquisition costs for question generation. AGenT Zero outperforms other pre-trained methods in fluency and semantic similarity. Additionally, with some small changes, our assessment pipeline can be generalized to a broader question and answer space, including short-answer or fill-in-the-blank questions.
