Lauri Eklund

Cell Tracking via Proposal Generation and Selection

May 09, 2017

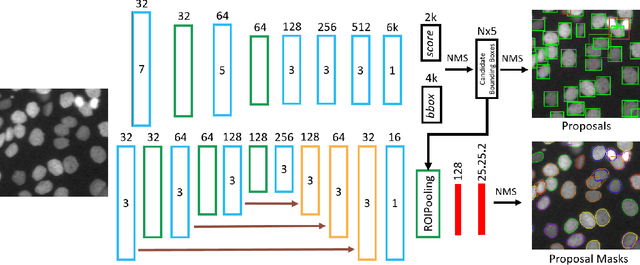

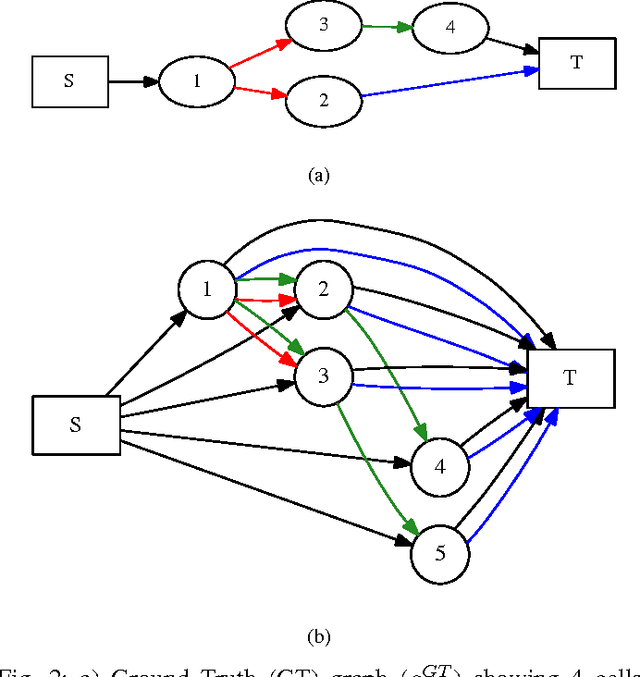

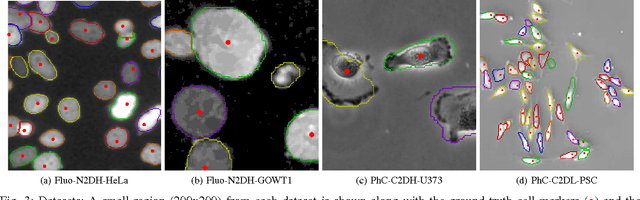

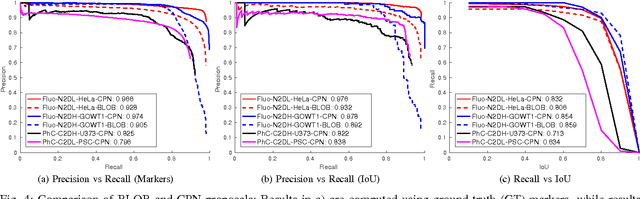

Abstract: Microscopy imaging plays a vital role in understanding many biological processes in development and disease. Recent advances in microscope automation and in methods and markers for live-cell imaging have led to rapid growth in the amount of image data being captured. Automated cell tracking is essential for extracting useful insights from these sequences efficiently and reliably. The problem is challenging because the appearance and shape of cells vary widely with many factors, including imaging methodology, biological characteristics of the cells, cell matrix composition, and labeling methodology. Cell tracking methods often require a sequence-specific segmentation method and manual tuning of many tracking parameters, which limits their applicability to sequences other than those they were designed for. In this paper, we propose 1) a deep-learning-based cell proposal method, which proposes candidate cells along with their scores, and 2) a cell tracking method, which links proposals in adjacent frames in a graphical model using edges that represent different cellular events, and which poses joint cell detection and tracking as the selection of a subset of cell and edge proposals. Our method is completely automated and, given enough training data, can be applied to a wide variety of microscopy sequences. We evaluate our method on multiple fluorescence and phase-contrast microscopy sequences from the ISBI Cell Tracking Challenge, containing cells of various shapes and appearances, and show that it outperforms existing cell tracking methods. Code is available at: https://github.com/SaadUllahAkram/CellTracker
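To make the idea of "selecting a subset of cell and edge proposals" concrete, here is a minimal toy sketch in Python. It is not the authors' implementation: the proposal names, scores, conflict pairs, and the `select` function are all hypothetical, only a single "move" edge type is modeled, and an exhaustive search stands in for whatever solver the paper actually uses. It assumes that overlapping proposals cannot both be selected, that an edge can be selected only if both of its endpoints are, and that each selected cell has at most one outgoing and one incoming link.

```python
from itertools import combinations


def powerset(items):
    """Yield every subset of items as a tuple."""
    items = list(items)
    for r in range(len(items) + 1):
        yield from combinations(items, r)


def select(proposals, conflicts, edges):
    """Exhaustively pick the best-scoring consistent set of cells and links.

    proposals: dict mapping proposal id -> score (hypothetical values)
    conflicts: pairs of proposal ids that overlap and cannot both be kept
    edges: dict mapping (source id, target id) -> link score between
           proposals in adjacent frames
    """
    best_score, best = float("-inf"), (frozenset(), frozenset())
    for cells in powerset(proposals):
        chosen = set(cells)
        # Overlapping proposals are mutually exclusive.
        if any(a in chosen and b in chosen for a, b in conflicts):
            continue
        # An edge is usable only if both of its endpoints were selected.
        usable = [e for e in edges if e[0] in chosen and e[1] in chosen]
        for links in powerset(usable):
            sources = [s for s, _ in links]
            targets = [t for _, t in links]
            # Toy constraint: at most one outgoing and one incoming link.
            if len(set(sources)) < len(sources) or len(set(targets)) < len(targets):
                continue
            score = sum(proposals[c] for c in chosen) + sum(edges[e] for e in links)
            if score > best_score:
                best_score, best = score, (frozenset(chosen), frozenset(links))
    return best_score, best


# Hypothetical data: "a" and "b" are overlapping proposals in frame 0,
# "c" and "d" are proposals in frame 1.
proposals = {"a": 2.0, "b": 1.0, "c": 1.5, "d": -0.5}
conflicts = {("a", "b")}
edges = {("a", "c"): 1.0, ("b", "c"): 0.8, ("a", "d"): 0.2, ("b", "d"): 0.1}

score, (cells, links) = select(proposals, conflicts, edges)
print(score, sorted(cells), sorted(links))
```

On this toy input the search keeps proposals `a` and `c` and the link between them, and drops both the overlapping proposal `b` and the low-scoring proposal `d`. Exhaustive search is exponential in the number of proposals, so it is useful only for illustrating the objective and constraints, not for real sequences.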
