Laurentiu Piciu

SOLIS -- The MLOps journey from data acquisition to actionable insights

Dec 22, 2021

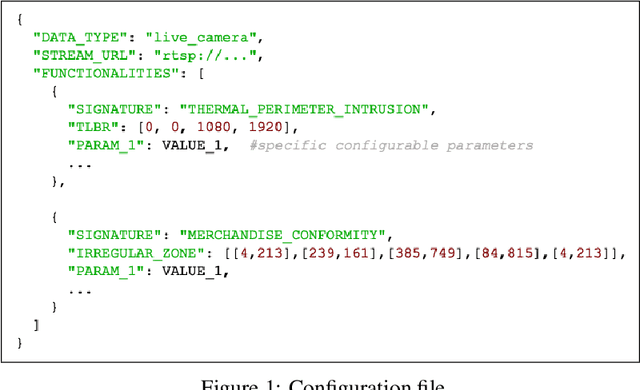

Abstract: Machine Learning operations is unarguably a very important topic, and lately one of the hottest, in Artificial Intelligence. Defining clear hypotheses for real-life problems that machine learning models can address, collecting and curating large amounts of data for model training and validation, searching for and optimizing a model architecture, and finally presenting the results fits the scenario of Data Science experiments very well. This approach, however, does not supply the procedures and pipelines needed to deploy machine learning capabilities in real production-grade systems. Automating live configuration mechanisms, adapting on the fly to live or offline data capture and consumption, serving multiple models in parallel on either edge or cloud architectures, addressing specific limitations of GPU memory or compute power, post-processing inference or prediction results, and serving those results either as APIs or over IoT-based communication stacks in the same end-to-end pipeline are the real challenges that we try to address in this paper. We present a unified deployment pipeline and freedom-to-operate approach that supports all of the above requirements while using basic cross-platform tensor framework and script language engines.

ProVe -- Self-supervised pipeline for automated product replacement and cold-starting based on neural language models

Jun 26, 2020

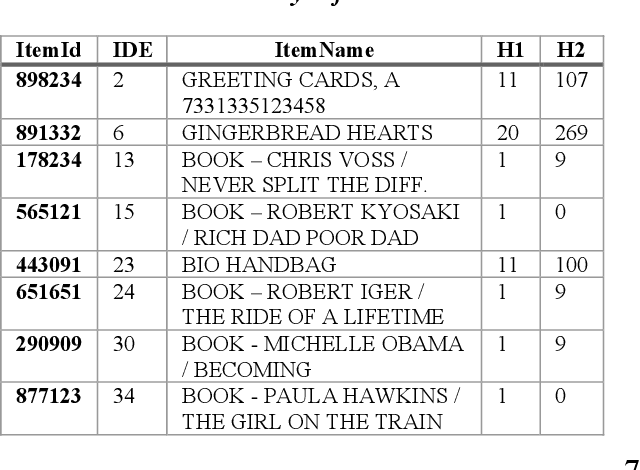

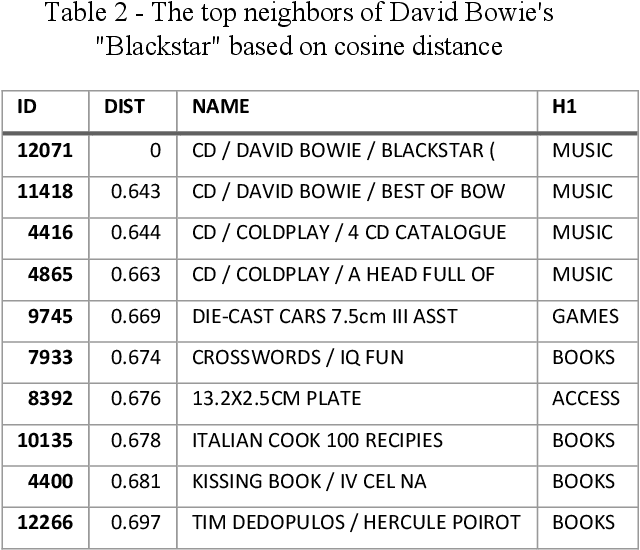

Abstract: In retail vertical industries, businesses must deal with the human limitation in quickly understanding and adapting to new purchasing behaviors. Moreover, retail businesses need to overcome the human limitation in properly managing a massive selection of products, brands and categories. These limitations lead to deficiencies from both a commercial perspective (e.g. loss of sales, decrease in customer satisfaction) and an operational perspective (e.g. out-of-stock, over-stock). In this paper, we propose a pipeline approach based on Natural Language Understanding for recommending the most suitable replacements for products that are out of stock. Moreover, we propose a solution for managing products that were newly introduced in a retailer's portfolio and have almost no transactional history. This solution helps businesses automatically assign new products to the right category, recommend complementary products for cross-sell from day 1, and perform sales predictions even with almost no transactional history. Finally, the vector space model that results from applying the pipeline presented in this paper is used directly as semantic information in deep learning-based demand forecasting solutions, leading to more accurate predictions. The whole research and experimentation process has been done using real-life private transactional data; however, the source code is available at https://github.com/Lummetry/ProVe
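The replacement step described in this abstract can be illustrated with a minimal sketch. It assumes that each product already has an embedding vector in the learned vector space and that cosine similarity is used to rank candidates; both the similarity measure and every name below (`cosine`, `recommend_replacements`, the toy product identifiers and vectors) are hypothetical illustrations, not taken from the paper or its repository.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def recommend_replacements(target, embeddings, in_stock, top_k=3):
    """Rank in-stock products by similarity to the out-of-stock target.

    Assumption: `embeddings` maps product id -> vector; the ranking rule
    (cosine similarity) is illustrative only.
    """
    target_vec = embeddings[target]
    scored = [
        (cosine(target_vec, embeddings[p]), p)
        for p in in_stock
        if p != target and p in embeddings
    ]
    # Highest similarity first; ties broken by product id for determinism.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [p for _, p in scored[:top_k]]


# Toy, hand-made embeddings (hypothetical values, not real data).
EMBEDDINGS = {
    "cola_330ml": [0.9, 0.1, 0.0],
    "cola_zero_330ml": [0.8, 0.2, 0.1],
    "orange_juice_1l": [0.1, 0.9, 0.2],
    "dish_soap": [0.0, 0.1, 0.9],
}

result = recommend_replacements(
    "cola_330ml",
    EMBEDDINGS,
    in_stock={"cola_zero_330ml", "orange_juice_1l", "dish_soap"},
    top_k=2,
)
print(result)  # ['cola_zero_330ml', 'orange_juice_1l']
```

Under the same assumption, a newly introduced product could be handled in a similar way: once it has an embedding, it can be compared against existing products even without any transactional history.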

CloudifierNet -- Deep Vision Models for Artificial Image Processing

Nov 04, 2019

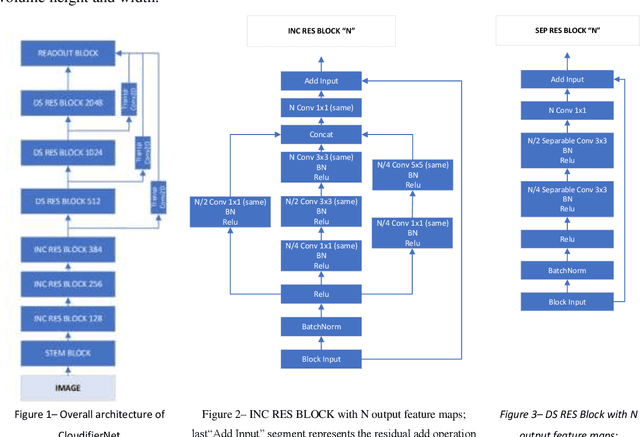

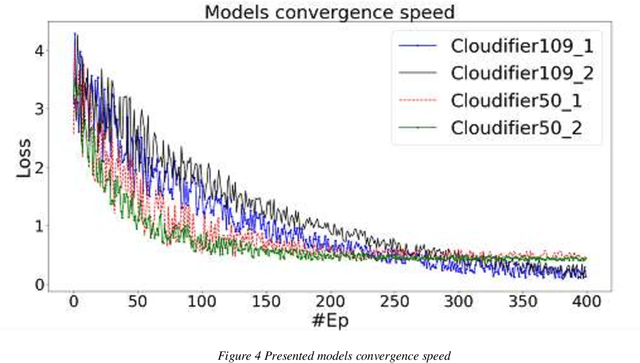

Abstract: Today, it is increasingly necessary for most applications and documents developed in previous or current technologies to be accessible online on cloud-based infrastructures. That is why the migration of legacy systems, including their hosts of documents, to new technologies and online infrastructures using modern Artificial Intelligence techniques is absolutely necessary. With the advancement of Artificial Intelligence and Deep Learning and their multitude of applications, a new area of research is emerging: automated systems development and maintenance. The underlying objective of the work that led to this paper is to research and develop truly intelligent systems able to analyze user interfaces from various sources and generate real and usable inferences ranging from architecture analysis to actual code generation. One key element of such systems is artificial scene detection and analysis based on deep learning computer vision systems. Computer vision models, and particularly deep directed acyclic graphs based on convolutional modules, are generally constructed and trained on natural image datasets. As a result, during training the models develop natural image feature detectors, apart from the base graph modules that learn basic primitive features. In this paper, we present the base principles of a deep neural pipeline for computer vision applied to artificial scenes (scenes generated by user interfaces or similar). Finally, we present conclusions based on experimental development and benchmarking against state-of-the-art deep vision models implemented with transfer learning.

Advanced Customer Activity Prediction based on Deep Hierarchic Encoder-Decoders

May 16, 2019

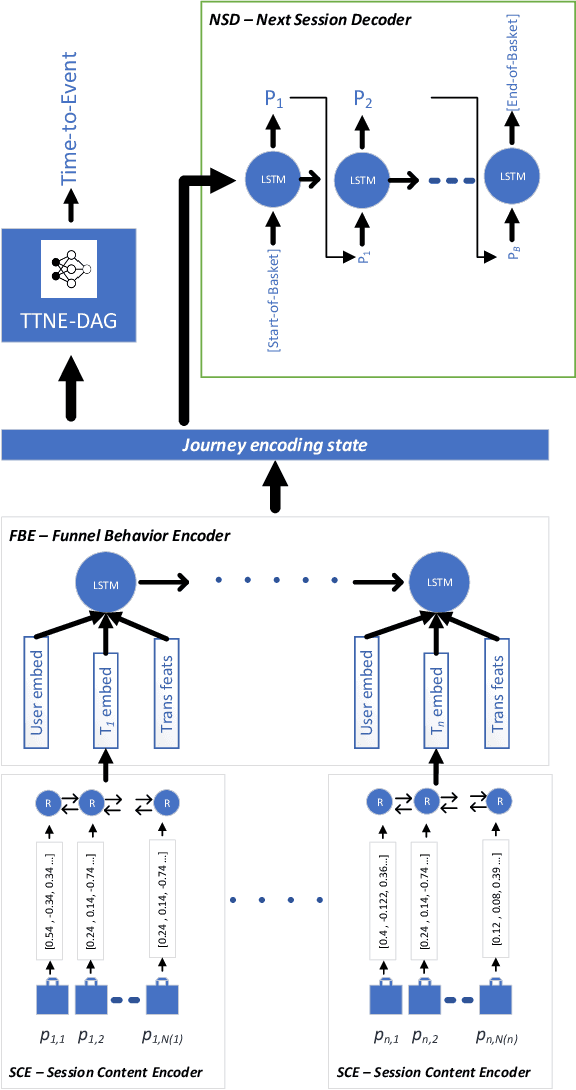

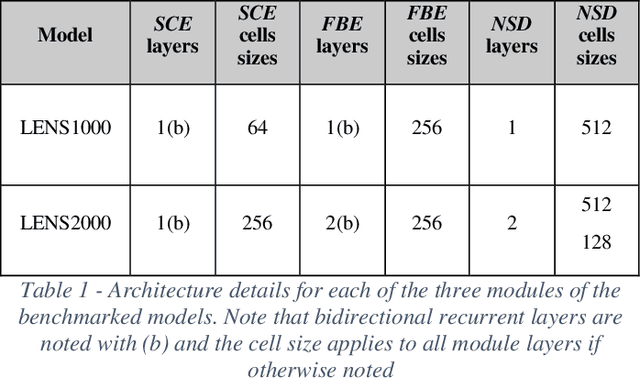

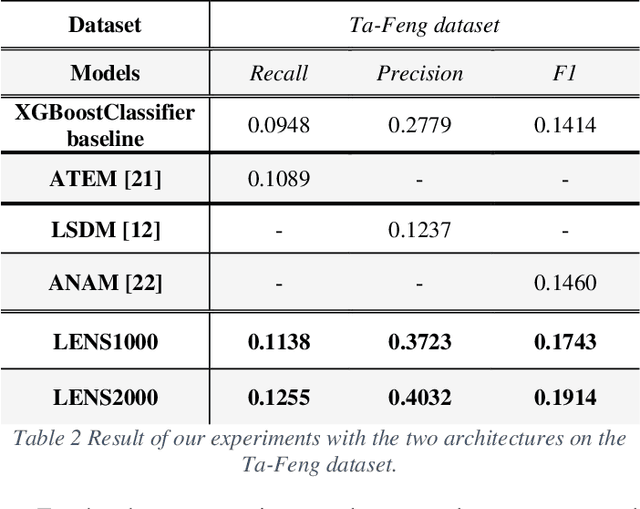

Abstract: Product recommender systems and customer profiling techniques have always been a priority in online retail. Recent advances in machine learning research, together with the wide availability of massively parallel numerical computing, have enabled various approaches and directions for advancing recommender systems. It is worth mentioning that in recent years many traditional "offline" retail businesses have been gearing more and more towards employing inferential and even predictive analytics, both for stock-related problems such as predictive replenishment and to enrich the customer interaction experience. One of the most important areas of recommender systems research and development is that of Deep Learning based models, which employ representational learning to model consumer behavioral patterns. The current state of the art in Deep Learning based recommender systems uses multiple approaches, ranging from already classical methods such as those based on learning product representation vectors, to recurrent analysis of customer transactional time-series, and up to generative models based on adversarial training. Each of these methods has multiple advantages and inherent weaknesses, such as the inability to understand the actual user journey, or the ability to propose only a single product recommendation or top-k product recommendations without predicting the actual next-best-offer. In our work we present a new architectural approach that applies a state-of-the-art hierarchical multi-module encoder-decoder architecture to solve several issues of current state-of-the-art recommender systems. Our approach also produces by-products such as need-based product segmentation and customer behavioral segmentation, all in an end-to-end trainable approach.

Deep recommender engine based on efficient product embeddings neural pipeline

Mar 24, 2019

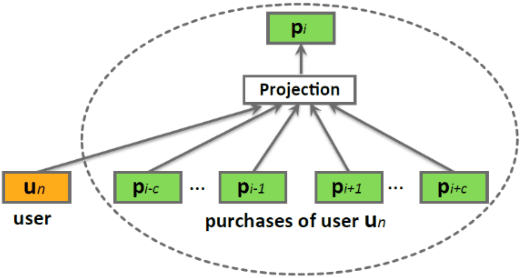

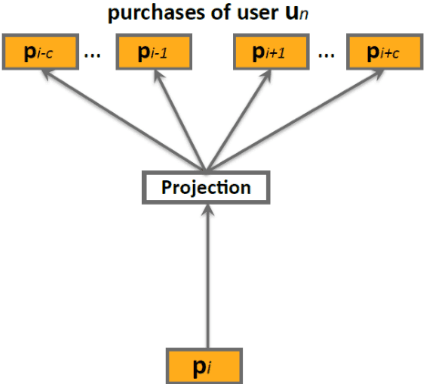

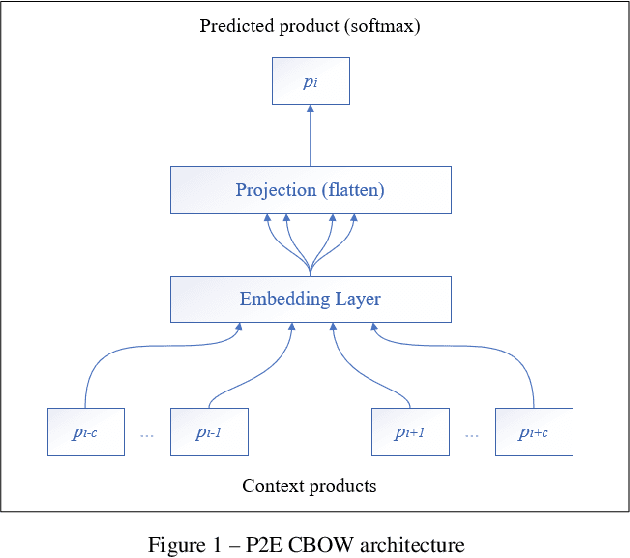

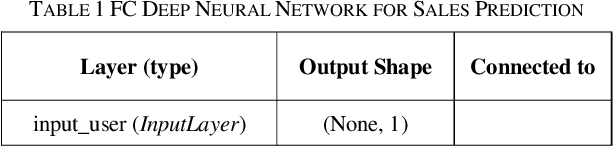

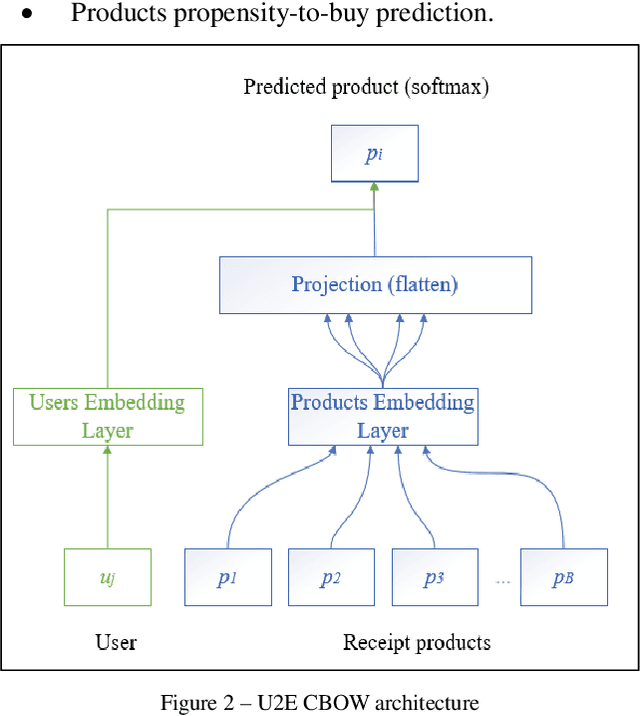

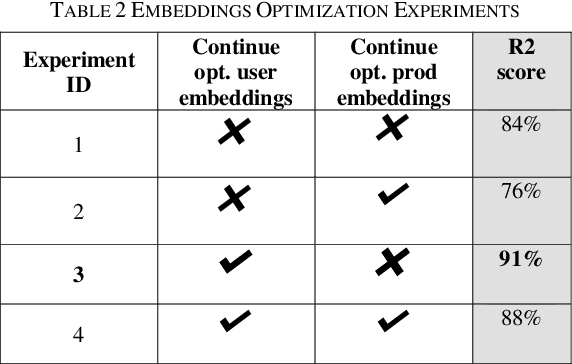

Abstract: Predictive analytics systems are currently one of the most important areas of research and development within the Artificial Intelligence domain, and particularly in Machine Learning. One of the "holy grails" of predictive analytics is the research and development of the "perfect" recommendation system. In our paper we propose an advanced pipeline model for the multi-task objective of determining product complementarity, similarity and sales prediction using deep neural models applied to big-data sequential transaction systems. Our highly parallelized hybrid pipeline consists of both unsupervised and supervised models, used for generating semantic product embeddings and for predicting sales, respectively. Our experimentation and benchmarking have been done using a very large Big Data stream from a pharma-industry retailer.
