Laurent Rodriguez

Cortex Inspired Learning to Recover Damaged Signal Modality with ReD-SOM Model

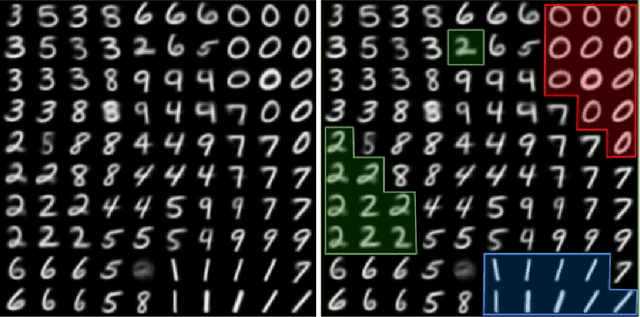

Jul 27, 2023

Abstract: Recent progress in AI and the cognitive sciences opens up challenges that were previously inaccessible to study. One such task is recovering lost data of one modality by using data from another. A similar effect, the McGurk effect, has been found in the functioning of the human brain: one modality of information interferes with another, changing its perception. In this paper, we propose a way to simulate such an effect and use it to reconstruct lost data modalities by combining Variational Auto-Encoders, Self-Organizing Maps, and Hebbian connections in a unified ReD-SOM (Reentering Deep Self-Organizing Map) model. We are inspired by the human ability to draw on different brain areas, associated with different modalities, when information is lacking in one modality. This approach not only improves the analysis of ambiguous data but also restores the intended signal. The results obtained on a multimodal dataset demonstrate improved signal reconstruction quality. The effect is notable both visually and quantitatively, especially when the signal is significantly distorted.

A unified software/hardware scalable architecture for brain-inspired computing based on self-organizing neural models

Jan 06, 2022

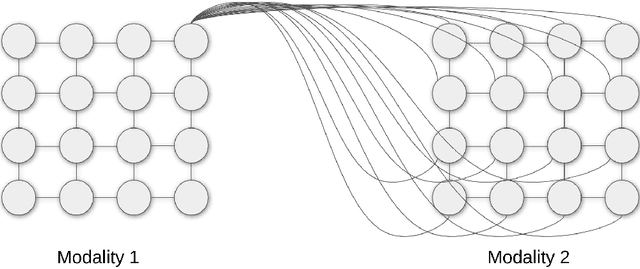

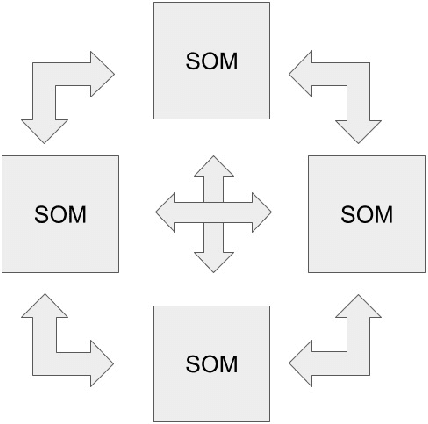

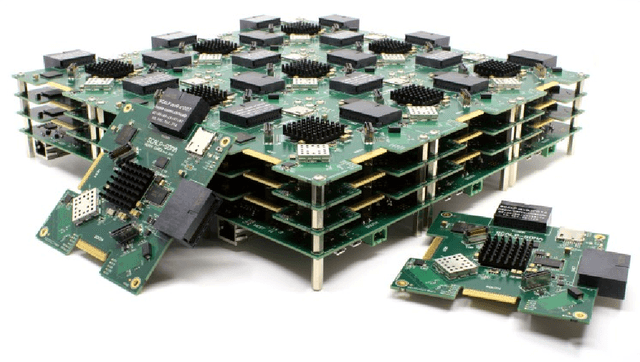

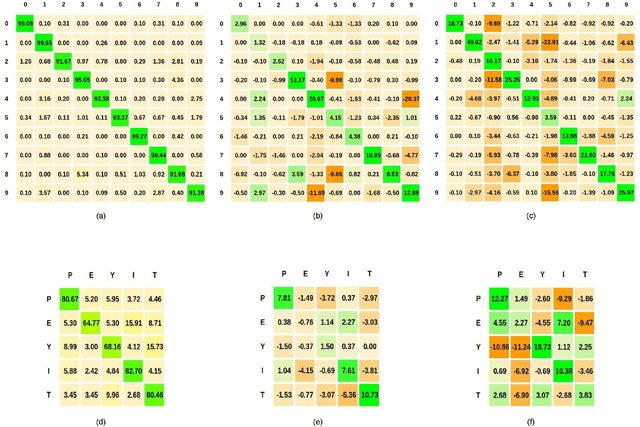

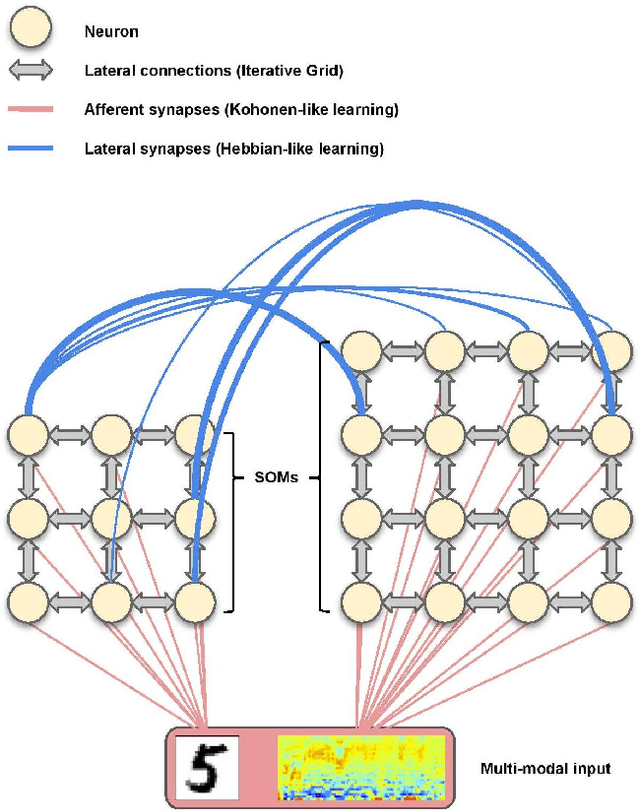

Abstract: The field of artificial intelligence has advanced significantly over the past decades, inspired by discoveries in biology and neuroscience. This work is inspired by the self-organization of cortical areas in the human brain through both afferent and lateral/internal connections. We develop an original brain-inspired neural model, the Reentrant SOM (ReSOM), which associates Self-Organizing Maps (SOM) with Hebbian learning. The framework is applied to multimodal classification problems. Compared to existing methods based on unsupervised learning with post-labeling, the model improves on state-of-the-art results. This work also demonstrates the distributed and scalable nature of the model through both simulation results and hardware execution on a dedicated FPGA-based platform named SCALP (Self-configurable 3D Cellular Adaptive Platform). SCALP boards can be interconnected in a modular way to support the structure of the neural model. Such a unified software and hardware approach enables the processing to be scaled and allows information from several modalities to be merged dynamically. The deployment on hardware boards provides performance results for parallel execution on several devices, with communication between boards over dedicated serial links. The proposed unified architecture, composed of the ReSOM model and the SCALP hardware platform, demonstrates a significant increase in accuracy thanks to multimodal association, and a good trade-off between latency and power consumption compared to a centralized GPU implementation.

Improving Self-Organizing Maps with Unsupervised Feature Extraction

Sep 04, 2020

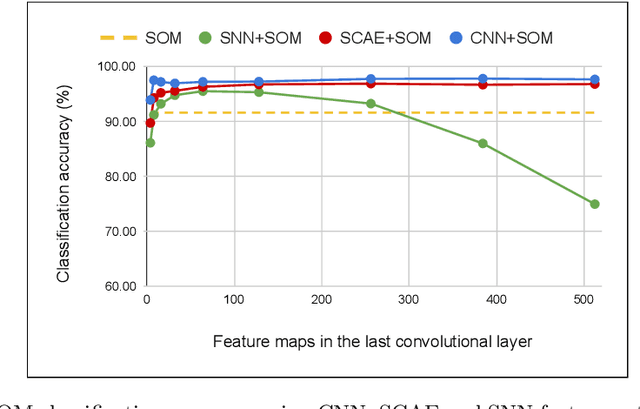

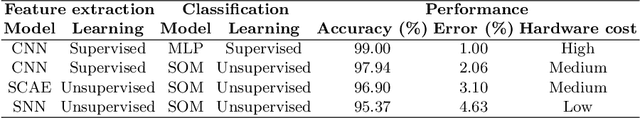

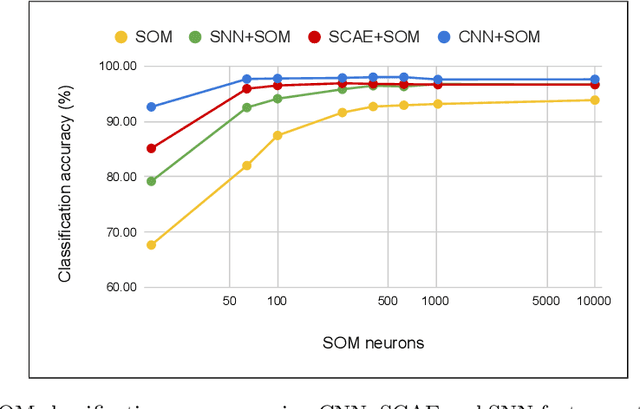

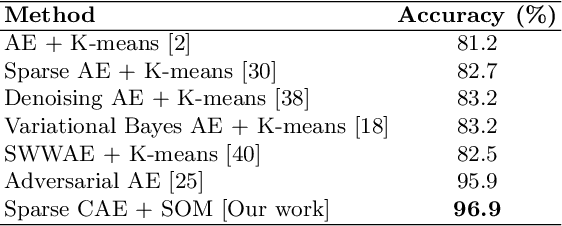

Abstract: The Self-Organizing Map (SOM) is a brain-inspired neural model that is very promising for unsupervised learning, especially in embedded applications. However, it is unable to learn efficient prototypes when dealing with complex datasets. In this work, we propose to improve SOM performance by using extracted features instead of raw data. We conduct a comparative study of SOM classification accuracy with unsupervised feature extraction using two different approaches: a machine learning approach with Sparse Convolutional Auto-Encoders using gradient-based learning, and a neuroscience approach with Spiking Neural Networks using Spike-Timing-Dependent Plasticity learning. The SOM is trained on the extracted features, then very few labeled samples are used to label the neurons with their corresponding class. We investigate the impact of the feature maps, the SOM size, and the labeled subset size on the classification accuracy using the different feature extraction methods. We improve the SOM classification by +6.09% and reach state-of-the-art performance on unsupervised image classification.
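The train-then-label procedure described in this abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names (`train_som`, `label_neurons`, `predict`), the Gaussian neighborhood and activation, the linear decay schedules, and all hyperparameters are assumptions chosen for illustration, and the feature extraction stage is omitted (the SOM here takes feature vectors as given).

```python
import numpy as np

def train_som(data, grid=(3, 3), epochs=20, lr0=0.5, sigma0=1.5, seed=0):
    """Train a small SOM without labels; returns prototypes (n_neurons, n_features)."""
    rng = np.random.default_rng(seed)
    n_neurons = grid[0] * grid[1]
    # Grid coordinates of each neuron, used by the neighborhood function.
    coords = np.array([(i, j) for i in range(grid[0]) for j in range(grid[1])],
                      dtype=float)
    # Assumption: prototypes are initialized from randomly drawn samples.
    weights = data[rng.integers(0, len(data), n_neurons)].astype(float).copy()
    n_steps = epochs * len(data)
    step = 0
    for _ in range(epochs):
        for x in data[rng.permutation(len(data))]:
            frac = step / n_steps
            lr = lr0 * (1.0 - frac)                  # linearly decaying learning rate
            sigma = sigma0 * (1.0 - frac) + 0.1      # shrinking neighborhood width
            bmu = np.argmin(np.linalg.norm(weights - x, axis=1))  # best matching unit
            d2 = np.sum((coords - coords[bmu]) ** 2, axis=1)
            h = np.exp(-d2 / (2.0 * sigma ** 2))     # Gaussian neighborhood on the grid
            weights += lr * h[:, None] * (x - weights)
            step += 1
    return weights

def label_neurons(weights, x_labeled, y_labeled, n_classes):
    """Post-labeling: give each neuron the class whose labeled samples activate it most."""
    dists = np.linalg.norm(x_labeled[:, None, :] - weights[None, :, :], axis=2)
    act = np.exp(-dists ** 2)                        # assumed Gaussian activation
    scores = np.zeros((len(weights), n_classes))
    for c in range(n_classes):
        mask = y_labeled == c
        if mask.any():
            scores[:, c] = act[mask].mean(axis=0)    # mean activation per class
    return scores.argmax(axis=1)

def predict(weights, neuron_labels, x):
    """Classify each sample with the label of its best matching unit."""
    dists = np.linalg.norm(x[:, None, :] - weights[None, :, :], axis=2)
    return neuron_labels[dists.argmin(axis=1)]
```

In use, `train_som` would be called on all (unlabeled) feature vectors, `label_neurons` on a small labeled subset, and `predict` on test features. Only the labeling step sees any labels, which is what keeps the learning itself unsupervised.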

Brain-inspired self-organization with cellular neuromorphic computing for multimodal unsupervised learning

Apr 11, 2020

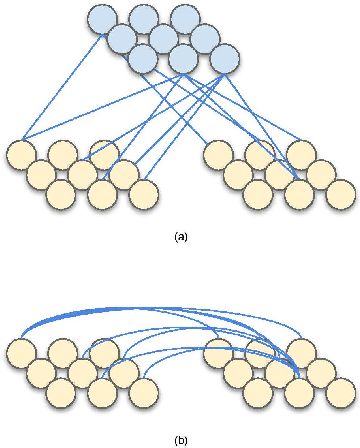

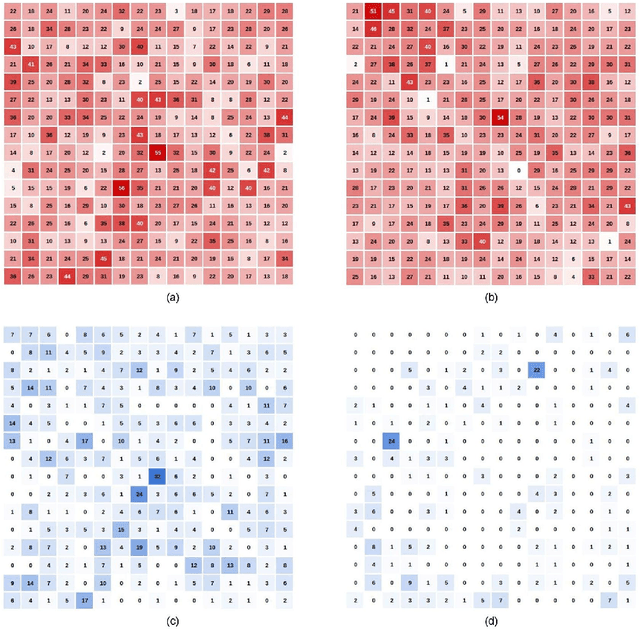

Abstract: Cortical plasticity is one of the main features that enable us to learn and adapt in our environment. Indeed, the cerebral cortex is able to self-organize through two distinct forms of plasticity: structural plasticity, which creates (sprouting) or cuts (pruning) synaptic connections between neurons, and synaptic plasticity, which modifies the strength of synaptic connections. These mechanisms are very likely at the basis of an extremely interesting characteristic of human brain development: multimodal association. [...] To model such behavior, Edelman and Damasio proposed the Reentry and the Convergence Divergence Zone frameworks, respectively, in which bi-directional neural communication can lead to both multimodal fusion (convergence) and inter-modal activation (divergence). [...] In this paper, we build a brain-inspired neural system based on the Reentry principles, using Self-Organizing Maps and Hebbian-like learning. We propose and compare different computational methods for unsupervised learning and inference, then quantify the gain of both convergence and divergence mechanisms in a multimodal classification task. The divergence mechanism is used to label one modality based on the other, while the convergence mechanism is used to improve the overall accuracy of the system. We perform our experiments on a constructed written/spoken digits database and a DVS/EMG hand gestures database. Finally, we implement our system on the Iterative Grid, a cellular neuromorphic architecture that enables distributed computing with local connectivity. We show the gain of the so-called hardware plasticity induced by our model, where the system's topology is not fixed by the user but learned through the system's experience via self-organization.

* Preprint, 24 pages, 11 figures, 4 tables
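The divergence mechanism mentioned in the abstract above (labeling one modality from the other through learned inter-map connections) can be sketched as follows. This is a simplified illustration rather than the paper's method: counting co-activations of winner neurons stands in for the Hebbian-like learning rule, and the names `hebbian_links` and `diverge_labels` are hypothetical.

```python
import numpy as np

def hebbian_links(bmus_a, bmus_b, n_a, n_b):
    """Build inter-map links by counting co-activations of winner neurons.

    bmus_a[k] and bmus_b[k] are the best matching units of maps A and B
    for the k-th paired (multimodal) sample.
    """
    w = np.zeros((n_a, n_b))
    np.add.at(w, (bmus_a, bmus_b), 1.0)      # "fire together, wire together"
    # Normalize per map-B neuron so link strengths are comparable across columns.
    col = w.sum(axis=0, keepdims=True)
    return np.divide(w, col, out=np.zeros_like(w), where=col > 0)

def diverge_labels(links, labels_a, n_classes):
    """Label map-B neurons from already-labeled map-A neurons via the links."""
    votes = np.zeros((links.shape[1], n_classes))
    for c in range(n_classes):
        # Total link strength arriving from map-A neurons of class c.
        votes[:, c] = links[labels_a == c].sum(axis=0)
    return votes.argmax(axis=1)

# Toy example: 3 neurons in map A (labeled), 2 neurons in map B (unlabeled).
bmus_a = np.array([0, 0, 1, 1, 2])
bmus_b = np.array([1, 1, 0, 0, 0])
labels_a = np.array([0, 1, 1])
links = hebbian_links(bmus_a, bmus_b, n_a=3, n_b=2)
labels_b = diverge_labels(links, labels_a, n_classes=2)
```

In this toy case, neuron 0 of map B co-activates only with class-1 neurons of map A, and neuron 1 of map B only with the class-0 neuron, so the labels transfer without any labeled data in modality B.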
