Lars Rumberg

Two-Stream Aural-Visual Affect Analysis in the Wild

Mar 03, 2020

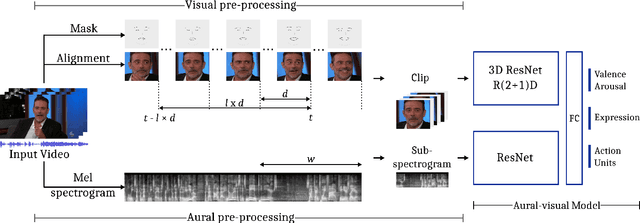

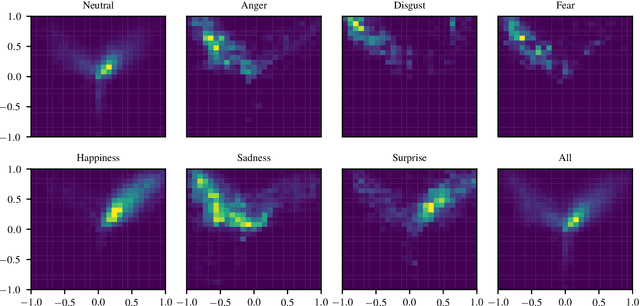

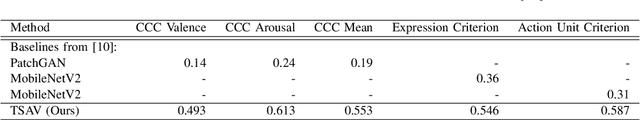

Abstract: Human affect recognition is an essential part of natural human-computer interaction. However, current methods are still in their infancy, especially for in-the-wild data. In this work, we introduce our submission to the Affective Behavior Analysis in-the-wild (ABAW) 2020 competition. We propose a two-stream aural-visual analysis model to recognize affective behavior from videos. Audio and image streams are first processed separately and fed into a convolutional neural network. Instead of applying recurrent architectures for temporal analysis, we use only temporal convolutions. Furthermore, the model is given access to additional features extracted during face alignment. At training time, we exploit correlations between different emotion representations to improve performance. Our model achieves promising results on the challenging Aff-Wild2 database.
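
As a rough illustration of the idea of replacing recurrence with temporal convolutions over two feature streams, the following NumPy sketch concatenates per-frame audio and visual features and slides a single convolution kernel along the time axis. It is not the authors' architecture: the feature sizes, the concatenation-based fusion, the kernel width, the number of outputs, and the helper names `fuse_streams` and `temporal_conv` are all assumptions made for this example.

```python
import numpy as np


def fuse_streams(audio_feats, visual_feats):
    """Concatenate per-frame features from both streams along the channel axis.

    Assumption: both streams are already aligned to the same number of frames T.
    """
    return np.concatenate([audio_feats, visual_feats], axis=1)


def temporal_conv(features, kernel):
    """'Valid' 1D convolution over time (no padding, stride 1).

    features: array of shape (T, C_in)
    kernel:   array of shape (K, C_in, C_out)
    returns:  array of shape (T - K + 1, C_out)
    """
    T, c_in = features.shape
    K, k_in, c_out = kernel.shape
    assert c_in == k_in, "channel mismatch between features and kernel"
    out = np.empty((T - K + 1, c_out))
    for t in range(T - K + 1):
        window = features[t:t + K]  # (K, C_in) slice of consecutive frames
        # Sum over the time and input-channel axes of the window.
        out[t] = np.tensordot(window, kernel, axes=([0, 1], [0, 1]))
    return out


# Hypothetical sizes, chosen only for the example.
rng = np.random.default_rng(0)
T = 16
audio = rng.normal(size=(T, 8))      # 8 audio features per frame
visual = rng.normal(size=(T, 12))    # 12 visual features per frame

fused = fuse_streams(audio, visual)  # shape (16, 20)
kernel = rng.normal(size=(3, 20, 7)) # width-3 kernel, 7 outputs per frame
scores = temporal_conv(fused, kernel)
print(scores.shape)                  # (14, 7)
```

Each output row depends only on a fixed window of neighbouring frames, so all time steps can be computed independently, whereas a recurrent layer must process frames sequentially.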
