Lanhang Zhai

Research on Mask Wearing Detection of Natural Population Based on Improved YOLOv4

Aug 24, 2022

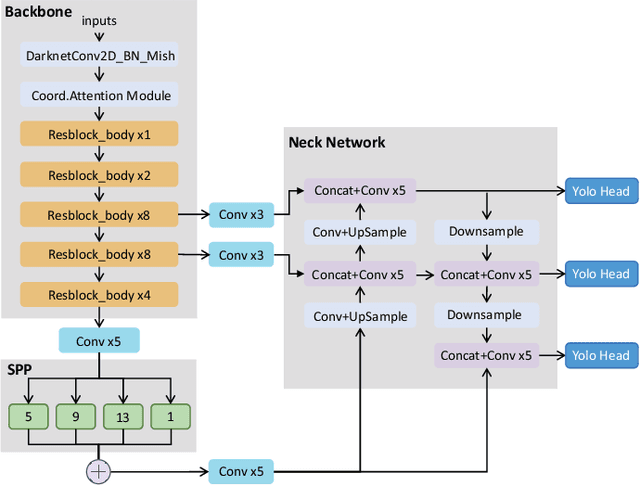

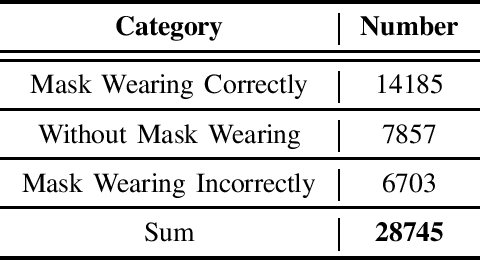

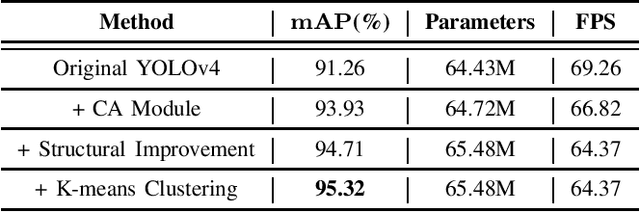

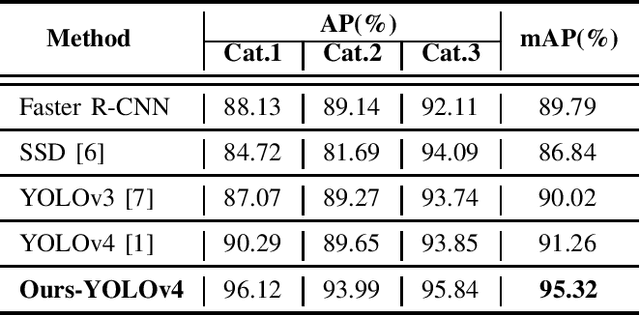

Abstract: The domestic COVID-19 epidemic has recently been serious, yet in some public places people either do not wear masks or wear them incorrectly, so staff must promptly remind and supervise them. Because this work is both important and labor-intensive, automated mask-wearing detection in public places is needed. This paper proposes a new mask-wearing detection method based on an improved YOLOv4. First, we add the Coordinate Attention module to the backbone to coordinate feature fusion and representation. Second, we make a series of structural improvements to the network to enhance model performance and robustness. Third, we use the K-means clustering algorithm to make the nine anchor boxes better suited to our NPMD dataset. Experimental results show that the improved YOLOv4 exceeds the baseline by 4.06% AP at a comparable speed of 64.37 FPS.
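The anchor-fitting step mentioned in the abstract can be illustrated with a small sketch. It is not the authors' code: it assumes the common YOLO-style recipe of clustering ground-truth box sizes with `1 - IoU` as the distance, and it runs on randomly generated box sizes rather than the NPMD dataset. The function names `iou_wh` and `kmeans_anchors` are hypothetical.

```python
import random


def iou_wh(box, anchor):
    """IoU of two (width, height) pairs, treated as boxes sharing a corner."""
    inter = min(box[0], anchor[0]) * min(box[1], anchor[1])
    union = box[0] * box[1] + anchor[0] * anchor[1] - inter
    return inter / union


def kmeans_anchors(boxes, k=9, iters=100, seed=0):
    """Cluster (width, height) pairs into k anchors; highest IoU = nearest."""
    rng = random.Random(seed)
    anchors = rng.sample(boxes, k)  # initialise from k randomly chosen boxes
    for _ in range(iters):
        # Assignment step: each box joins the anchor it overlaps most.
        clusters = [[] for _ in range(k)]
        for box in boxes:
            best = max(range(k), key=lambda j: iou_wh(box, anchors[j]))
            clusters[best].append(box)
        # Update step: move each anchor to the mean size of its cluster.
        new_anchors = []
        for j, members in enumerate(clusters):
            if members:
                w = sum(m[0] for m in members) / len(members)
                h = sum(m[1] for m in members) / len(members)
                new_anchors.append((w, h))
            else:
                new_anchors.append(anchors[j])  # empty cluster: keep old anchor
        if new_anchors == anchors:  # converged
            break
        anchors = new_anchors
    return sorted(anchors, key=lambda a: a[0] * a[1])  # small to large area


# Synthetic box sizes in pixels (an assumption standing in for real labels).
demo_rng = random.Random(42)
boxes = [(demo_rng.uniform(8, 320), demo_rng.uniform(8, 320)) for _ in range(500)]
anchors = kmeans_anchors(boxes, k=9)
for w, h in anchors:
    print(f"{w:.1f} x {h:.1f}")
```

Sorting the result by area makes it easy to split the nine anchors into three groups for the three detection scales. Some implementations update each anchor with the cluster median instead of the mean; the mean is used here only for simplicity.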

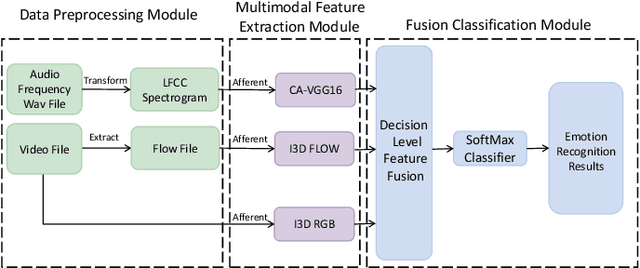

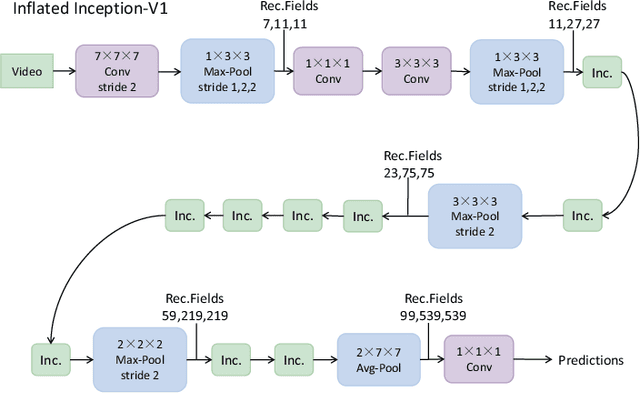

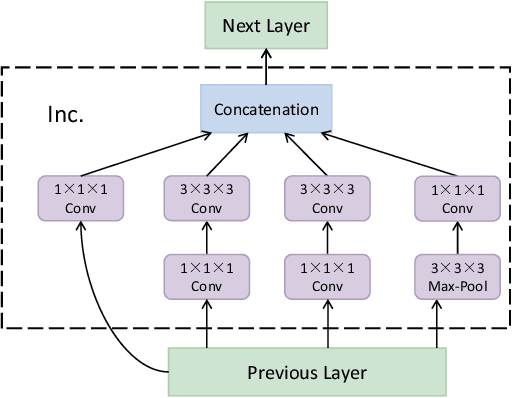

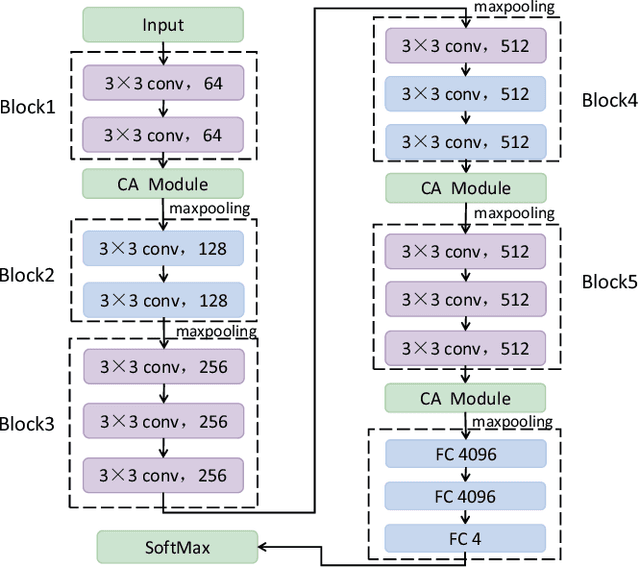

ICANet: A Method of Short Video Emotion Recognition Driven by Multimodal Data

Aug 24, 2022

Abstract: With the rapid development of artificial intelligence and short videos, emotion recognition in short videos has become one of the most important research topics in human-computer interaction. At present, most emotion recognition methods still rely on a single modality. However, in daily life people often disguise their real emotions, so the accuracy of single-modal emotion recognition is relatively low. Moreover, similar emotions are hard to distinguish. We therefore propose a new approach, ICANet, for multimodal short video emotion recognition that uses three modalities (audio, video and optical flow) to compensate for the limitations of a single modality and thereby improve accuracy. ICANet achieves an accuracy of 80.77% on the IEMOCAP benchmark, exceeding the SOTA methods by 15.89%.
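The abstract does not say how ICANet combines its three modalities, so the following is only a generic late-fusion sketch to illustrate why several modalities can outvote one misleading stream. The function names, the emotion labels, the logit values and the equal weights are all assumptions for illustration, not details from the paper.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def late_fusion(modality_logits, weights=None):
    """Weighted average of per-modality class probabilities."""
    names = list(modality_logits)
    if weights is None:
        weights = {n: 1.0 / len(names) for n in names}  # equal weights (assumed)
    num_classes = len(modality_logits[names[0]])
    fused = [0.0] * num_classes
    for n in names:
        probs = softmax(modality_logits[n])
        for c in range(num_classes):
            fused[c] += weights[n] * probs[c]
    return fused


EMOTIONS = ["angry", "happy", "neutral", "sad"]  # hypothetical label set

# Made-up scores: the face looks neutral, but voice and motion suggest anger.
logits = {
    "audio": [2.0, 0.1, 0.3, 0.2],
    "video": [0.2, 0.1, 1.5, 0.3],
    "optical_flow": [1.8, 0.2, 0.4, 0.1],
}
fused = late_fusion(logits)
prediction = EMOTIONS[max(range(len(fused)), key=fused.__getitem__)]
print(prediction)
```

In this toy input the video stream alone would predict "neutral", while the fused distribution favors "angry", which mirrors the disguised-emotion scenario the abstract describes.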
