Lalith Bharadwaj Baru

Harnessing Wavelet Transformations for Generalizable Deepfake Forgery Detection

Sep 26, 2024

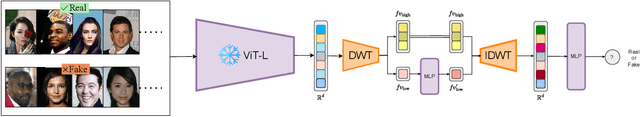

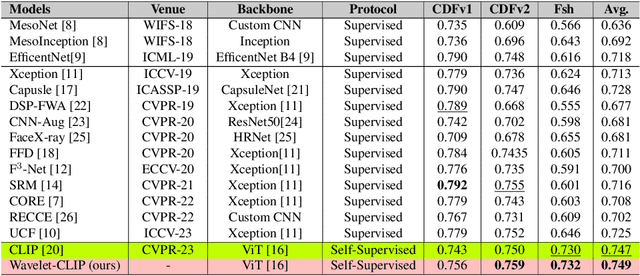

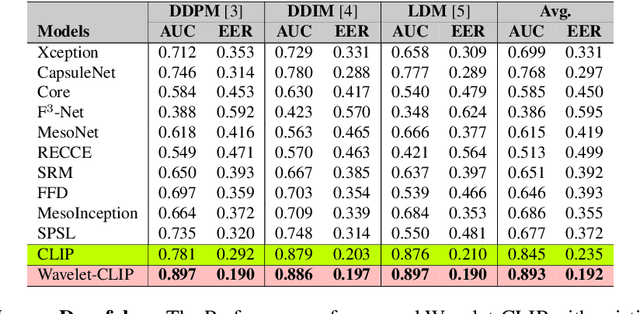

Abstract: The evolution of digital image manipulation, particularly with the advancement of deep generative models, significantly challenges existing deepfake detection methods, especially when the origin of the deepfake is obscure. To tackle the increasing complexity of these forgeries, we propose **Wavelet-CLIP**, a deepfake detection framework that integrates wavelet transforms with features derived from the ViT-L/14 architecture, pre-trained in the CLIP fashion. Wavelet-CLIP uses wavelet transforms to analyze both spatial and frequency features of images, enhancing the model's ability to detect sophisticated deepfakes. To verify the effectiveness of our approach, we conducted extensive evaluations against existing state-of-the-art methods for cross-dataset generalization and detection of unseen images generated by standard diffusion models. Our method achieves an average AUC of 0.749 for cross-dataset generalization and 0.893 for robustness against unseen deepfakes, outperforming all compared methods. The code is available at: https://github.com/lalithbharadwajbaru/Wavelet-CLIP
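The abstract does not say which wavelet is used or where in the pipeline it is applied. As a rough illustration of how a wavelet transform separates a signal into a low-frequency and a high-frequency part, here is a minimal single-level 1D Haar transform in NumPy. The function names `haar_dwt_1d` and `haar_idwt_1d` are hypothetical and are not taken from the Wavelet-CLIP repository.

```python
import numpy as np

def haar_dwt_1d(x):
    """Single-level Haar transform along the last axis (hypothetical helper).

    Returns (approx, detail): the low-frequency and high-frequency halves.
    """
    x = np.asarray(x, dtype=float)
    if x.shape[-1] % 2 != 0:
        raise ValueError("last axis must have even length")
    even, odd = x[..., 0::2], x[..., 1::2]
    approx = (even + odd) / np.sqrt(2.0)   # scaled pairwise sums
    detail = (even - odd) / np.sqrt(2.0)   # scaled pairwise differences
    return approx, detail

def haar_idwt_1d(approx, detail):
    """Invert haar_dwt_1d by interleaving the reconstructed samples."""
    approx = np.asarray(approx, dtype=float)
    detail = np.asarray(detail, dtype=float)
    out = np.empty(approx.shape[:-1] + (2 * approx.shape[-1],))
    out[..., 0::2] = (approx + detail) / np.sqrt(2.0)
    out[..., 1::2] = (approx - detail) / np.sqrt(2.0)
    return out
```

Because the Haar transform is orthonormal, it preserves signal energy and is exactly invertible, so a model can process the two bands separately and still recombine them without loss.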

HyperGALE: ASD Classification via Hypergraph Gated Attention with Learnable Hyperedges

Mar 21, 2024

Abstract: Autism Spectrum Disorder (ASD) is a neurodevelopmental condition characterized by varied social cognitive challenges and repetitive behavioral patterns. Identifying reliable brain imaging-based biomarkers for ASD has been a persistent challenge due to the spectrum's diverse symptomatology. Existing baselines in the field have made significant strides in this direction, yet there remains room for improvement in both performance and interpretability. We propose *HyperGALE*, which builds upon the hypergraph by incorporating learned hyperedges and gated attention mechanisms. This approach has led to substantial improvements in the model's ability to interpret complex brain graph data, offering deeper insights into ASD biomarker characterization. Evaluated on the extensive ABIDE II dataset, *HyperGALE* not only improves interpretability but also demonstrates statistically significant enhancements in key performance metrics compared to both previous baselines and the foundational hypergraph model. The advancement *HyperGALE* brings to ASD research highlights the potential of sophisticated graph-based techniques in neurodevelopmental studies. The source code and implementation instructions are available on GitHub: https://github.com/mehular0ra/HyperGALE.

BeSt-LeS: Benchmarking Stroke Lesion Segmentation using Deep Supervision

Oct 10, 2023

Abstract: Brain stroke has become a significant burden on global health, and remedies and prevention strategies are needed to overcome this challenge. Immediate identification of stroke and risk stratification are the primary tasks for clinicians. To aid expert clinicians, automated segmentation models are crucial. In this work, we use the publicly available ATLAS v2.0 dataset to benchmark various end-to-end supervised U-Net style models. Specifically, we benchmarked models on both 2D and 3D brain images and evaluated them using standard metrics. We achieved the highest Dice scores of 0.583 with the 2D transformer-based model and 0.504 with the 3D residual U-Net. We conducted the Wilcoxon test for the 3D models to assess the relationship between predicted and actual stroke volume. For reproducibility, the code and model weights are publicly available: https://github.com/prantik-pdeb/BeSt-LeS.
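For readers unfamiliar with the Dice score reported above, the following is a minimal sketch of how it is commonly computed for binary segmentation masks. The function name `dice_score` is hypothetical, and returning 1.0 when both masks are empty is an assumed convention; the benchmark's own evaluation code may handle that case differently.

```python
import numpy as np

def dice_score(pred, target):
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) for two binary masks."""
    pred = np.asarray(pred).astype(bool)
    target = np.asarray(target).astype(bool)
    denom = pred.sum() + target.sum()
    if denom == 0:
        # Assumed convention: two empty masks count as a perfect match.
        return 1.0
    intersection = np.logical_and(pred, target).sum()
    return 2.0 * intersection / denom
```

The same function works unchanged for 2D slices and 3D volumes, since it only counts overlapping voxels.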

Interpreting Bias in the Neural Networks: A Peek Into Representational Similarity

Nov 14, 2022

Abstract: Neural networks trained on standard image classification data sets are shown to be less resistant to data set bias. It is necessary to understand the behavior of objective functions that might yield superior performance on biased data. However, there is little research on the selection of the objective function and its representational structure when training on biased data sets. In this paper, we investigate the performance and internal representational structure of convolution-based neural networks (e.g., ResNets) trained on biased data using various objective functions. We specifically study similarities in representations, using Centered Kernel Alignment (CKA), for different objective functions (probabilistic and margin-based) and offer a comprehensive analysis of the chosen ones. According to our findings, ResNet representations obtained with Negative Log Likelihood ($\mathcal{L}_{NLL}$) and Softmax Cross-Entropy ($\mathcal{L}_{SCE}$) as loss functions are equally capable of producing better performance and fine representations on biased data. We note that without progressive representational similarities among the layers of a neural network, the performance is less likely to be robust.
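The abstract does not state which CKA variant is used. As an illustration only, the widely used linear form of CKA can be sketched as follows, where each row of `X` and `Y` holds one example's activations from a layer; the function name `linear_cka` is hypothetical.

```python
import numpy as np

def linear_cka(X, Y):
    """Linear CKA between two activation matrices of shape (n_examples, n_features).

    The two matrices must share n_examples but may differ in n_features.
    """
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    # Center each feature so the similarity ignores constant offsets.
    X = X - X.mean(axis=0, keepdims=True)
    Y = Y - Y.mean(axis=0, keepdims=True)
    cross = np.linalg.norm(Y.T @ X, ord="fro") ** 2
    norm_x = np.linalg.norm(X.T @ X, ord="fro")
    norm_y = np.linalg.norm(Y.T @ Y, ord="fro")
    return cross / (norm_x * norm_y)
```

Linear CKA is invariant to orthogonal transformations and isotropic scaling of the features, which is why it is suited to comparing layers of different networks.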

Improvising the Learning of Neural Networks on Hyperspherical Manifold

Sep 29, 2021

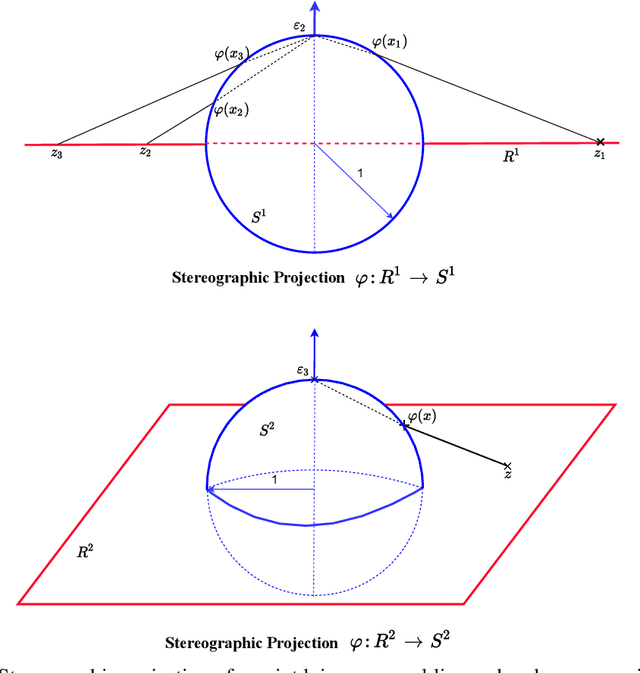

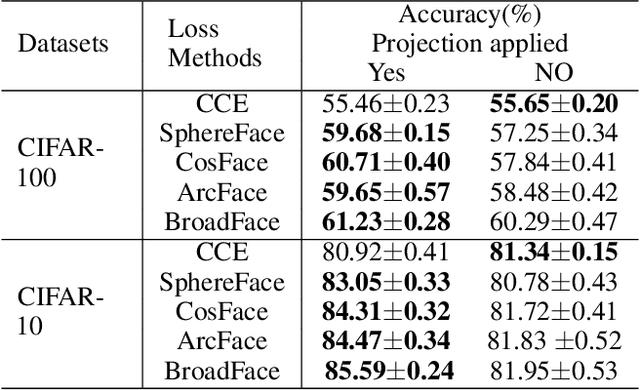

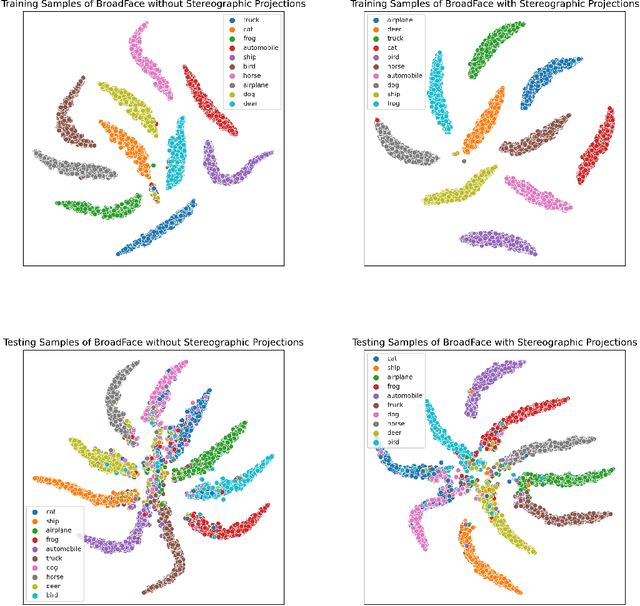

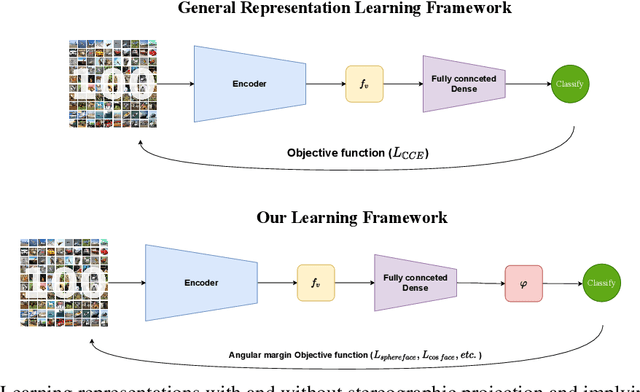

Abstract: Convolutional neural networks (CNNs) have brought tremendous performance gains in supervised settings. Representations learned by CNNs operating on a hyperspherical manifold have led to insightful outcomes in face recognition, face identification, and other supervised tasks. A broad range of activation functions developed with hyperspherical intuition outperform softmax in Euclidean space. This research aims to provide two insights. First, stereographic projection is applied to transform data from Euclidean space ($\mathbb{R}^{n}$) to the hyperspherical manifold ($\mathbb{S}^{n}$) in order to analyze the performance of angular margin losses. Second, we show both theoretically and empirically that decision boundaries constructed on the hypersphere using stereographic projection aid the learning of neural networks. Experiments show that applying stereographic projection to existing state-of-the-art angular margin objective functions improves performance on standard image classification data sets (CIFAR-10 and CIFAR-100). The code is publicly available at: https://github.com/barulalithb/stereo-angular-margin.
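To make the mapping from $\mathbb{R}^{n}$ to $\mathbb{S}^{n}$ concrete, here is a minimal sketch of the standard inverse stereographic projection onto the unit sphere, projecting from the north pole. The paper's exact formulation (pole, radius, scaling) may differ, and the function name `inverse_stereographic` is hypothetical.

```python
import numpy as np

def inverse_stereographic(x):
    """Map points in R^n to the unit sphere S^n embedded in R^(n+1).

    Uses x -> (2x / (|x|^2 + 1), (|x|^2 - 1) / (|x|^2 + 1)).
    """
    x = np.asarray(x, dtype=float)
    sq_norm = np.sum(x * x, axis=-1, keepdims=True)
    first = 2.0 * x / (sq_norm + 1.0)          # first n coordinates
    last = (sq_norm - 1.0) / (sq_norm + 1.0)   # extra (n+1)-th coordinate
    return np.concatenate([first, last], axis=-1)
```

Every output has unit Euclidean norm, so downstream angular margin losses can work directly with the angles between projected features and class weights.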
