Harnessing Wavelet Transformations for Generalizable Deepfake Forgery Detection

Paper and Code

Sep 26, 2024

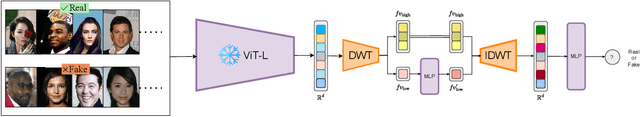

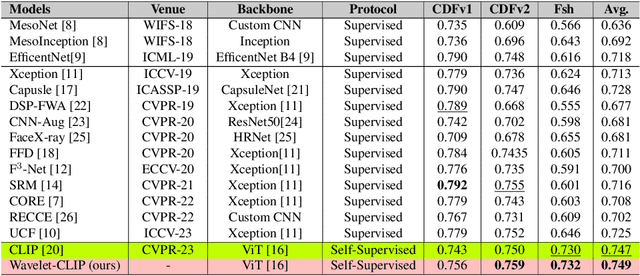

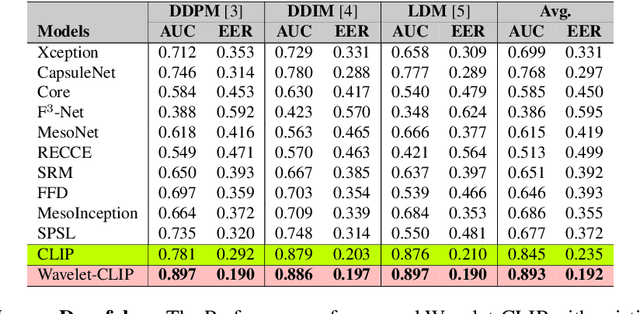

The evolution of digital image manipulation, particularly with the advancement of deep generative models, significantly challenges existing deepfake detection methods, especially when the origin of the deepfake is obscure. To tackle the increasing complexity of these forgeries, we propose \textbf{Wavelet-CLIP}, a deepfake detection framework that integrates wavelet transforms with features derived from the ViT-L/14 architecture, pre-trained in the CLIP fashion. Wavelet-CLIP utilizes Wavelet Transforms to deeply analyze both spatial and frequency features from images, thus enhancing the model's capability to detect sophisticated deepfakes. To verify the effectiveness of our approach, we conducted extensive evaluations against existing state-of-the-art methods for cross-dataset generalization and detection of unseen images generated by standard diffusion models. Our method showcases outstanding performance, achieving an average AUC of 0.749 for cross-data generalization and 0.893 for robustness against unseen deepfakes, outperforming all compared methods. The code can be reproduced from the repo: \url{https://github.com/lalithbharadwajbaru/Wavelet-CLIP}

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge