Kyudam Choi

A novel approach for holographic 3D content generation without depth map

Sep 26, 2023

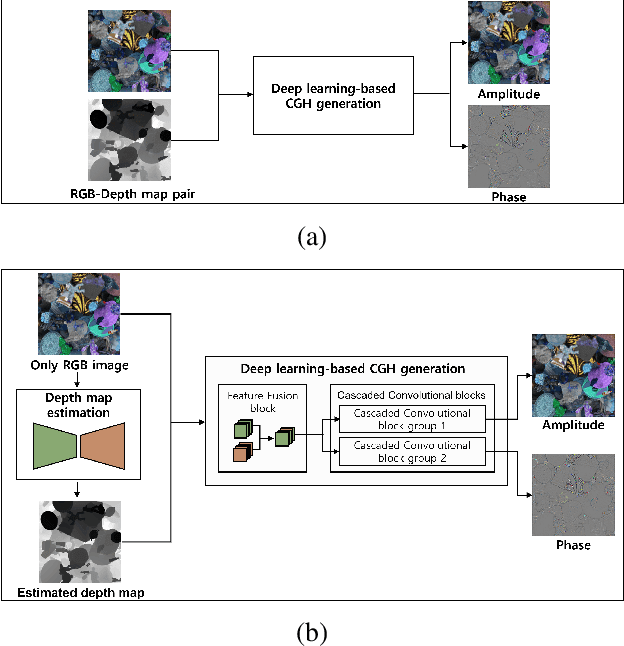

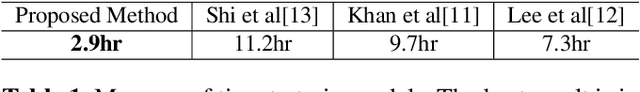

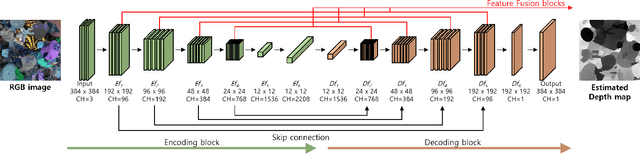

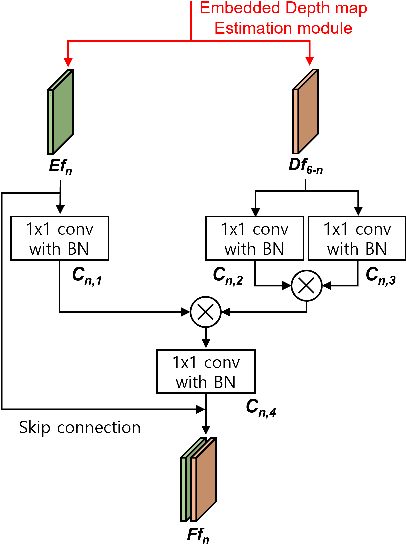

Abstract: Generating computer-generated holograms (CGHs) with the fast Fourier transform (FFT) algorithm requires a paired RGB color image and depth map for each scene. In real-world situations, however, these paired images are not always fully available. We propose a deep learning-based method that synthesizes volumetric digital holograms from an RGB image alone, which handles environments where RGB color and depth map images are only partially provided. The proposed method takes only an RGB image as input, first estimates its depth map, and then generates its CGH. Experiments show that the volumetric holograms generated by our model are more accurate than those of competing models when only RGB color data is available.
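The two-stage flow described in this abstract (estimate depth from the image, then compute a hologram with a Fourier transform) can be illustrated with a toy sketch. Everything here is an assumption for illustration, not the paper's model: `estimate_depth` is a hypothetical placeholder that uses brightness as depth where the paper uses a learned network, the object field simply encodes depth as phase, and a naive DFT stands in for an FFT so the example needs only the standard library.

```python
import cmath
import math


def dft2(field):
    """Naive 2D discrete Fourier transform, O(N^4); stands in for an FFT."""
    rows, cols = len(field), len(field[0])
    out = [[0j] * cols for _ in range(rows)]
    for u in range(rows):
        for v in range(cols):
            total = 0j
            for y in range(rows):
                for x in range(cols):
                    angle = -2 * math.pi * (u * y / rows + v * x / cols)
                    total += field[y][x] * cmath.exp(1j * angle)
            out[u][v] = total
    return out


def estimate_depth(gray):
    """Hypothetical placeholder for a learned depth estimator.

    Assumption: brightness in [0, 255] is mapped to a depth in [0, 1].
    """
    return [[pixel / 255.0 for pixel in row] for row in gray]


def hologram_phase(gray, depth):
    """Toy Fourier hologram: phase of the DFT of a complex object field.

    Amplitude comes from the image and phase from the depth map
    (one full phase cycle across the depth range, an arbitrary choice).
    """
    field = [
        [(g / 255.0) * cmath.exp(1j * 2 * math.pi * d) for g, d in zip(row_g, row_d)]
        for row_g, row_d in zip(gray, depth)
    ]
    return [[cmath.phase(c) for c in row] for row in dft2(field)]


# Stage 1: depth from the image alone. Stage 2: hologram from image + depth.
gray_image = [[0, 255], [128, 64]]
depth_map = estimate_depth(gray_image)
phase_pattern = hologram_phase(gray_image, depth_map)
```

A practical pipeline would replace the placeholder with a trained network and the naive DFT with an FFT routine; the sketch only shows how the second stage consumes the output of the first.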

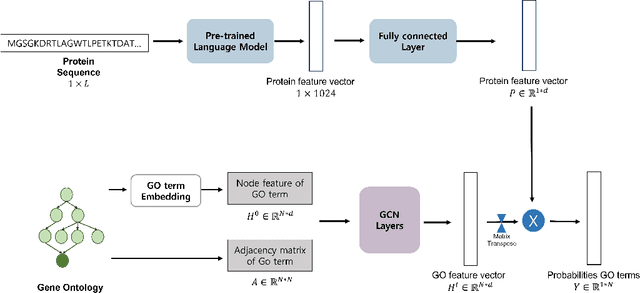

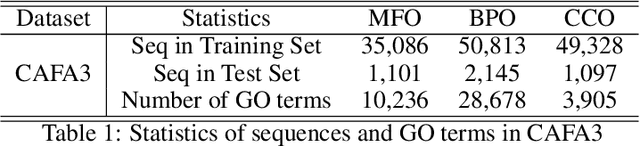

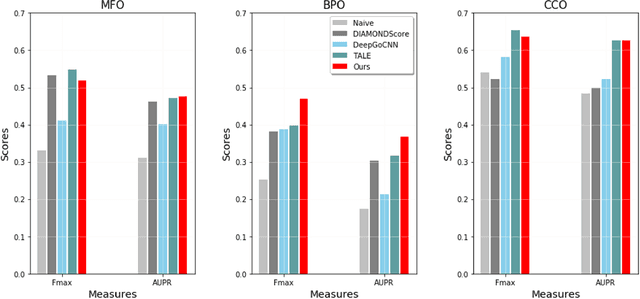

An Effective GCN-based Hierarchical Multi-label classification for Protein Function Prediction

Dec 06, 2021

Abstract: We propose an effective method to improve Protein Function Prediction (PFP) using the hierarchical features of Gene Ontology (GO) terms. Our method consists of a language model that encodes the protein sequence and a Graph Convolutional Network (GCN) that represents GO terms. To reflect the hierarchical structure of GO in the GCN, we employ node-wise (GO term-wise) representations containing the whole hierarchical information. Compared to previous models, our algorithm expands the GO graph and remains effective on the resulting large-scale graph. Experimental results show that our method outperforms state-of-the-art PFP approaches.
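To make the GCN component concrete, here is a minimal sketch of one graph convolution step over a tiny term hierarchy. The abstract does not specify the layer, so this assumes the widely used symmetric-normalization form, ReLU(D^-1/2 (A + I) D^-1/2 H W); the three-term graph, the feature values, and the weights are all hypothetical.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def normalize_adjacency(adj):
    """Add self-loops, then scale entry (i, j) by 1 / sqrt(deg_i * deg_j)."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def gcn_layer(adj, features, weights):
    """One graph convolution: mix neighbor features, project, apply ReLU."""
    propagated = matmul(normalize_adjacency(adj), features)
    return [[max(0.0, v) for v in row] for row in matmul(propagated, weights)]


# Hypothetical hierarchy: term 0 is the parent of terms 1 and 2.
adj = [[0, 1, 1],
       [1, 0, 0],
       [1, 0, 0]]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # one row per term
weights = [[1.0, -1.0], [0.5, 0.5]]               # illustrative, not learned
term_embeddings = gcn_layer(adj, features, weights)
```

Each output row is a term representation that blends the term's own features with those of its parent or children; stacking such layers spreads information further along the hierarchy.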

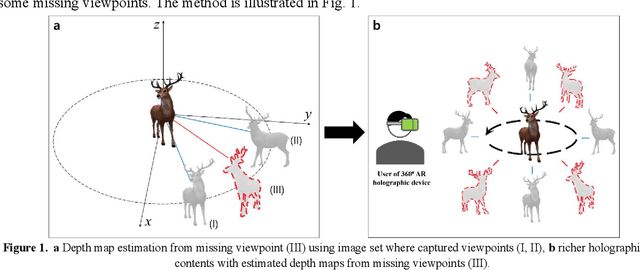

Deep Learning-based High-precision Depth Map Estimation from Missing Viewpoints for 360 Degree Digital Holography

Mar 09, 2021

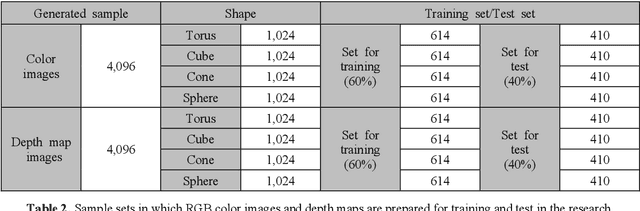

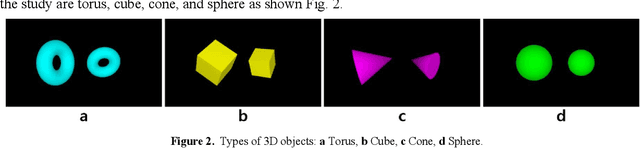

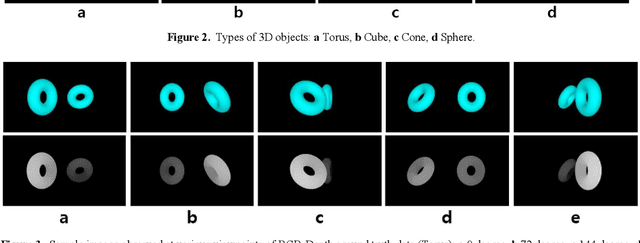

Abstract: In this paper, we propose a novel convolutional neural network model that extracts highly precise depth maps from missing viewpoints and is especially well suited to generating holographic 3D content. The depth map is an essential element for phase extraction, which is required to synthesize a computer-generated hologram (CGH). The proposed model, called HDD Net, uses mean squared error (MSE) as the loss function for better depth map estimation, and uses bilinear interpolation in the upsampling layers with ReLU as the activation function. We design and prepare a total of 8,192 multi-view images, each with a resolution of 640 by 360, for the deep learning study. The proposed model estimates depth maps through feature extraction followed by upsampling. For quantitative assessment, we compare the estimated depth maps with the ground truths using PSNR, ACC, and RMSE. We also compare the CGH patterns made from the estimated depth maps with those made from the ground truths. Furthermore, we present experimental results that test the quality of the estimated depth maps by directly reconstructing holographic 3D scenes from the CGHs.
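Two of the metrics named in this abstract, RMSE and PSNR, have standard definitions and can be sketched as follows. The sample depth maps and the 8-bit peak value of 255 are assumptions for illustration. ACC is left out because the abstract does not define it.

```python
import math


def rmse(estimate, truth):
    """Root-mean-square error between two equally sized 2D depth maps."""
    squared = [
        (e - t) ** 2
        for row_e, row_t in zip(estimate, truth)
        for e, t in zip(row_e, row_t)
    ]
    return math.sqrt(sum(squared) / len(squared))


def psnr(estimate, truth, max_value=255.0):
    """Peak signal-to-noise ratio in dB; infinite when the maps are identical."""
    err = rmse(estimate, truth)
    if err == 0:
        return math.inf
    return 20 * math.log10(max_value / err)


# Hypothetical 2x2 depth maps with 8-bit values.
ground_truth = [[0, 64], [128, 255]]
estimated = [[0, 60], [130, 250]]

error = rmse(estimated, ground_truth)
quality_db = psnr(estimated, ground_truth)
```

Lower RMSE and higher PSNR both indicate an estimated depth map that is closer to the ground truth.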
