Kristof Van Beeck

Person Detection Using an Ultra Low-resolution Thermal Imager on a Low-cost MCU

Dec 16, 2022

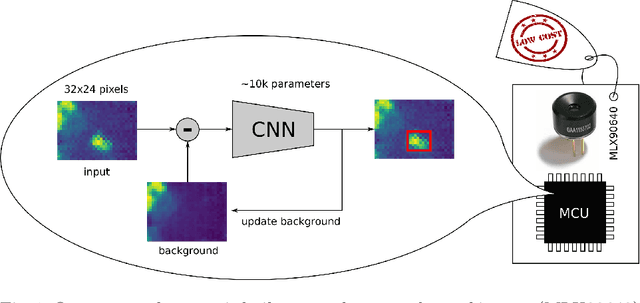

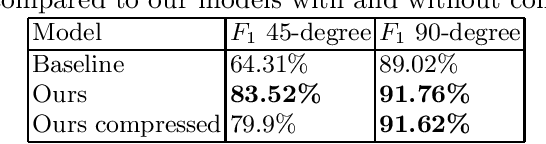

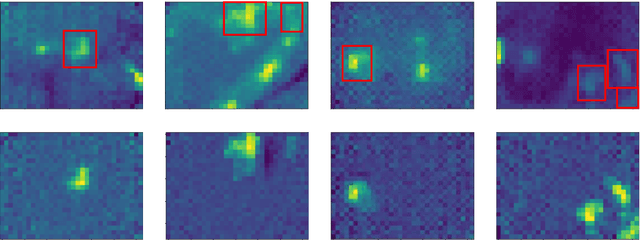

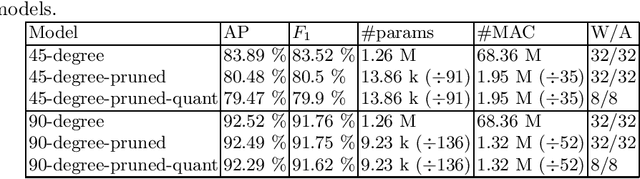

Abstract: Detecting persons in images or video with neural networks is a well-studied subject in the literature. However, such works usually assume the availability of a camera of decent resolution and a high-performance processor or GPU to run the detection algorithm, which significantly increases the cost of a complete detection system. Many applications, by contrast, require low-cost solutions composed of cheap sensors and simple microcontrollers. In this paper, we demonstrate that even on such hardware we are not condemned to simple classic image processing techniques. We propose a novel ultra-lightweight CNN-based person detector that processes thermal video from a low-cost 32x24 pixel static imager. Trained and compressed on our own recorded dataset, our model achieves up to 91.62% accuracy (F1-score), has fewer than 10k parameters, and runs in as little as 87 ms and 46 ms on the low-cost STM32F407 and STM32F746 microcontrollers, respectively.
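The abstract reports accuracy as an F1-score. As a reminder of what that metric combines, the following minimal sketch computes it from detection counts. The function name and the example counts are illustrative assumptions; they are not taken from the paper's evaluation.

```python
def f1_score(true_pos: int, false_pos: int, false_neg: int) -> float:
    """Return the F1-score (harmonic mean of precision and recall).

    The counts are hypothetical inputs, e.g. tallied by matching
    detections against annotated persons.
    """
    if true_pos == 0:
        # No correct detections: precision and recall are both zero (or undefined).
        return 0.0
    precision = true_pos / (true_pos + false_pos)
    recall = true_pos / (true_pos + false_neg)
    return 2 * precision * recall / (precision + recall)
```

With equal numbers of false positives and false negatives, precision and recall coincide and the F1-score equals both, for example `f1_score(8, 2, 2)` is approximately 0.8.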

Anyone here? Smart embedded low-resolution omnidirectional video sensor to measure room occupancy

Jul 09, 2020

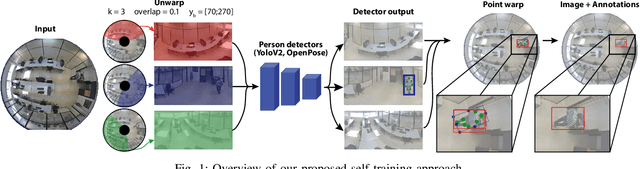

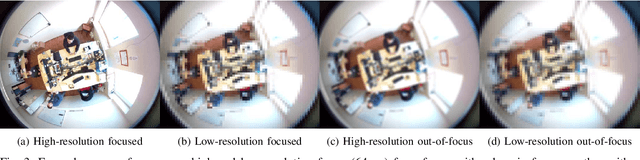

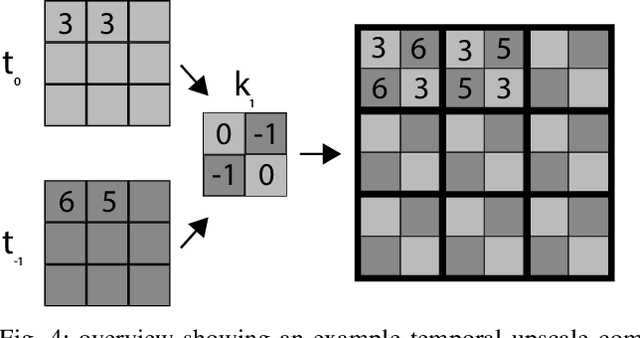

Abstract: In this paper, we present a room occupancy sensing solution with unique properties: (i) It is based on an omnidirectional vision camera, capturing rich scene information over a wide angle, which makes it possible to count the number of people in a room and even determine their position. (ii) Although it uses camera input, no privacy issues arise because of its extremely low image resolution, which renders people unrecognisable. (iii) The neural network inference runs entirely on a low-cost processing platform embedded in the sensor, reducing the privacy risk even further. (iv) Limited manual data annotation is needed, because of the self-training scheme we propose. Such a smart room occupancy rate sensor can be used in e.g. meeting rooms and flex-desks. Indeed, by encouraging flex-desking, the required office space can be reduced significantly. In some cases, however, a flex-desk that has been reserved remains unoccupied without an update in the reservation system. A similar problem occurs with meeting rooms, which are often under-occupied. By optimising the occupancy rate, a huge reduction in costs can be achieved. Therefore, in this paper, we develop such a system, which determines the number of people present in office flex-desks and meeting rooms. Using an omnidirectional camera mounted in the ceiling, combined with a person detector, the company can intelligently update the reservation system based on the measured occupancy. In addition to the optimisation and embedded implementation of such a self-training omnidirectional people detection algorithm, in this work we propose a novel approach that combines spatial and temporal image data, improving the performance of our system on extremely low-resolution images.

How low can you go? Privacy-preserving people detection with an omni-directional camera

Jul 09, 2020

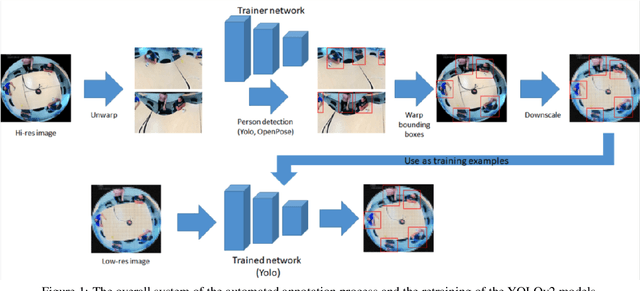

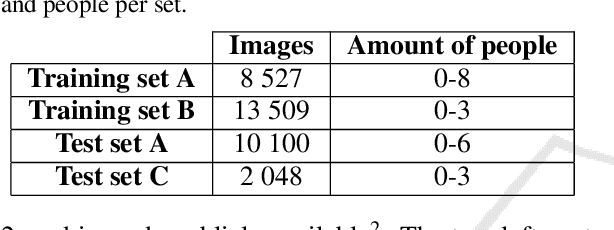

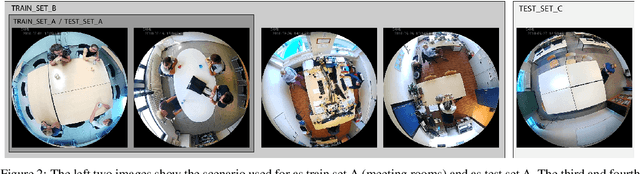

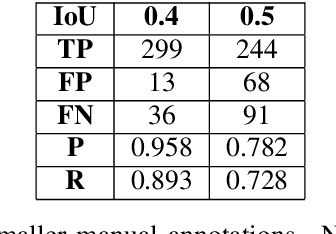

Abstract: In this work, we use a ceiling-mounted omni-directional camera to detect people in a room. This can be used as a sensor to measure the occupancy of meeting rooms and count the number of flex-desk working spaces available. If these devices can be integrated in an embedded low-power sensor, it would form an ideal extension of automated room reservation systems in office environments. The main challenge we target here is ensuring the privacy of the people filmed. The approach we propose is to go to extremely low image resolutions, such that it is impossible to recognise people or read potentially confidential documents. To this end, we retrained a single-shot low-resolution person detection network with automatically generated ground truth. In this paper, we prove the functionality of this approach and explore how low we can go in resolution, to determine the optimal trade-off between recognition accuracy and privacy preservation. Because of the low resolution, the result is a lightweight network that can potentially be deployed on embedded hardware. Such an embedded implementation enables the development of a decentralised smart camera which only outputs the required meta-data (i.e. the number of persons in the meeting room).
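To make the idea of "going low in resolution" concrete, the sketch below reduces a grayscale image by averaging non-overlapping pixel blocks. This is an assumed, generic downscaling method chosen for illustration; the abstract does not state how the authors produce their low-resolution images, and the function name `downscale` is hypothetical.

```python
def downscale(image, factor):
    """Average non-overlapping factor x factor blocks of a 2D grayscale image.

    `image` is a list of equal-length rows of numeric pixel values.
    Block averaging is an illustrative choice, not the paper's stated method.
    """
    height, width = len(image), len(image[0])
    if height % factor or width % factor:
        raise ValueError("image dimensions must be divisible by factor")
    result = []
    for top in range(0, height, factor):
        row = []
        for left in range(0, width, factor):
            # Collect every pixel in the current block and take the mean.
            block = [
                image[top + dy][left + dx]
                for dy in range(factor)
                for dx in range(factor)
            ]
            row.append(sum(block) / len(block))
        result.append(row)
    return result
```

Each increase in `factor` discards more detail, which is the lever behind the trade-off between recognition accuracy and privacy preservation that the abstract describes.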

Automated analysis of eye-tracker-based human-human interaction studies

Jul 09, 2020

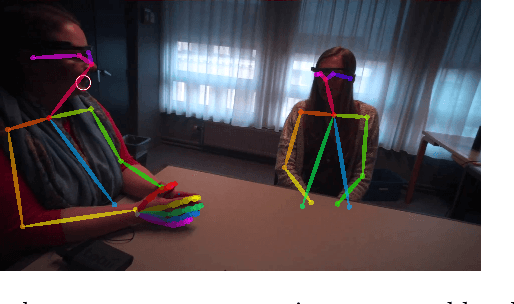

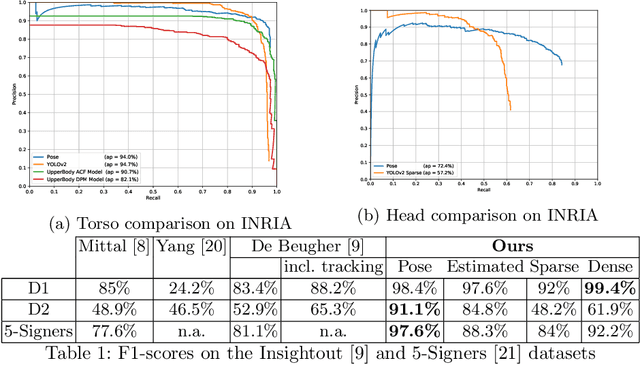

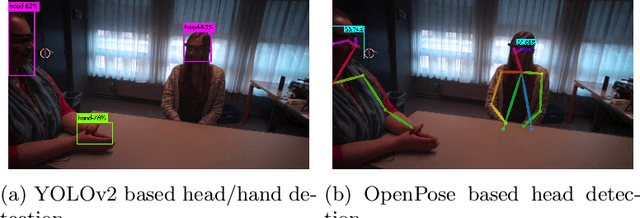

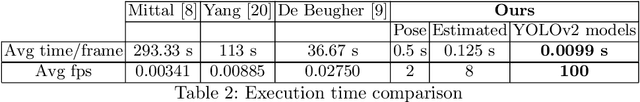

Abstract: Mobile eye-tracking systems have been available for about a decade now and are becoming increasingly popular in different fields of application, including marketing, sociology, usability studies and linguistics. While the user-friendliness and ergonomics of the hardware are developing at a rapid pace, the software for the analysis of mobile eye-tracking data still lacks robustness and functionality in some respects. In this paper, we investigate which state-of-the-art computer vision algorithms may be used to automate the post-analysis of mobile eye-tracking data. For the case study in this paper, we focus on mobile eye-tracker recordings made during human-human face-to-face interactions. We compared two recent publicly available frameworks (YOLOv2 and OpenPose) to relate the gaze location generated by the eye-tracker to the head and hands visible in the scene camera data. We show that the use of this single-pipeline framework provides robust results, which are both more accurate and faster than previous work in the field. Moreover, our approach does not rely on manual interventions during this process.
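Relating a gaze location to detected heads and hands can be pictured as a point-in-box test per video frame. The sketch below is a minimal illustration under stated assumptions: the `Box` type, the `gaze_target` function, and the tie-breaking rule (prefer the smallest enclosing box) are all hypothetical and are not taken from the paper, and the boxes are assumed to come from some detector in scene-camera pixel coordinates.

```python
from typing import NamedTuple, Optional, Sequence


class Box(NamedTuple):
    """Axis-aligned bounding box with a class label (hypothetical type)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def gaze_target(gaze_x: float, gaze_y: float,
                boxes: Sequence[Box]) -> Optional[str]:
    """Return the label of the box containing the gaze point, or None.

    If several boxes contain the point, the smallest one wins; this
    tie-break is an assumption made for the example.
    """
    hits = [
        box for box in boxes
        if box.x_min <= gaze_x <= box.x_max and box.y_min <= gaze_y <= box.y_max
    ]
    if not hits:
        return None
    smallest = min(
        hits, key=lambda b: (b.x_max - b.x_min) * (b.y_max - b.y_min)
    )
    return smallest.label
```

Running this for every frame yields a sequence of labels (or `None`) that could then be summarised into gaze statistics without manual annotation.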
