Kouichi Ikeno

Maximizing Invariant Data Perturbation with Stochastic Optimization

Jul 16, 2018

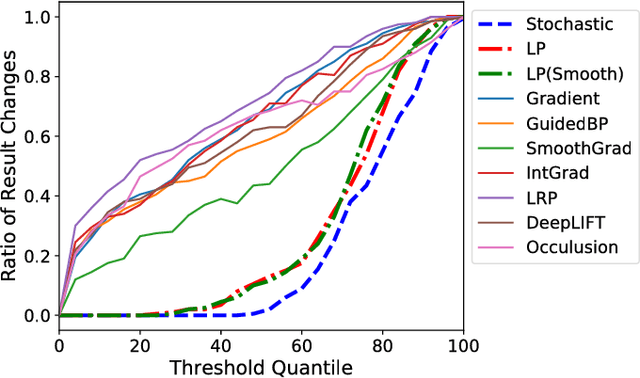

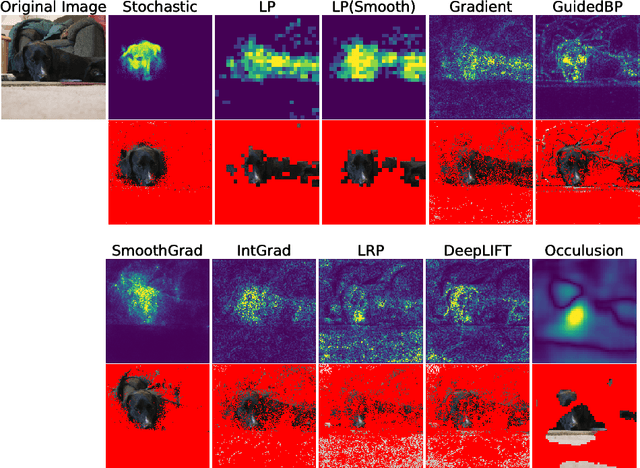

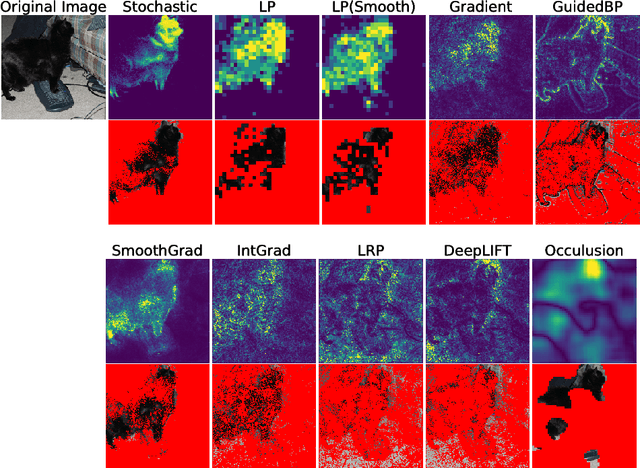

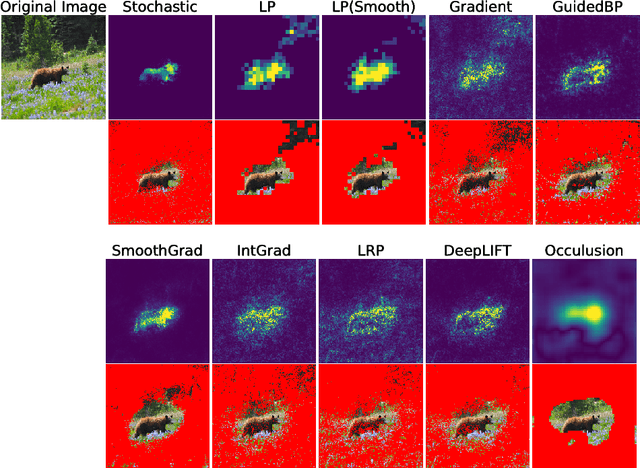

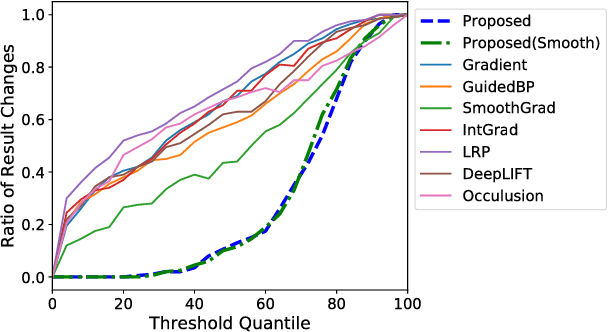

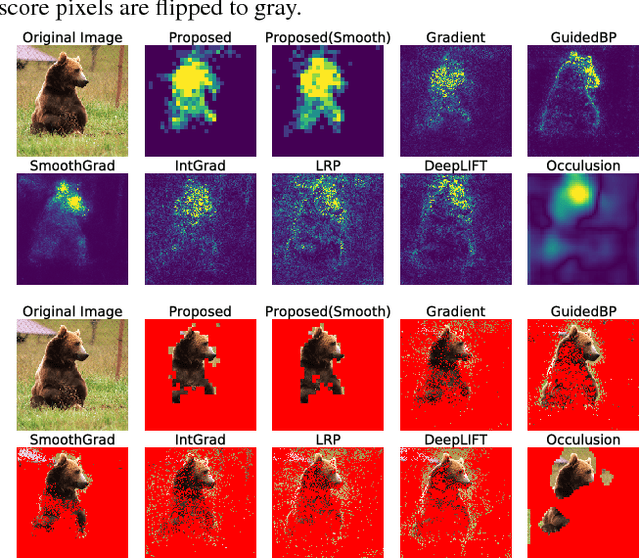

Abstract: Feature attribution methods, or saliency maps, are among the most popular approaches for explaining the decisions of complex machine learning models such as deep neural networks. In this study, we propose a stochastic optimization approach for perturbation-based feature attribution. The original optimization problem of perturbation-based feature attribution is difficult to solve because of its complex constraints, so we reformulate it as the maximization of a differentiable function, which can be solved using gradient-based algorithms. Stochastic optimization is particularly well suited to this reformulation, and the problem can be solved with popular algorithms such as SGD, RMSProp, and Adam. An image classification experiment with VGG16 shows that the proposed method can identify the relevant parts of images effectively.
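To make the idea of "maximizing a differentiable function with a stochastic gradient method" concrete, here is a minimal sketch on a toy linear classifier. It is not the paper's objective or code: the function names (`model_margin`, `fit_radii`), the hinge-style penalty, the uniform sampling scheme, and all parameter values are assumptions chosen for illustration. Each feature gets a perturbation radius `r[i]`; the sketch pushes the radii up while penalizing sampled perturbations that flip the prediction, using plain SGD.

```python
import random


def model_margin(w, b, x):
    """Toy linear classifier (an assumption): a positive margin means the class is kept."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def fit_radii(w, b, x, r_max=3.0, penalty=10.0, lr=0.001, steps=20000, seed=0):
    """Stochastic gradient ascent on sum(r) - penalty * E[max(0, -margin(x + r * u))].

    u is sampled uniformly from [-1, 1] per feature, so x + r * u lies inside
    the box defined by the radii r. All hyperparameters are illustrative.
    """
    rng = random.Random(seed)
    d = len(x)
    r = [0.0] * d
    for _ in range(steps):
        u = [rng.uniform(-1.0, 1.0) for _ in range(d)]
        x_pert = [xi + ri * ui for xi, ri, ui in zip(x, r, u)]
        margin = model_margin(w, b, x_pert)
        for i in range(d):
            grad = 1.0  # gradient of the sum(r) term
            if margin < 0:
                # Gradient of -penalty * (-margin) with respect to r[i].
                grad += penalty * w[i] * u[i]
            # Projected step: keep each radius inside [0, r_max].
            r[i] = min(r_max, max(0.0, r[i] + lr * grad))
    return r


if __name__ == "__main__":
    # Feature 0 has a large weight, feature 1 a small one.
    radii = fit_radii(w=[5.0, 0.1], b=0.0, x=[1.0, 1.0])
    print(radii)
```

In this toy setting the heavily weighted feature ends up with a much smaller radius than the lightly weighted one, which matches the intuition that important features tolerate only small perturbations. Swapping the plain SGD update for RMSProp or Adam would only change the update rule inside the loop.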

Maximally Invariant Data Perturbation as Explanation

Jul 11, 2018

Abstract: Although several feature scoring methods have been proposed to explain the output of complex machine learning models, most of them lack formal mathematical definitions. In this study, we propose a novel definition of the feature score based on the maximally invariant data perturbation, which is inspired by the idea of adversarial examples. With adversarial examples, one seeks the smallest data perturbation that changes the model's output. Our approach considers the opposite: we seek the maximally invariant data perturbation, that is, the largest perturbation that does not change the model's output. In this way, we can identify important input features as those with small allowable perturbations. To find the maximally invariant data perturbation, we formulate the problem as a linear program. An image classification experiment with VGG16 shows that the proposed method can identify the relevant parts of images effectively.
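As an illustration of how such a problem can take the form of a linear program, consider the following sketch. It is an assumed first-order formulation, not necessarily the one used in the paper. The symbols are hypothetical: $g(x) > 0$ is the margin by which the model keeps its current output at input $x$, $r_i \ge 0$ is the allowable perturbation radius of feature $i$, and $r_{\max}$ is an upper bound on each radius. Under the linearization $g(x + \delta) \approx g(x) + \nabla g(x)^\top \delta$, the worst case over all $|\delta_i| \le r_i$ is $g(x) - \sum_i |\partial g(x) / \partial x_i| \, r_i$, which gives:

```latex
\begin{aligned}
\max_{r \in \mathbb{R}^d} \quad & \sum_{i=1}^{d} r_i \\
\text{s.t.} \quad & \sum_{i=1}^{d} \left| \frac{\partial g(x)}{\partial x_i} \right| r_i \le g(x), \\
& 0 \le r_i \le r_{\max}, \quad i = 1, \dots, d.
\end{aligned}
```

Both the objective and the constraints are linear in $r$. Features with a large gradient magnitude consume the margin quickly and therefore receive small radii, which is how a small allowable perturbation signals an important feature.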
