Kosuke Tahara

Toyota Central R&D Labs., Inc.

Ex-DoF: Expansion of Action Degree-of-Freedom with Virtual Camera Rotation for Omnidirectional Image

Nov 24, 2021

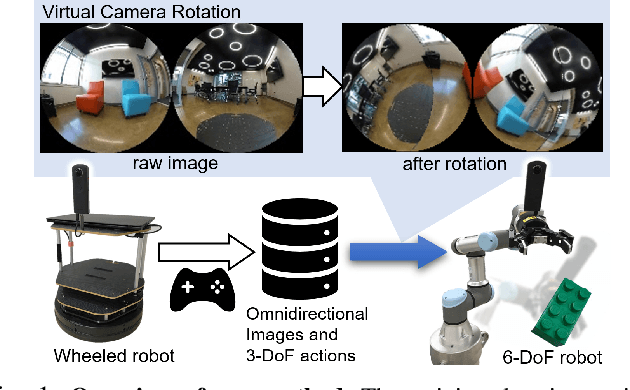

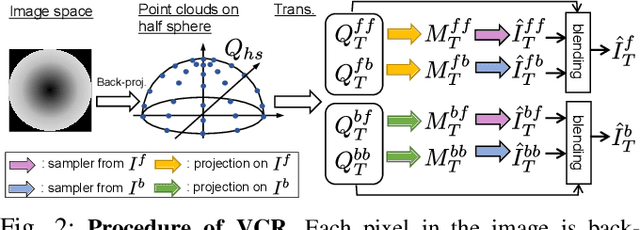

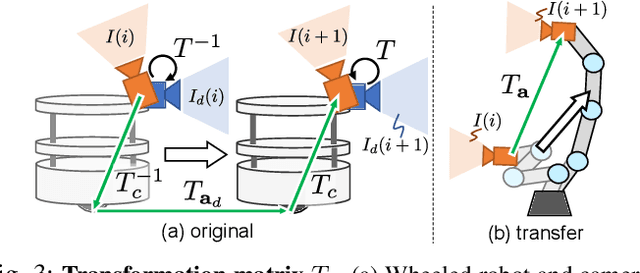

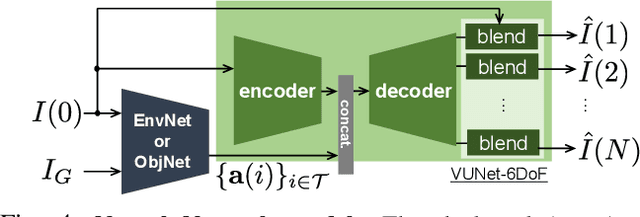

Abstract: Inter-robot transfer of training data is a little-explored topic in learning- and vision-based robot control. We therefore propose a method for transferring data from a robot with a lower degree-of-freedom (DoF) action to one with a higher DoF action, using an omnidirectional camera. Virtually rotating the robot's camera enables data augmentation in this transfer learning process. In this study, a vision-based control policy for a 6-DoF robot was trained using a dataset collected by a differential wheeled ground robot with only three DoFs. Toward applications in robotic manipulation, we also demonstrate a control system for a 6-DoF arm robot that uses multiple policies with different fields of view to perform object-reaching tasks.
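The abstract does not say how the virtual camera rotation is implemented. As a rough illustration of the idea only, the sketch below assumes the omnidirectional image is stored in equirectangular form as a nested list and resamples it with nearest-neighbor lookup under a 3x3 rotation matrix. All function names (`pixel_to_ray`, `ray_to_pixel`, `yaw_matrix`, `rotate_equirect`) and the axis convention are hypothetical and are not taken from the paper.

```python
import math


def pixel_to_ray(u, v, width, height):
    """Map an equirectangular pixel center to a unit viewing direction (assumed convention)."""
    lon = (u + 0.5) / width * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - (v + 0.5) / height * math.pi
    return (math.cos(lat) * math.cos(lon),
            math.cos(lat) * math.sin(lon),
            math.sin(lat))


def ray_to_pixel(ray, width, height):
    """Inverse of pixel_to_ray, rounded down to the containing pixel."""
    x, y, z = ray
    lon = math.atan2(y, x)
    lat = math.asin(max(-1.0, min(1.0, z)))
    u = int((lon + math.pi) / (2.0 * math.pi) * width) % width
    v = min(height - 1, int((math.pi / 2.0 - lat) / math.pi * height))
    return u, v


def yaw_matrix(angle):
    """Rotation about the vertical (z) axis by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))


def rotate_equirect(image, rotation):
    """Render the view of a virtually rotated camera by resampling the original image."""
    height, width = len(image), len(image[0])
    out = []
    for v in range(height):
        row = []
        for u in range(width):
            d = pixel_to_ray(u, v, width, height)
            # Express the virtual camera's ray in the original camera frame.
            src = tuple(sum(rotation[i][j] * d[j] for j in range(3)) for i in range(3))
            su, sv = ray_to_pixel(src, width, height)
            row.append(image[sv][su])
        out.append(row)
    return out
```

Because an omnidirectional image covers every viewing direction, any rotation matrix can be passed to `rotate_equirect` without leaving blank regions, which is what makes rotation usable as augmentation here. A pure yaw by a whole number of pixel widths reduces to a circular shift of the image columns.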

Depth360: Monocular Depth Estimation using Learnable Axisymmetric Camera Model for Spherical Camera Image

Oct 20, 2021

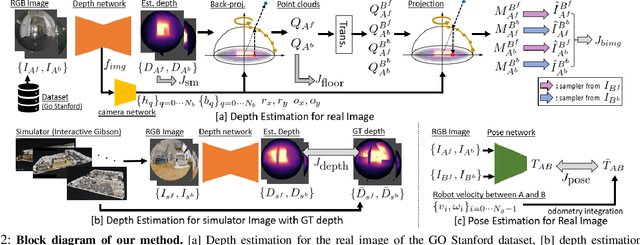

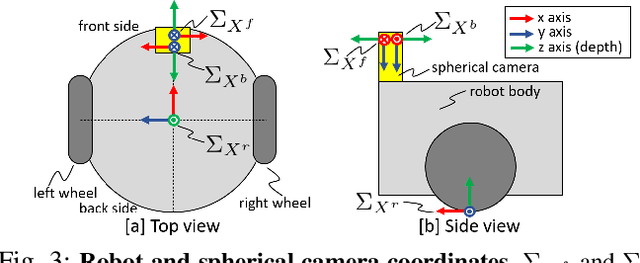

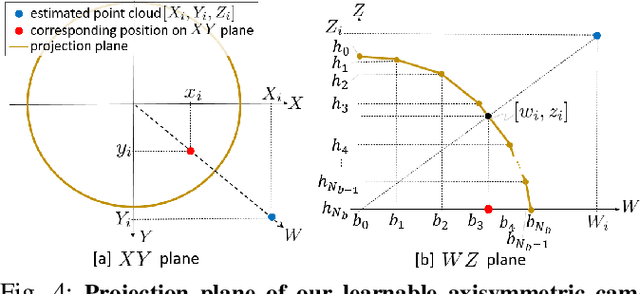

Abstract: Self-supervised monocular depth estimation has been widely investigated as a way to estimate depth images and relative poses from RGB images. This framework is attractive to researchers because the depth and pose networks can be trained from time-sequence images alone, without ground-truth depth or poses. In this work, we estimate the depth around a robot (a 360-degree view) using time-sequence spherical camera images from a camera whose parameters are unknown. We propose a learnable axisymmetric camera model that accepts distorted spherical camera images composed of two fisheye camera images. In addition, we trained our models with a photo-realistic simulator that generates ground-truth depth images to provide supervision. Moreover, we introduced loss functions that impose floor constraints to reduce artifacts that can result from reflective floor surfaces. We demonstrate the efficacy of our method using spherical camera images from the GO Stanford dataset, and we use pinhole camera images from the KITTI dataset to compare our method's performance with that of a baseline method in learning the camera parameters.
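The abstract does not give the parameterization of the axisymmetric camera model. To illustrate what "axisymmetric" means in general, the sketch below assumes a generic model in which the image radius depends only on the angle between the ray and the optical axis, expressed as a polynomial in that angle. The function name, the polynomial form, and the coefficients are assumptions for illustration; in a learnable model the coefficients would be the trained parameters.

```python
import math


def project_axisymmetric(point, coeffs, cx, cy):
    """Project a 3D point (camera frame, z along the optical axis) to pixel coordinates.

    Assumed model: r(theta) = coeffs[0]*theta + coeffs[1]*theta**2 + ...
    where theta is the angle between the ray and the optical axis.
    """
    x, y, z = point
    rho = math.hypot(x, y)
    if rho == 0.0:
        # A point on the optical axis projects to the image center.
        return (cx, cy)
    theta = math.atan2(rho, z)
    r = sum(k * theta ** (i + 1) for i, k in enumerate(coeffs))
    # The azimuth around the optical axis is preserved; only the radius is distorted.
    return (cx + r * x / rho, cy + r * y / rho)
```

With a single coefficient, `coeffs = [f]`, this reduces to the equidistant fisheye projection `r = f * theta`. Rays with `theta` greater than 90 degrees are still handled, which matters for fisheye lenses with a wide field of view.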
