Koorush Ziarati

Frost Prediction Using Machine Learning Methods in Fars Province

Jan 21, 2024

Abstract: Frost, chilling, and freezing are common hazards in meteorology and agriculture. Frost occurs when the minimum ambient temperature falls below a certain threshold. It causes extensive damage across the country, especially in Fars province. Addressing the problem requires not only predicting the minimum temperature but also predicting it early enough to implement the necessary protective measures. The Food and Agriculture Organization (FAO) provides empirical methods that can predict the minimum temperature, but not far enough in advance. Machine learning methods offer another way to model the minimum temperature. In this study, we use three methods: Gated Recurrent Unit (GRU) and Temporal Convolutional Network (TCN) as deep learning methods, and gradient boosting (XGBoost). We design a customized loss function for the deep learning methods, which can help reduce prediction errors. With the deep learning models, we not only observe a lower RMSE than with the empirical methods but also gain more lead time: we can model the minimum temperature for the next 24 hours from the current 24 hours of data. The gradient boosting model (XGBoost) keeps the same prediction lead time as the deep learning models while also reducing the RMSE. Finally, we conclude experimentally that machine learning methods work better than empirical methods and that XGBoost can perform better on this problem than the other implemented models.
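The "next 24 hours from the current 24 hours" setup described in the abstract can be illustrated with a sliding-window construction. The following is only a minimal sketch under stated assumptions: the abstract does not give the paper's actual preprocessing, so the function name `make_windows`, the hourly sampling, and the synthetic series are all hypothetical.

```python
import numpy as np

def make_windows(series, n_in=24, n_out=24):
    """Split a 1-D hourly series into (input, target) window pairs.

    Hypothetical helper: each input holds n_in consecutive hours and each
    target holds the n_out hours that immediately follow.
    """
    series = np.asarray(series, dtype=float)
    n = len(series) - n_in - n_out + 1
    if n <= 0:
        raise ValueError("series is too short for the requested windows")
    X = np.stack([series[i:i + n_in] for i in range(n)])
    y = np.stack([series[i + n_in:i + n_in + n_out] for i in range(n)])
    return X, y

# Synthetic stand-in for hourly temperature readings (assumption, not real data).
hourly_temp = np.arange(72, dtype=float)

X, y = make_windows(hourly_temp)

# Minimum temperature over each 24-hour target window, i.e. the quantity
# a frost model would be trained to predict.
y_min = y.min(axis=1)
```

Any regressor (a GRU, a TCN, or XGBoost) could then be fit on `X` against `y` or `y_min`; which target the authors used is not stated in the abstract.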

On Principal Curve-Based Classifiers and Similarity-Based Selective Sampling in Time-Series

Apr 10, 2022

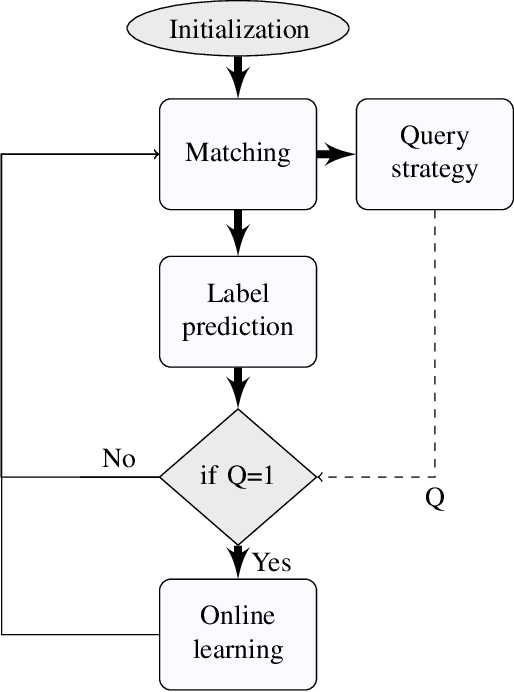

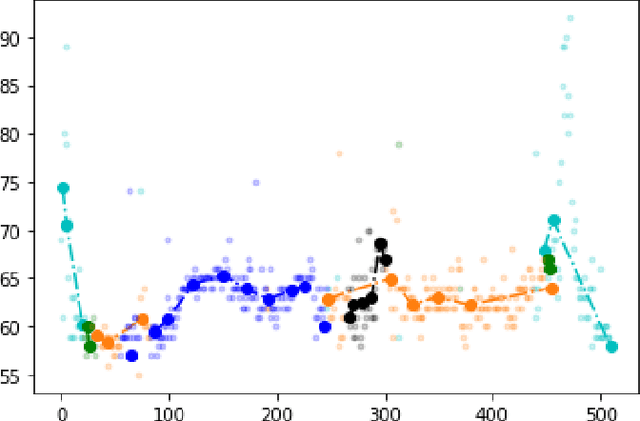

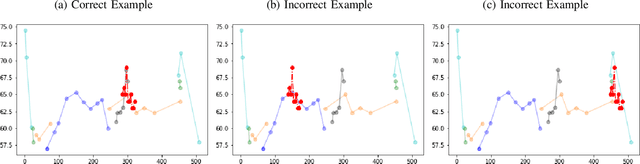

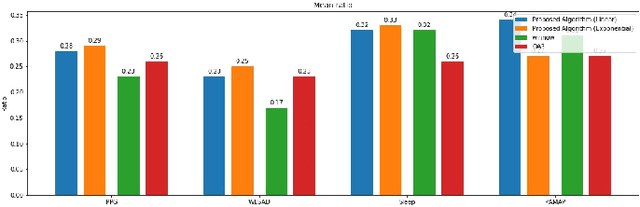

Abstract: Considering the concept of time dilation, recurrent neural architectures have some major issues. Any variation in the time spans between input data points degrades the performance of recurrent neural network architectures. Principal curve-based classifiers can handle any kind of variation in time spans. In other words, principal curve-based classifiers preserve the relativity of time, while neural network architectures violate this property. In addition, given the labeling costs and problems in online monitoring devices, an algorithm is needed that finds the data points whose labels, once known, will most improve the performance of the classifier. Current selective sampling algorithms lack reliability because of their randomness. This paper proposes a classifier and a deterministic selective sampling algorithm with the same computational steps, both using the principal curve as the building block of the model definition.

Graph-based Local Climate Classification in Iran

Oct 18, 2021

Abstract: In this paper, we introduce a novel graph-based method to classify regions with similar climate in a local area. We refer to our proposed method as the Graph Partition Based Method (GPBM). The method attempts to overcome the shortcomings of the current state-of-the-art methods in the literature. It places no limit on the number of variables that can be used and preserves the nature of climate data. To illustrate the capability of our proposed algorithm, we benchmark its performance against other state-of-the-art climate classification techniques. The climate data is collected from 24 synoptic stations in Fars province in southern Iran. The data includes seven climate variables stored as time series from 1951 to 2017. Our results show that the proposed method produces a more realistic climate classification with less computational time. It retains more information during the climate classification process and is therefore efficient for further data analysis. Furthermore, using our method, we can introduce seasonal graphs to better investigate seasonal climate changes. To the best of our knowledge, our proposed method is the first graph-based climate classification system.
