Konstantin Bauman

The Long Tail of Context: Does it Exist and Matter?

Oct 03, 2022

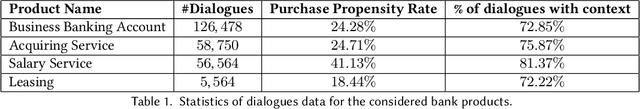

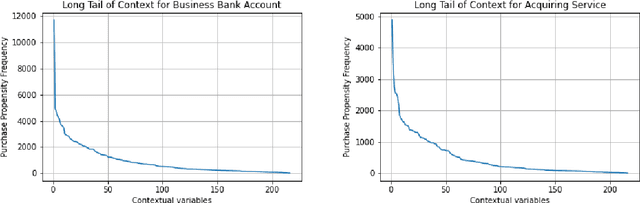

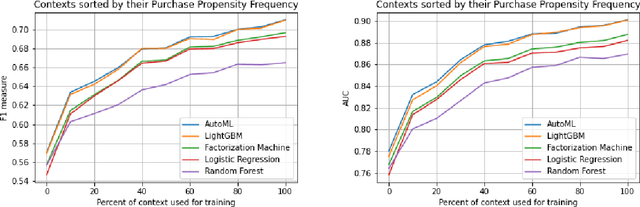

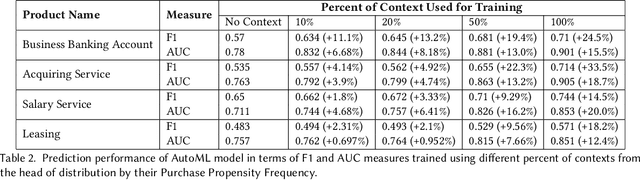

Abstract: Context has been an important topic in recommender systems over the past two decades. A standard representational approach to context assumes that the contextual variables and their structures are known in an application. Most prior papers on context-aware recommender systems (CARS) that follow the representational approach manually selected and considered only a few crucial contextual variables, such as time, location, and the company of a person. This prior work demonstrated significant improvements in recommendation performance when various CARS-based methods were deployed in numerous applications. However, some recommender system applications deal with a much larger and broader variety of contexts, and manually identifying and capturing a few contextual variables is not sufficient in such cases. In this paper, we study such "context-rich" applications, which deal with a large variety of different types of contexts. We demonstrate that supporting only a few of the most important contextual variables, although useful, is not sufficient. Our study focuses on an application that recommends various banking products to commercial customers within the context of dialogues initiated by customer service representatives. In this application, we identified over two hundred types of contextual variables. Sorting these variables by their importance forms the Long Tail of Context (LTC). We empirically demonstrate that the LTC matters and that using all the contextual variables from the Long Tail leads to significant improvements in recommendation performance.
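The abstract says that sorting contextual variables by importance forms the Long Tail of Context. The following is a minimal Python sketch of only that ordering step. It assumes an importance score is already available for every contextual variable, because how the paper measures importance is not stated here. The function names `long_tail_of_context` and `tail_share` are hypothetical, and so are all variable names and scores except the three examples from the abstract (time, location, company). `tail_share` reports how much of the total importance falls outside the few top variables that a manual approach would keep.

```python
from typing import Dict, List, Tuple


def long_tail_of_context(importance: Dict[str, float]) -> List[Tuple[str, float]]:
    """Return contextual variables sorted by decreasing importance (the LTC ordering)."""
    return sorted(importance.items(), key=lambda kv: kv[1], reverse=True)


def tail_share(importance: Dict[str, float], head_size: int) -> float:
    """Fraction of total importance carried by variables outside the top `head_size`."""
    ranked = long_tail_of_context(importance)
    total = sum(score for _, score in ranked)
    if total == 0:
        return 0.0
    tail = sum(score for _, score in ranked[head_size:])
    return tail / total


# Hypothetical importance scores; a real application would compute these,
# e.g. with some feature-importance method, for 200+ contextual variables.
scores = {"time": 0.30, "location": 0.20, "company": 0.10,
          "ctx_4": 0.08, "ctx_5": 0.07, "ctx_6": 0.05}

print([name for name, _ in long_tail_of_context(scores)][:3])  # ['time', 'location', 'company']
print(round(tail_share(scores, 3), 4))  # 0.25
```

In this toy example, a quarter of the total importance lies in the tail beyond the three hand-picked variables. That share is the kind of signal the abstract argues is lost when only a few variables are modeled.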

Discovering the Graph Structure in the Clustering Results

May 18, 2017

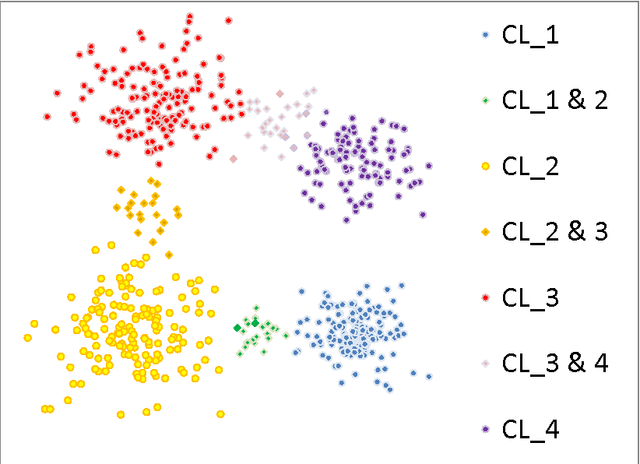

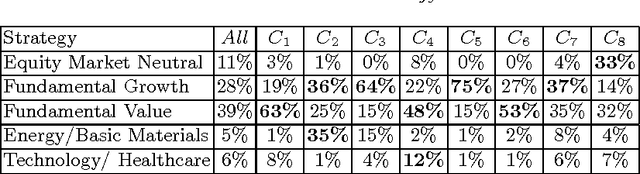

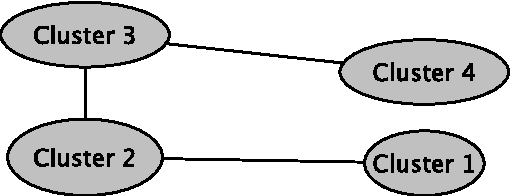

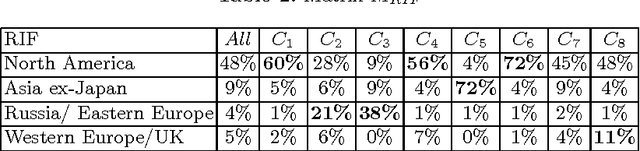

Abstract: In a standard cluster analysis, such as k-means, it is important to know not only the cluster locations and the distances between them but also whether the clusters are connected or well separated from each other. The main focus of this paper is discovering the relations between the resulting clusters. We propose a new method based on pairwise overlapping k-means clustering that, in addition to the cluster means, provides the graph structure of their relations. The proposed method has a set of parameters that can be tuned to control the sensitivity of the model and the desired relative size of the pairwise overlapping interval between the means of two adjacent clusters, i.e., the level of overlapping. We present the exact formula for calculating that parameter. The empirical study presented in the paper demonstrates that our approach not only works well on toy data but also complements standard clustering results with a reasonable graph structure on real datasets, such as financial indices and restaurants.
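To make the idea of an "overlapping interval between means of two adjacent clusters" concrete, the sketch below builds a cluster graph from already computed cluster means. It is **not** the paper's pairwise overlapping k-means. It is a simpler, hypothetical projection heuristic. Each point is assigned to its nearest mean. Two clusters are then linked when at least `min_points` of their members project onto a middle band of the segment joining the two means, and the band width is set by `overlap` as a fraction of the segment length. The function name and both parameters are assumptions for illustration. The heuristic also ignores perpendicular distance from the segment, which is a deliberate simplification.

```python
import numpy as np


def cluster_graph(X, centers, overlap=0.2, min_points=1):
    """Hypothetical overlap heuristic (not the paper's method).

    Links clusters i and j when at least `min_points` of their members project
    onto the band |t - 0.5| <= overlap / 2, where t is the relative position
    along the segment from mean i (t = 0) to mean j (t = 1).
    """
    X = np.asarray(X, dtype=float)
    centers = np.asarray(centers, dtype=float)
    # Assign each point to its nearest mean, as in a k-means assignment step.
    dists = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
    labels = dists.argmin(axis=1)
    edges = set()
    for i in range(len(centers)):
        for j in range(i + 1, len(centers)):
            seg = centers[j] - centers[i]
            length2 = float(seg @ seg)
            if length2 == 0.0:
                continue  # coincident means: no segment to test
            pts = X[(labels == i) | (labels == j)]
            # Relative position of each member along the segment between the means.
            t = (pts - centers[i]) @ seg / length2
            in_band = np.abs(t - 0.5) <= overlap / 2
            if int(in_band.sum()) >= min_points:
                edges.add((i, j))
    return edges


# 1-D toy data: a continuous stretch on [0, 10] and a separate group on [20, 22].
X = np.concatenate([np.linspace(0, 10, 101), np.linspace(20, 22, 21)]).reshape(-1, 1)
print(cluster_graph(X, centers=[[2.5], [7.5], [21.0]]))  # {(0, 1)}
```

The two means that split the continuous stretch are linked, because points fill the band around their midpoint. The isolated group gets no edge, because no member of either cluster falls between it and the other means.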

One-Class Semi-Supervised Learning: Detecting Linearly Separable Class by its Mean

May 02, 2017

Abstract: In this paper, we present a novel semi-supervised one-class classification algorithm that assumes the class is linearly separable from the other elements. We prove theoretically that a class is linearly separable if and only if it is maximal in probability among the sets with the same mean. Furthermore, we present an algorithm that uses linear programming to identify such a linearly separable class. We describe three application cases: an assumption of linear separability, a Gaussian distribution, and linear separability in the transformed space of kernel functions. Finally, we demonstrate the proposed algorithm on the USPS dataset and analyze how its performance depends on the size of the initially labeled sample.
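The paper's mean-based, semi-supervised algorithm is not reproduced here. As a point of reference for how linear programming decides linear separability, the sketch below uses the standard fully labeled feasibility test. It searches for a weight vector `w` and bias `b` with `w·x + b >= 1` on the class and `w·x + b <= -1` on all other points. Any strictly separating hyperplane can be rescaled to meet these margins, so the LP is feasible exactly when the two sets are strictly linearly separable. The sketch assumes SciPy is available, and the function name `is_linearly_separable` is hypothetical.

```python
import numpy as np
from scipy.optimize import linprog


def is_linearly_separable(pos, neg):
    """Standard LP feasibility test (not the paper's algorithm).

    Looks for z = [w, b] with w.x + b >= 1 for x in pos and w.x + b <= -1 for x in neg.
    """
    pos = np.atleast_2d(np.asarray(pos, dtype=float))
    neg = np.atleast_2d(np.asarray(neg, dtype=float))
    d = pos.shape[1]
    # Rewrite both constraint sets in the form A_ub @ z <= b_ub.
    A_pos = -np.hstack([pos, np.ones((len(pos), 1))])  # -(w.x + b) <= -1
    A_neg = np.hstack([neg, np.ones((len(neg), 1))])   #   w.x + b  <= -1
    A_ub = np.vstack([A_pos, A_neg])
    b_ub = -np.ones(len(A_ub))
    # Zero objective: only feasibility matters; w and b are unbounded.
    res = linprog(c=np.zeros(d + 1), A_ub=A_ub, b_ub=b_ub,
                  bounds=[(None, None)] * (d + 1))
    return res.status == 0  # 0: solution found; 2: problem infeasible


print(is_linearly_separable([[2, 2], [3, 3]], [[0, 0], [-1, 0]]))  # True
print(is_linearly_separable([[0, 0], [1, 1]], [[0, 1], [1, 0]]))   # False (XOR)
```

Unlike this supervised check, the one-class setting in the abstract starts from only a partially labeled class. This is why the paper relies on the class mean rather than on labels for every point.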
