Khai N. Truong

ELMI: Interactive and Intelligent Sign Language Translation of Lyrics for Song Signing

Sep 15, 2024

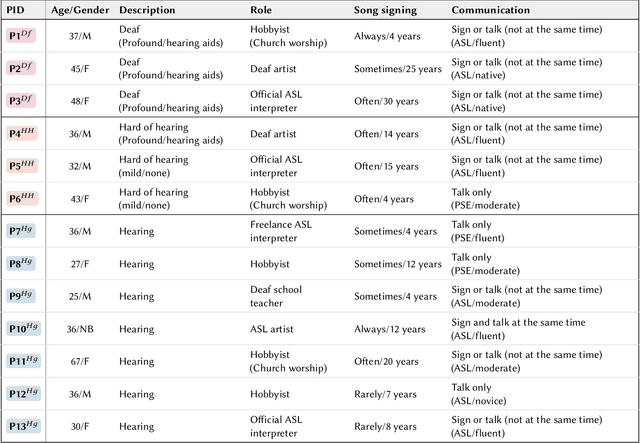

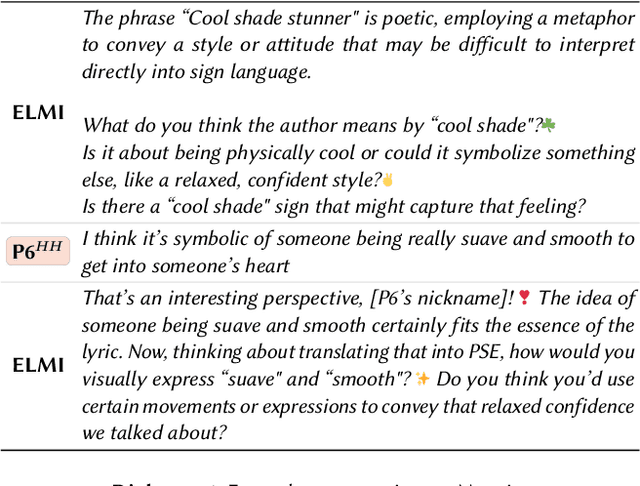

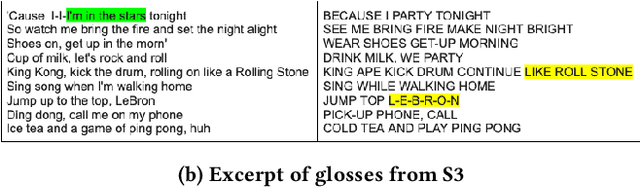

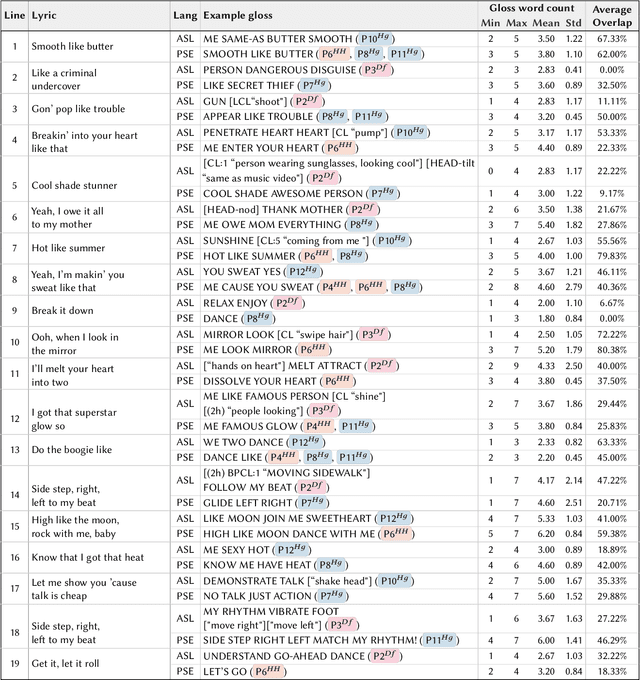

Abstract: d/Deaf and hearing song-signers have become prevalent on video-sharing platforms, but translating songs into sign language remains cumbersome and inaccessible. Our formative study revealed the challenges song-signers face, including semantic, syntactic, expressive, and rhythmic considerations in translations. We present ELMI, an accessible song-signing tool that assists in translating lyrics into sign language. ELMI enables users to edit glosses line by line, with real-time synced lyric highlighting and music video snippets. Users can also chat with a large language model-driven AI to discuss meaning, glossing, emoting, and timing. Through an exploratory study with 13 song-signers, we examined how ELMI facilitates their workflows and how song-signers leverage and receive an LLM-driven chat for translation. Participants successfully adopted ELMI for song-signing, with active discussions on the fly. They also reported improved confidence and independence in their translations, finding ELMI encouraging, constructive, and informative. We discuss design implications for leveraging LLMs in culturally sensitive song-signing translations.

On the Challenges of Detecting Rude Conversational Behaviour

Dec 28, 2017

Abstract: In this study, we aim to identify moments of rudeness between two individuals. In particular, we segment all occurrences of rudeness in conversations into three broad, distinct categories and try to identify each. We show how machine learning algorithms can be used to identify rudeness based on acoustic and semantic signals extracted from conversations. Furthermore, we note our shortcomings in this task and highlight what makes this problem inherently difficult. Finally, we outline the next steps needed to ensure further success in identifying rudeness in conversations.
