Keyvan Ansari

Blockchain-Enabled Federated Learning Approach for Vehicular Networks

Nov 10, 2023

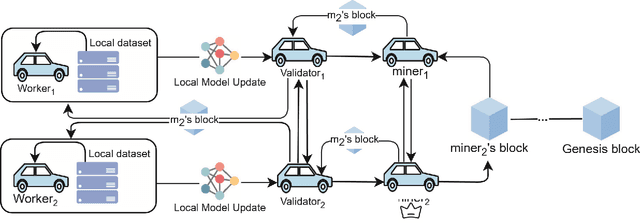

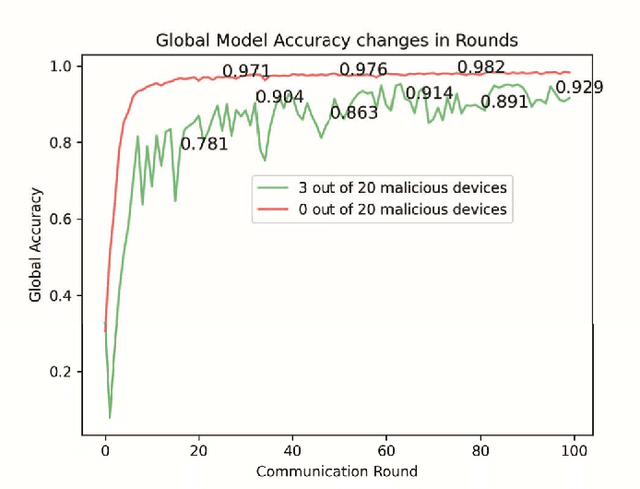

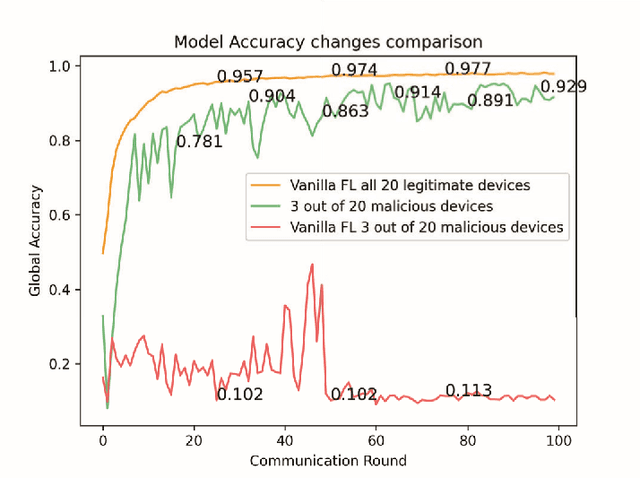

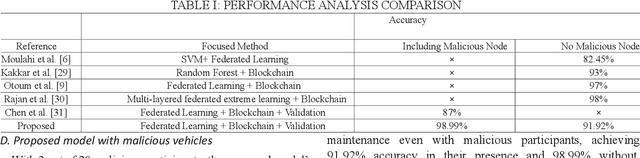

Abstract: Data from interconnected vehicles may contain sensitive information such as location, driving behavior, and personal identifiers. Without adequate safeguards, sharing this data jeopardizes data privacy and system security. The current centralized data-sharing paradigm in these systems raises particular concerns about data privacy. Recognizing these challenges, the shift towards decentralized interactions in technology, as echoed by the principles of Industry 5.0, becomes paramount. This work is closely aligned with these principles, emphasizing decentralized, human-centric, and secure technological interactions in an interconnected vehicular ecosystem. To embody this, we propose a practical approach that merges two emerging technologies: Federated Learning (FL) and Blockchain. The integration of these technologies enables the creation of a decentralized vehicular network. In this setting, vehicles can learn from each other without compromising privacy while also ensuring data integrity and accountability. Initial experiments show that, compared to conventional decentralized federated learning techniques, our proposed approach significantly enhances the performance and security of vehicular networks. The system's accuracy stands at 91.92%. While this may appear low in comparison to state-of-the-art federated learning models, our work is noteworthy because, unlike others, this accuracy was achieved in a setting with malicious vehicles. Despite the challenging environment, our method maintains high accuracy, making it a competent solution for preserving data privacy in vehicular networks.

Explainable Artificial Intelligence for Smart City Application: A Secure and Trusted Platform

Oct 31, 2021

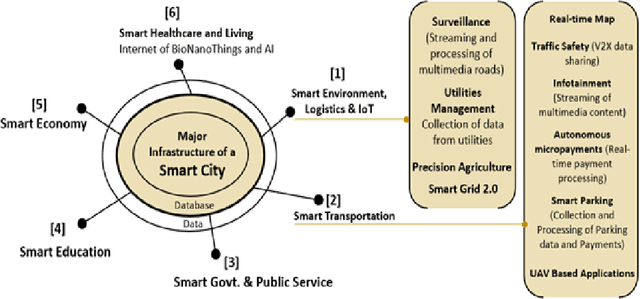

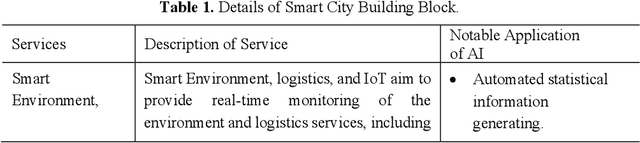

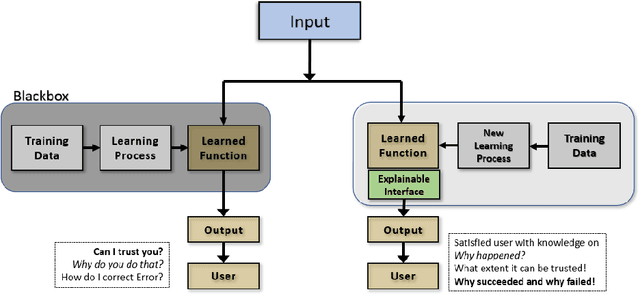

Abstract: Artificial Intelligence (AI) is one of the disruptive technologies shaping the future. It has growing applications for data-driven decisions in major smart city solutions, including transportation, education, healthcare, public governance, and power systems. At the same time, it is gaining popularity in protecting critical cyber infrastructure from cyber threats, attacks, damage, and unauthorized access. However, a significant issue with traditional AI technologies (e.g., deep learning) is that their rapid growth in complexity and sophistication has turned them into uninterpretable black boxes. It is often very challenging to understand a system's decisions and biases well enough to control and trust its unexpected or seemingly unpredictable outputs. It is acknowledged that the loss of control over the interpretability of decision-making is a critical issue for many data-driven automated applications. But how may it affect a system's security and trustworthiness? To address this question, this chapter conducts a comprehensive study of machine learning applications in cybersecurity that indicates the need for explainability. It first discusses the black-box problems of AI technologies for cybersecurity applications in smart city-based solutions. Then, considering the new technological paradigm of Explainable Artificial Intelligence (XAI), it discusses the transition from black-box to white-box models. The chapter also discusses the requirements of this transition concerning the interpretability, transparency, understandability, and explainability of AI-based technologies when applied to different autonomous systems in smart cities. Finally, it presents some commercial XAI platforms that offer explainability over traditional AI technologies, followed by future challenges and opportunities.
