Keshav Ganapathy

Analyzing the Machine Learning Conference Review Process

Nov 26, 2020

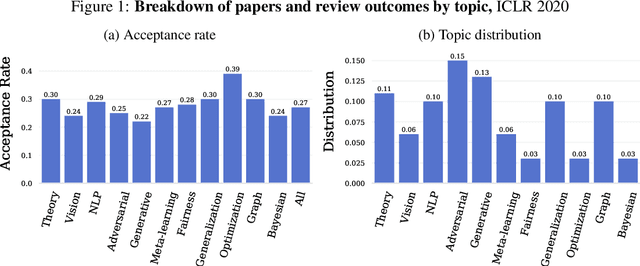

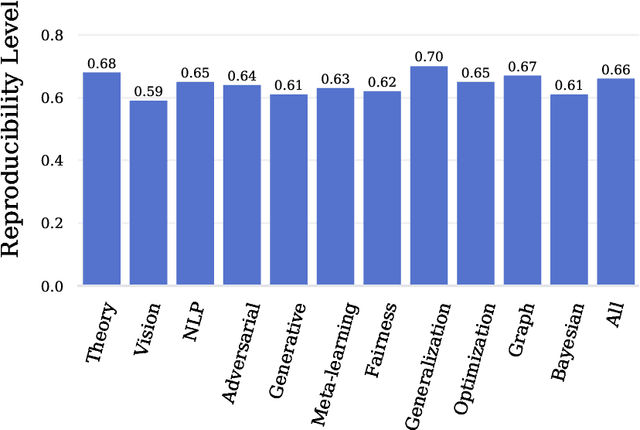

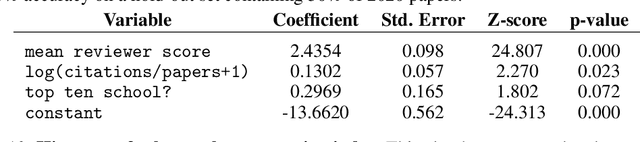

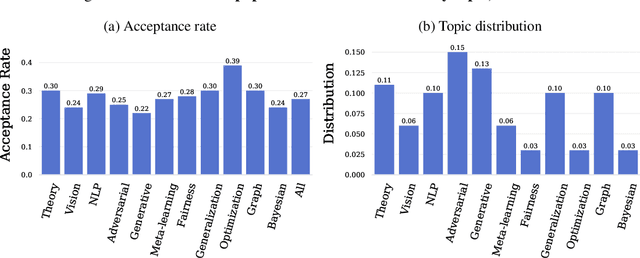

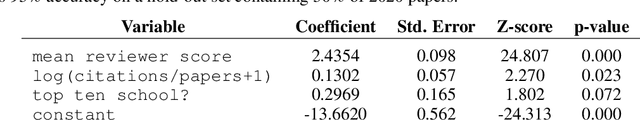

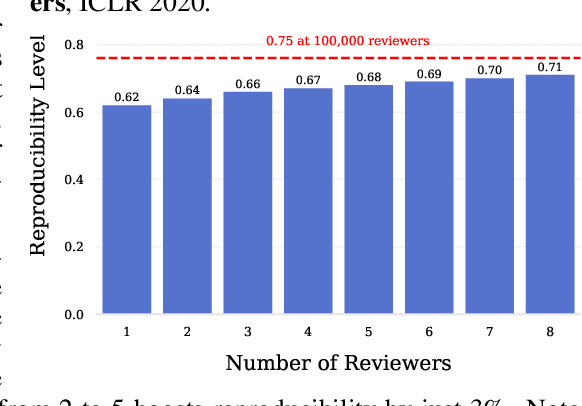

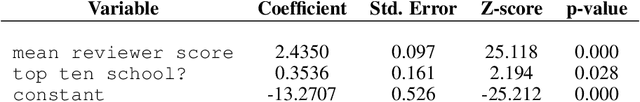

Abstract:Mainstream machine learning conferences have seen a dramatic increase in the number of participants, along with a growing range of perspectives, in recent years. Members of the machine learning community are likely to overhear allegations ranging from randomness of acceptance decisions to institutional bias. In this work, we critically analyze the review process through a comprehensive study of papers submitted to ICLR between 2017 and 2020. We quantify reproducibility/randomness in review scores and acceptance decisions, and examine whether scores correlate with paper impact. Our findings suggest strong institutional bias in accept/reject decisions, even after controlling for paper quality. Furthermore, we find evidence for a gender gap, with female authors receiving lower scores, lower acceptance rates, and fewer citations per paper than their male counterparts. We conclude our work with recommendations for future conference organizers.

An Open Review of OpenReview: A Critical Analysis of the Machine Learning Conference Review Process

Oct 26, 2020

Abstract:Mainstream machine learning conferences have seen a dramatic increase in the number of participants, along with a growing range of perspectives, in recent years. Members of the machine learning community are likely to overhear allegations ranging from randomness of acceptance decisions to institutional bias. In this work, we critically analyze the review process through a comprehensive study of papers submitted to ICLR between 2017 and 2020. We quantify reproducibility/randomness in review scores and acceptance decisions, and examine whether scores correlate with paper impact. Our findings suggest strong institutional bias in accept/reject decisions, even after controlling for paper quality. Furthermore, we find evidence for a gender gap, with female authors receiving lower scores, lower acceptance rates, and fewer citations per paper than their male counterparts. We conclude our work with recommendations for future conference organizers.

A Study of Genetic Algorithms for Hyperparameter Optimization of Neural Networks in Machine Translation

Sep 15, 2020

Abstract:With neural networks having demonstrated their versatility and benefits, the need for their optimal performance is as prevalent as ever. A defining characteristic, hyperparameters, can greatly affect its performance. Thus engineers go through a process, tuning, to identify and implement optimal hyperparameters. That being said, excess amounts of manual effort are required for tuning network architectures, training configurations, and preprocessing settings such as Byte Pair Encoding (BPE). In this study, we propose an automatic tuning method modeled after Darwin's Survival of the Fittest Theory via a Genetic Algorithm (GA). Research results show that the proposed method, a GA, outperforms a random selection of hyperparameters.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge