Keqiang Wang

Hybrid Sequential Recommender via Time-aware Attentive Memory Network

May 18, 2020

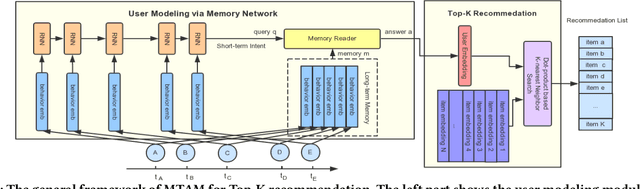

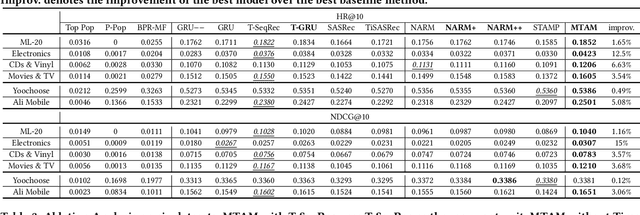

Abstract: Recommendation systems aim to help users discover their most preferred content from an ever-growing corpus of items. Although deep learning has greatly improved recommenders, they still face several challenges: (1) User behaviors are much more complex than words in sentences, so traditional attentive and recurrent models may fail to capture the temporal dynamics of user preferences. (2) Users' preferences are multiple and evolving, so it is difficult to integrate long-term memory and short-term intent. In this paper, we propose a temporal gating methodology that improves the attention mechanism and recurrent units, so that temporal information is considered in both information filtering and state transition. Additionally, we propose a Multi-hop Time-aware Attentive Memory network (MTAM) to integrate long-term and short-term preferences. We use the proposed time-aware GRU network to learn the short-term intent and maintain prior records in user memory. We treat the short-term intent as a query and design a multi-hop memory reading operation, based on the proposed time-aware attention, that generates the user representation from the current intent and long-term memory. Our approach is scalable for candidate retrieval tasks and can be viewed as a non-linear generalization of latent factorization for dot-product-based Top-K recommendation. Finally, we conduct extensive experiments on six benchmark datasets, and the experimental results demonstrate the effectiveness of MTAM and the temporal gating methodology.
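To make the pipeline in the abstract more concrete, the following is a minimal, illustrative sketch of a multi-hop memory read followed by dot-product Top-K retrieval. It is not the paper's implementation: the abstract does not give the form of the temporal gate, so the linear time penalty used here (`decay * gap`), the residual query update, and all function and parameter names (`time_aware_read`, `multi_hop_read`, `top_k`, `decay`, `hops`) are assumptions chosen for illustration. The short-term intent vector, which the paper obtains from a time-aware GRU, is simply passed in as an input.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def time_aware_read(query, memory, gaps, decay=0.1):
    """One attention read over memory slots.

    ASSUMPTION: the temporal gate is modeled as a linear penalty on the
    attention score, proportional to the time gap of each memory slot.
    The paper's actual gating function is not specified in the abstract.
    """
    scores = [dot(query, slot) - decay * gap for slot, gap in zip(memory, gaps)]
    weights = softmax(scores)
    dim = len(query)
    return [sum(w * slot[i] for w, slot in zip(weights, memory)) for i in range(dim)]


def multi_hop_read(intent, memory, gaps, hops=2, decay=0.1):
    """Refine the query over several hops, starting from the short-term intent.

    ASSUMPTION: each hop adds the read vector to the query (residual update).
    """
    query = list(intent)
    for _ in range(hops):
        read = time_aware_read(query, memory, gaps, decay)
        query = [q + r for q, r in zip(query, read)]
    return query


def top_k(user_vec, item_vecs, k):
    """Rank items by dot product with the user representation."""
    ranked = sorted(item_vecs.items(), key=lambda kv: dot(user_vec, kv[1]), reverse=True)
    return [item_id for item_id, _ in ranked[:k]]


# Toy example: two memory slots, the second one observed much longer ago.
memory = [[1.0, 0.0], [0.0, 1.0]]
gaps = [1.0, 30.0]
intent = [0.5, 0.5]
items = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.5, 0.5]}

user = multi_hop_read(intent, memory, gaps)
print(top_k(user, items, 2))
```

In this toy setup the older memory slot receives a much smaller attention weight, so the user representation leans toward the first dimension. Because the final score is a plain dot product between the user vector and item vectors, the same ranking step could be served by a standard maximum inner product search index, which is what makes this style of model suitable for candidate retrieval.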
