Kenji Ose

DuPLO: A DUal view Point deep Learning architecture for time series classificatiOn

Sep 20, 2018

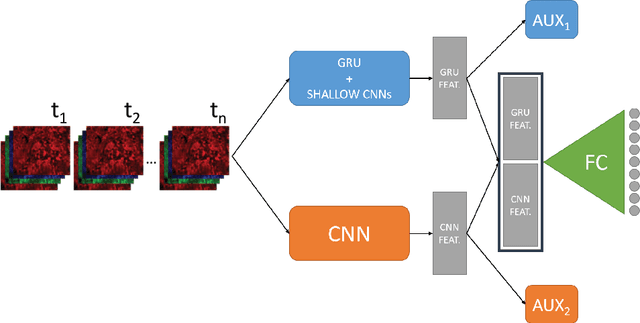

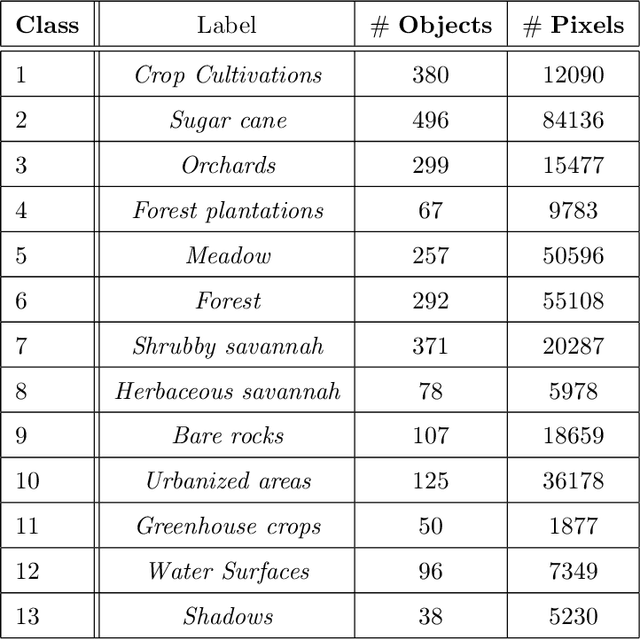

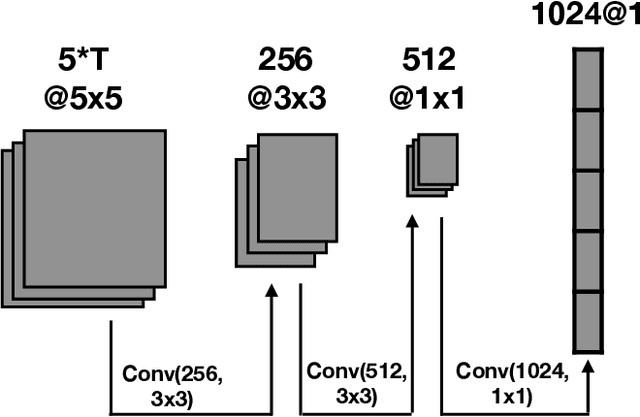

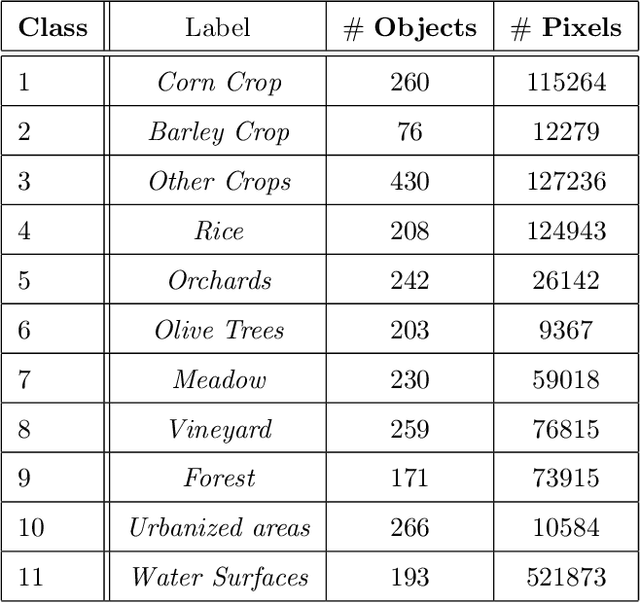

Abstract: Modern Earth Observation systems continuously generate huge amounts of data. A notable example is the Sentinel-2 mission, which provides images at high spatial resolution (up to 10 m) with a high revisit frequency (every 5 days); these images can be organized into Satellite Image Time Series (SITS). While SITS have proved beneficial for Land Use/Land Cover (LULC) map generation, the machine learning approaches commonly used in remote sensing fail to take advantage of the spatio-temporal dependencies present in such data. Recently, a new generation of deep learning methods has significantly advanced research in this field. These approaches have generally focused on a single type of neural network, i.e., Convolutional Neural Networks (CNNs) or Recurrent Neural Networks (RNNs), which model different but complementary information: spatial autocorrelation (CNNs) and temporal dependencies (RNNs). In this work, we propose the first deep learning architecture for the analysis of SITS data, namely DuPLO (DUal view Point deep Learning architecture for time series classificatiOn), that combines convolutional and recurrent neural networks to exploit their complementarity. Our hypothesis is that, since CNNs and RNNs capture different aspects of the data, a combination of both models would produce a more diverse and complete representation of the information for the underlying land cover classification task. Experiments carried out on two study sites with different land cover characteristics (i.e., the Gard site in France and Reunion Island in the Indian Ocean) demonstrate the significance of our proposal.

MRFusion: A Deep Learning architecture to fuse PAN and MS imagery for land cover mapping

Jun 29, 2018

Abstract: Earth Observation systems now provide a multitude of heterogeneous remote sensing data. How to manage such richness while leveraging its complementarity is a crucial challenge in modern remote sensing analysis. Data fusion techniques address this point by proposing methods to combine and exploit the complementarity among the different data sensors. In the case of optical Very High Spatial Resolution (VHSR) imagery, satellites acquire both Multi Spectral (MS) and panchromatic (PAN) images at different spatial resolutions. VHSR images are extensively exploited to produce land cover maps that address agricultural, ecological, and socioeconomic issues, as well as to assess ecosystem status, monitor biodiversity, and provide inputs for food risk monitoring systems. Common techniques to produce land cover maps from such VHSR images typically pansharpen the multi-resolution source first, so that processing can be done at full resolution. Here, we propose a new deep learning architecture that jointly uses PAN and MS imagery for direct classification, without any prior image fusion or resampling process. By managing the spectral information at its native spatial resolution, our method, named MRFusion, aims to avoid the possible information loss induced by pansharpening or any other hand-crafted preprocessing. Moreover, the proposed architecture is designed to learn non-linear transformations of the sources, with the explicit aim of exploiting the complementarity of PAN and MS imagery as much as possible. Experiments are carried out on two real-world scenarios depicting large areas with different land cover characteristics. The characteristics of these scenarios underline the applicability and the generality of our method in operational settings.
