Kenji Ishikawa

SoundSil-DS: Deep Denoising and Segmentation of Sound-field Images with Silhouettes

Nov 12, 2024

Abstract:Development of optical technology has enabled imaging of two-dimensional (2D) sound fields. This acousto-optic sensing enables understanding of the interaction between sound and objects, such as reflection and diffraction. Moreover, it is expected to be used as an advanced measurement technology for sonars in self-driving vehicles and assistive robots. However, the low sound-pressure sensitivity of acousto-optic sensing results in a high level of noise in the images. Therefore, denoising is an essential task to visualize and analyze the sound fields. In addition to denoising, segmentation of the sound field and object silhouettes is also required to analyze interactions between them. In this paper, we propose sound-field-images-with-object-silhouette denoising and segmentation (SoundSil-DS), which jointly performs denoising and segmentation for sound fields and object silhouettes on a visualized image. We developed a new model based on the current state-of-the-art denoising network. We also created a dataset to train and evaluate the proposed method through acoustic simulation. The proposed method was evaluated using both simulated and measured data. We confirmed that our method can be applied to experimentally measured data. These results suggest that the proposed method may improve the post-processing for sound fields, such as physical model-based three-dimensional reconstruction, since it can remove unwanted noise and separate sound fields and other object silhouettes. Our code is available at https://github.com/nttcslab/soundsil-ds.

Acousto-optic reconstruction of exterior sound field based on concentric circle sampling with circular harmonic expansion

Nov 03, 2023

Abstract:Acousto-optic sensing provides an alternative approach to traditional microphone arrays by exploiting the interaction of light with an acoustic field. Sound-field reconstruction is a key technique in acousto-optic sensing. Current challenges in sound-field reconstruction methods pertain to scenarios in which the sound source is located within the reconstruction area, known as the exterior problem. Existing reconstruction algorithms, primarily designed for interior scenarios, often exhibit suboptimal performance when applied to exterior cases. This paper introduces a novel technique for exterior sound-field reconstruction. The proposed method leverages concentric circle sampling and a two-dimensional exterior sound-field reconstruction approach based on circular harmonic expansions. To evaluate the efficacy of this approach, both numerical simulations and practical experiments are conducted. The results highlight the superior accuracy of the proposed method when compared to conventional reconstruction methods, all while utilizing a minimal amount of measured projection data.
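
For orientation, the textbook form of a two-dimensional exterior circular harmonic expansion can be written as below. This is a sketch of the general idea, not the paper's exact formulation: the truncation order $N$, the coefficients $c_n$, the source-region radius $r_0$, and the $e^{-i\omega t}$ time convention are assumptions introduced here for illustration.

```latex
% Outgoing (exterior) 2D sound field outside a source region of radius r_0,
% assuming the e^{-i\omega t} time convention:
p(r, \theta) \approx \sum_{n=-N}^{N} c_n \, H_n^{(1)}(kr) \, e^{i n \theta},
\qquad r > r_0,
% where H_n^{(1)} is the Hankel function of the first kind,
% k = \omega / c is the wavenumber, and c_n are the expansion coefficients.
```

Under the opposite time convention, $H_n^{(2)}$ would take the place of $H_n^{(1)}$. Reconstruction then amounts to estimating the coefficients $c_n$ from the measured data.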

Deep sound-field denoiser: optically-measured sound-field denoising using deep neural network

Apr 27, 2023

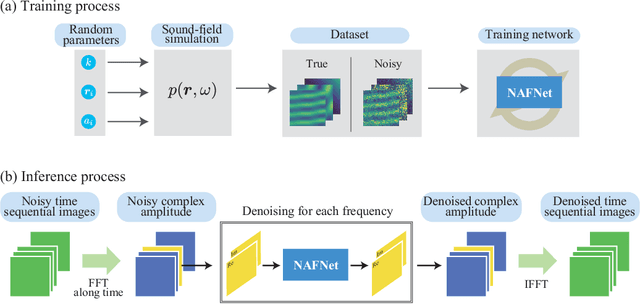

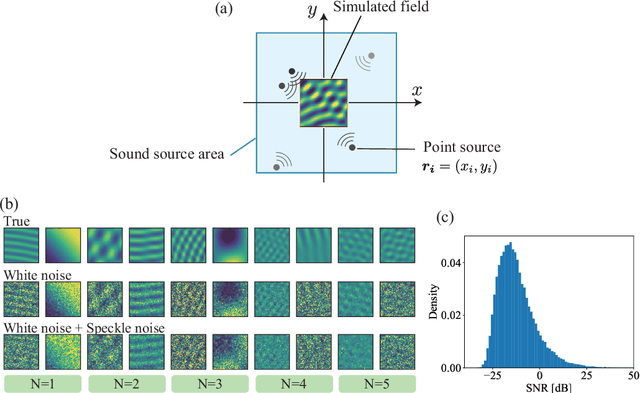

Abstract:This paper proposes a deep sound-field denoiser, a deep neural network (DNN) based denoising of optically measured sound-field images. Sound-field imaging using optical methods has gained considerable attention due to its ability to achieve high-spatial-resolution imaging of acoustic phenomena that conventional acoustic sensors cannot accomplish. However, the optically measured sound-field images are often heavily contaminated by noise because of the low sensitivity of optical interferometric measurements to airborne sound. Here, we propose a DNN-based sound-field denoising method. Time-varying sound-field image sequences are decomposed into harmonic complex-amplitude images by using a time-directional Fourier transform. The complex images are converted into two-channel images consisting of real and imaginary parts and denoised by a nonlinear-activation-free network. The network is trained on a sound-field dataset obtained from numerical acoustic simulations with randomized parameters. We compared the method with conventional ones, such as image filters and a spatiotemporal filter, on numerical and experimental data. The experimental data were measured by parallel phase-shifting interferometry and holographic speckle interferometry. The proposed deep sound-field denoiser significantly outperformed the conventional methods on both the numerical and experimental data.
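
The preprocessing described in the abstract (a Fourier transform along time, then splitting the complex image into real and imaginary channels) can be sketched as follows. This is a minimal pure-Python illustration, not the authors' implementation: the function names, the nested-list data layout, and the `2 / T` scaling are assumptions made here, and a practical version would likely use an array library.

```python
import cmath
import math


def harmonic_complex_amplitude(frames, harmonic_bin):
    """Single-bin DFT along the time axis for every pixel.

    frames: list of T frames, each a list of rows of floats (T x H x W).
    harmonic_bin: integer frequency bin k, assumed to satisfy 0 < k < T / 2.
    Returns an H x W nested list of complex amplitudes. The 2 / T scaling
    (an assumption of this sketch) maps A*cos(2*pi*k*t/T + phi) to
    A*exp(1j*phi).
    """
    n_frames = len(frames)
    height, width = len(frames[0]), len(frames[0][0])
    # Complex exponential for each time step at the chosen bin.
    kernel = [
        cmath.exp(-2j * math.pi * harmonic_bin * t / n_frames)
        for t in range(n_frames)
    ]
    scale = 2.0 / n_frames
    return [
        [
            scale * sum(frames[t][y][x] * kernel[t] for t in range(n_frames))
            for x in range(width)
        ]
        for y in range(height)
    ]


def to_two_channel(complex_image):
    """Split an H x W complex image into [real, imag] channels (2 x H x W)."""
    real = [[value.real for value in row] for row in complex_image]
    imag = [[value.imag for value in row] for row in complex_image]
    return [real, imag]
```

The two-channel output is the kind of input a real-valued image denoising network can take; the network itself is outside the scope of this sketch.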
