Kenji Bekki

SeeingGAN: Galactic image deblurring with deep learning for better morphological classification of galaxies

Mar 28, 2021

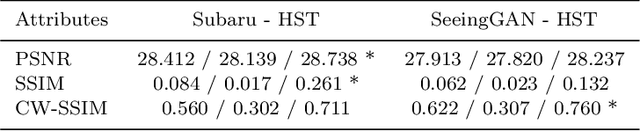

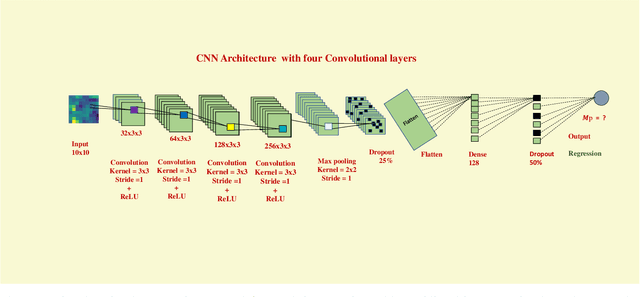

Abstract: Classification of galactic morphologies is a crucial task in galactic astronomy, and identifying fine structures of galaxies (e.g., spiral arms, bars, and clumps) is an essential ingredient in such a classification task. However, seeing effects can blur the images we obtain, making it difficult for astronomers to derive the physical properties of galaxies, distant galaxies in particular. Here, we present a method that converts blurred images obtained by the ground-based Subaru Telescope into quasi Hubble Space Telescope (HST) images via machine learning. Using an existing deep learning method called generative adversarial networks (GANs), we can eliminate seeing effects, effectively producing an image similar to one taken by the HST. Using multiple pairs of Subaru Telescope and HST images, we demonstrate that our model can augment fine structures present in the blurred images to aid better and more precise galactic classification. Using our first-of-its-kind machine learning-based deblurring technique on space images, we obtain up to an 18% improvement in CW-SSIM (Complex Wavelet Structural Similarity Index) score when comparing the Subaru-HST pair with the SeeingGAN-HST pair. With this model, we can generate HST-like images from relatively less capable telescopes, making space exploration more accessible to the broader astronomy community. Furthermore, this model can be used not only in professional morphological classification studies of galaxies but also in all citizen science projects for galaxy classification.

Estimating galaxy masses from kinematics of globular cluster systems: a new method based on deep learning

Feb 03, 2021

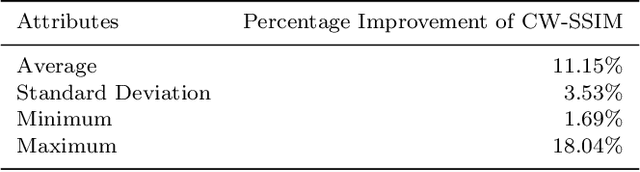

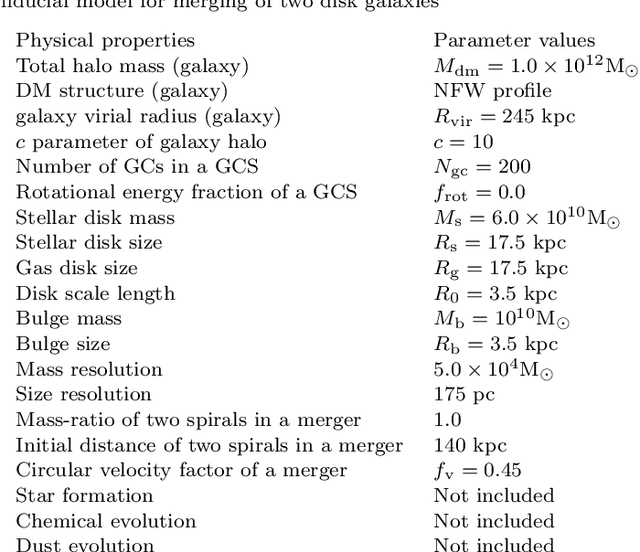

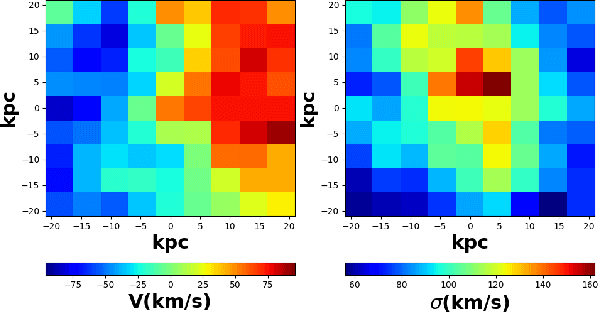

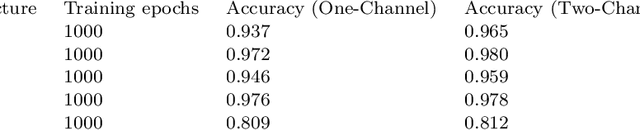

Abstract: We present a new method by which the total masses of galaxies including dark matter can be estimated from the kinematics of their globular cluster systems (GCSs). In the proposed method, we apply convolutional neural networks (CNNs) to the two-dimensional (2D) maps of line-of-sight velocities ($V$) and velocity dispersions ($\sigma$) of GCSs predicted from numerical simulations of disk and elliptical galaxies. In this method, we first train the CNN using a large number ($\sim 200,000$) of synthesized 2D maps, either of $\sigma$ only ("one-channel") or of both $\sigma$ and $V$ ("two-channel"). Then we use the CNN to predict the total masses of galaxies (i.e., test the CNN) for a totally unknown dataset that is not used in training the CNN. The principal results show that the overall accuracy for one-channel and two-channel data is 97.6% and 97.8% respectively, which suggests that the new method is promising. The mean absolute errors (MAEs) for one-channel and two-channel data are 0.288 and 0.275 respectively, and the root mean square errors (RMSEs) are 0.539 and 0.51 for one-channel and two-channel data respectively. These smaller MAEs and RMSEs for two-channel data (i.e., better performance) suggest that the new method can properly consider the global rotation of GCSs in the mass estimation. We stress that the prediction accuracy in the new mass estimation method not only depends on the architectures of CNNs but also can be affected by the introduction of noise in the synthesized images.
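The MAE and RMSE figures quoted in the abstract follow the standard definitions of those metrics. The sketch below shows how such values are typically computed from true and predicted masses. It is not the authors' code: the function names and the sample values are hypothetical, and the abstract does not state the units or scaling of the mass labels.

```python
import math


def mean_absolute_error(y_true, y_pred):
    """Average of |prediction - truth| over all samples."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - t) for t, p in zip(y_true, y_pred)) / len(y_true)


def root_mean_square_error(y_true, y_pred):
    """Square root of the mean squared difference between prediction and truth."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_sq = sum((p - t) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    return math.sqrt(mean_sq)


# Hypothetical mass labels (arbitrary units), for illustration only.
true_mass = [1.0, 2.0, 3.0, 4.0]
pred_mass = [1.5, 2.0, 2.0, 4.5]

mae = mean_absolute_error(true_mass, pred_mass)
rmse = root_mean_square_error(true_mass, pred_mass)
print(f"MAE = {mae:.3f}, RMSE = {rmse:.3f}")
```

RMSE weights large errors more heavily than MAE and is never smaller than it, which is consistent with the reported pairs (0.539 vs 0.288 and 0.51 vs 0.275).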
