Ken Miura

Development of JavaScript-based deep learning platform and application to distributed training

Mar 27, 2017

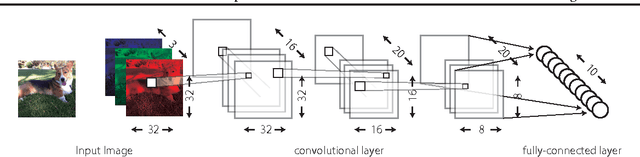

Abstract:Deep learning is increasingly attracting attention for processing big data. Existing frameworks for deep learning must be set up to specialized computer systems. Gaining sufficient computing resources therefore entails high costs of deployment and maintenance. In this work, we implement a matrix library and deep learning framework that uses JavaScript. It can run on web browsers operating on ordinary personal computers and smartphones. Using JavaScript, deep learning can be accomplished in widely diverse environments without the necessity for software installation. Using GPGPU from WebCL framework, our framework can train large scale convolutional neural networks such as VGGNet and ResNet. In the experiments, we demonstrate their practicality by training VGGNet in a distributed manner using web browsers as the client.

Implementation of a Practical Distributed Calculation System with Browsers and JavaScript, and Application to Distributed Deep Learning

Mar 19, 2015

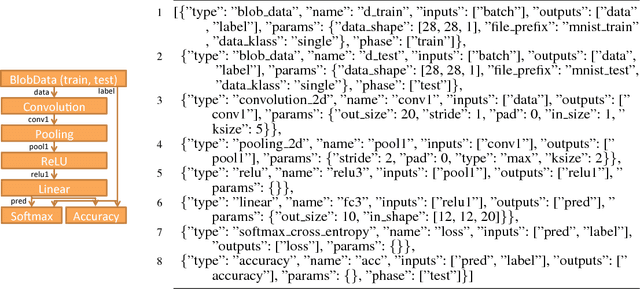

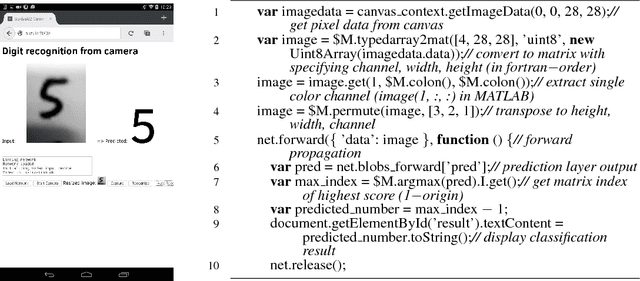

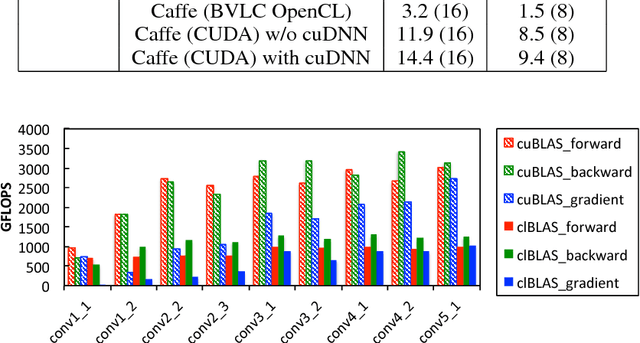

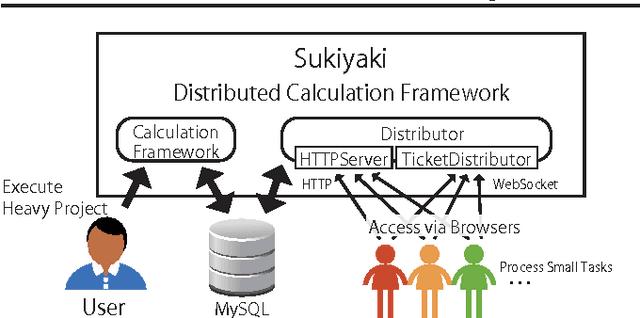

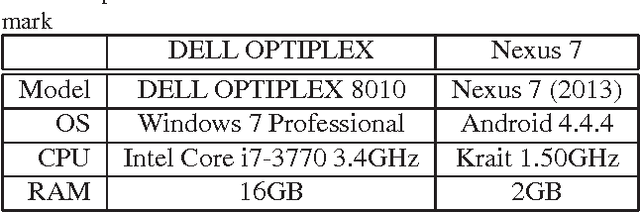

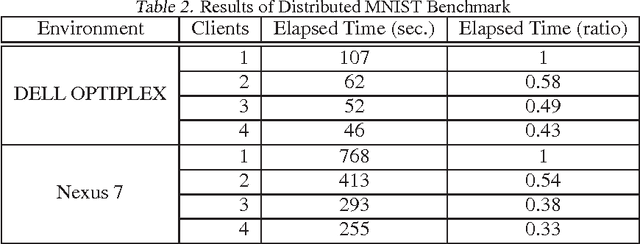

Abstract:Deep learning can achieve outstanding results in various fields. However, it requires so significant computational power that graphics processing units (GPUs) and/or numerous computers are often required for the practical application. We have developed a new distributed calculation framework called "Sashimi" that allows any computer to be used as a distribution node only by accessing a website. We have also developed a new JavaScript neural network framework called "Sukiyaki" that uses general purpose GPUs with web browsers. Sukiyaki performs 30 times faster than a conventional JavaScript library for deep convolutional neural networks (deep CNNs) learning. The combination of Sashimi and Sukiyaki, as well as new distribution algorithms, demonstrates the distributed deep learning of deep CNNs only with web browsers on various devices. The libraries that comprise the proposed methods are available under MIT license at http://mil-tokyo.github.io/.

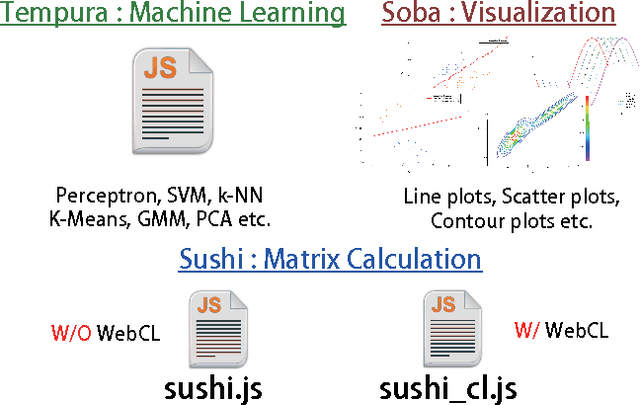

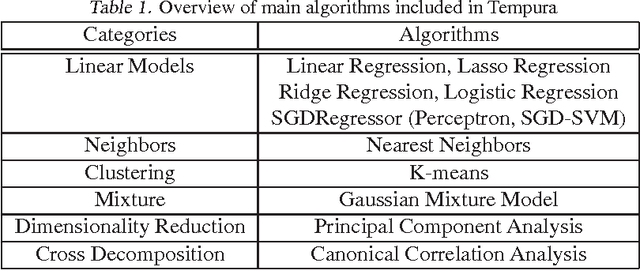

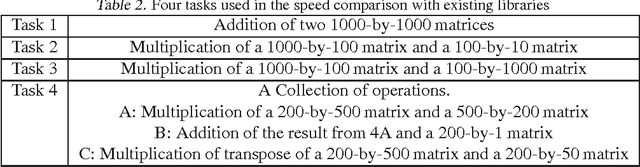

MILJS : Brand New JavaScript Libraries for Matrix Calculation and Machine Learning

Feb 21, 2015

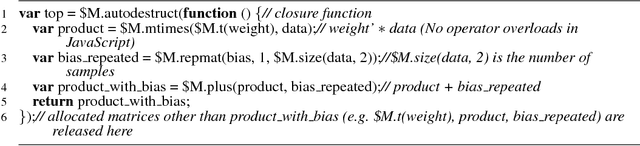

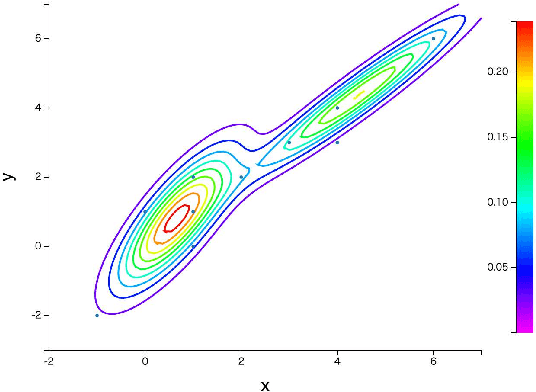

Abstract:MILJS is a collection of state-of-the-art, platform-independent, scalable, fast JavaScript libraries for matrix calculation and machine learning. Our core library offering a matrix calculation is called Sushi, which exhibits far better performance than any other leading machine learning libraries written in JavaScript. Especially, our matrix multiplication is 177 times faster than the fastest JavaScript benchmark. Based on Sushi, a machine learning library called Tempura is provided, which supports various algorithms widely used in machine learning research. We also provide Soba as a visualization library. The implementations of our libraries are clearly written, properly documented and thus can are easy to get started with, as long as there is a web browser. These libraries are available from http://mil-tokyo.github.io/ under the MIT license.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge