Keiki Takadama

Guided Dyna-Q for Mobile Robot Exploration and Navigation

Apr 23, 2020

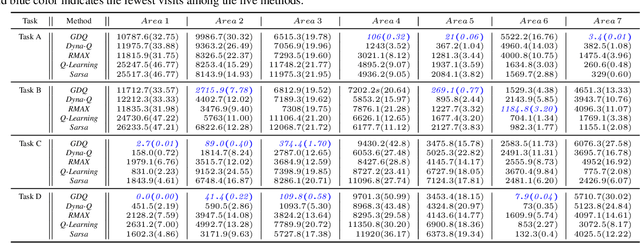

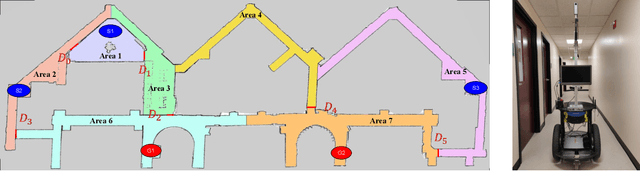

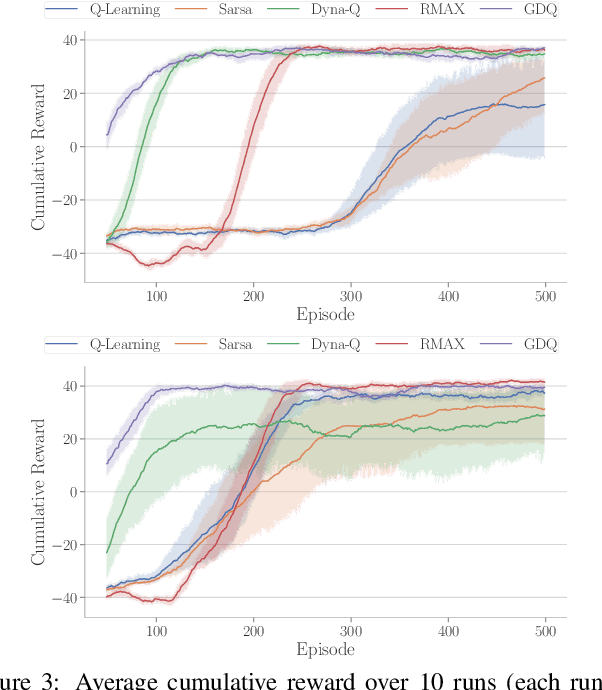

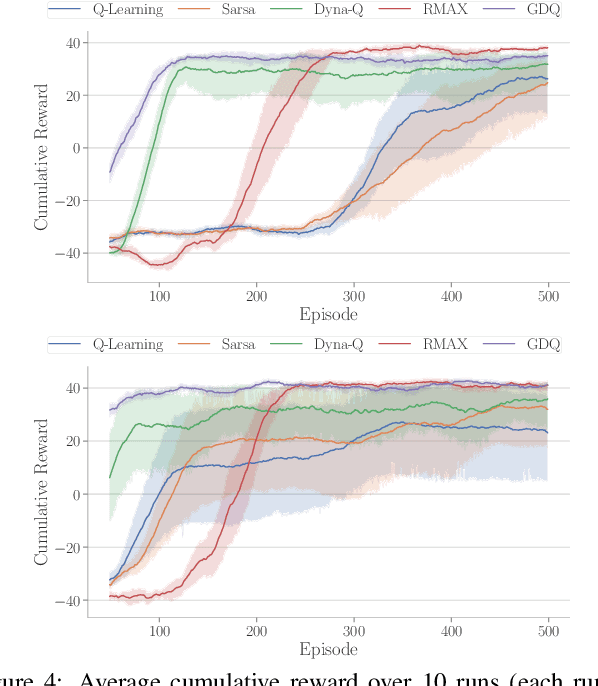

Abstract: Model-based reinforcement learning (RL) enables an agent to learn world models from trial-and-error experiences toward achieving long-term goals. Automated planning, on the other hand, can be used for accomplishing tasks through reasoning with declarative action knowledge. Despite their shared goal of completing complex tasks, RL and automated planning have mainly been developed in isolation due to their different modalities of computation. Focusing on improving the exploration strategy and sample efficiency of model-based RL agents, we develop Guided Dyna-Q (GDQ), which enables RL agents to reason with action knowledge so that they avoid exploring less-relevant states and accomplish tasks more efficiently. GDQ has been evaluated in simulation and on a mobile robot conducting navigation tasks in an office environment. Results show that GDQ reduces the effort in exploration while improving the quality of learned policies.
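The idea of restricting a Dyna-Q learner to actions that prior knowledge deems relevant can be illustrated with a minimal sketch. This is not the authors' implementation: the grid world, the `guided_actions` filter (a Manhattan-distance heuristic standing in for reasoning with declarative action knowledge), and all hyperparameters are assumptions chosen for illustration.

```python
import random
from collections import defaultdict

ACTIONS = [(0, 1), (0, -1), (1, 0), (-1, 0)]  # right, left, down, up
SIZE, GOAL = 5, (4, 4)                         # hypothetical 5x5 grid


def step(state, action):
    """Deterministic grid world: reward -1 per move, 0 on reaching the goal."""
    r = min(max(state[0] + action[0], 0), SIZE - 1)
    c = min(max(state[1] + action[1], 0), SIZE - 1)
    nxt = (r, c)
    return nxt, (0.0 if nxt == GOAL else -1.0), nxt == GOAL


def manhattan(state):
    return abs(state[0] - GOAL[0]) + abs(state[1] - GOAL[1])


def guided_actions(state):
    """Assumed stand-in for planner guidance: keep only actions that
    bring the agent closer to the goal."""
    keep = [a for a in ACTIONS if manhattan(step(state, a)[0]) < manhattan(state)]
    return keep or ACTIONS


def dyna_q(episodes=50, planning_steps=10, alpha=0.5, gamma=0.95,
           eps=0.1, guide=None, seed=0, max_steps=1000):
    """Tabular Dyna-Q; `guide` optionally restricts the actions considered."""
    rng = random.Random(seed)
    q = defaultdict(float)   # Q-values keyed by (state, action)
    model = {}               # learned model: (state, action) -> (reward, next, done)
    allowed = guide or (lambda s: ACTIONS)
    total_steps = 0

    def update(s, a, r, s2, done):
        best_next = 0.0 if done else max(q[(s2, b)] for b in allowed(s2))
        q[(s, a)] += alpha * (r + gamma * best_next - q[(s, a)])

    for _ in range(episodes):
        s, done, steps = (0, 0), False, 0
        while not done and steps < max_steps:
            acts = allowed(s)
            if rng.random() < eps:
                a = rng.choice(acts)                      # explore
            else:
                a = max(acts, key=lambda b: q[(s, b)])    # exploit
            s2, r, done = step(s, a)
            update(s, a, r, s2, done)                     # learn from real experience
            model[(s, a)] = (r, s2, done)
            for _ in range(planning_steps):               # learn from simulated experience
                (ps, pa), (pr, ps2, pdone) = rng.choice(list(model.items()))
                update(ps, pa, pr, ps2, pdone)
            s = s2
            steps += 1
            total_steps += 1
    return q, total_steps
```

Calling `dyna_q(guide=guided_actions)` and `dyna_q()` returns the number of real environment steps each variant used. In this toy setting the filter admits only goal-approaching moves, so every guided episode takes exactly 8 steps, whereas the unguided learner also spends steps on moves the filter would have excluded.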
