Kaustubh Mhaisekar

Aikyam: A Video Conferencing Utility for Deaf and Dumb

Dec 10, 2023Abstract:With the advent of the pandemic, the use of video conferencing platforms as a means of communication has greatly increased and with it, so have the remote opportunities. The deaf and dumb have traditionally faced several issues in communication, but now the effect is felt more severely. This paper proposes an all-encompassing video conferencing utility that can be used with existing video conferencing platforms to address these issues. Appropriate semantically correct sentences are generated from the signer's gestures which would be interpreted by the system. Along with an audio to emit this sentence, the user's feed is also used to annotate the sentence. This can be viewed by all participants, thus aiding smooth communication with all parties involved. This utility utilizes a simple LSTM model for classification of gestures. The sentences are constructed by a t5 based model. In order to achieve the required data flow, a virtual camera is used.

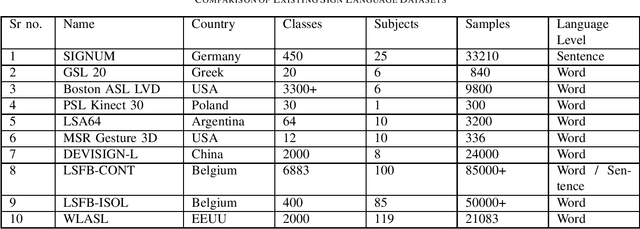

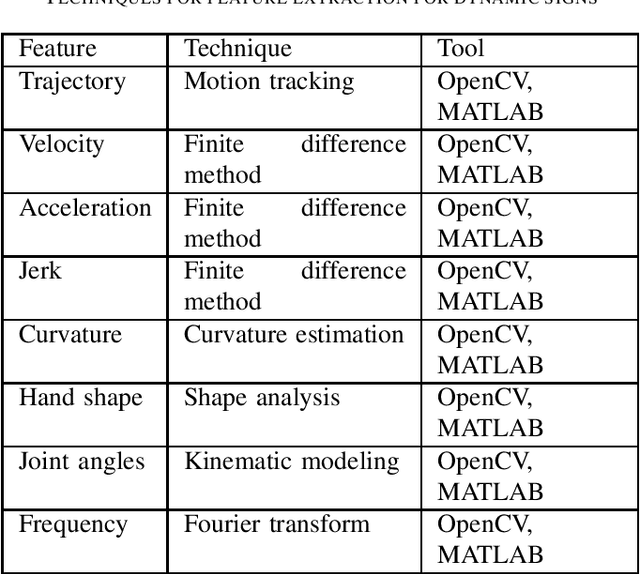

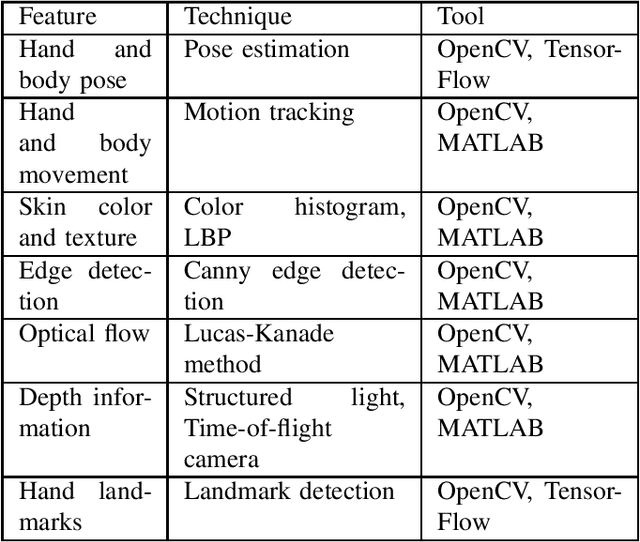

Study and Survey on Gesture Recognition Systems

Dec 01, 2023

Abstract:In recent years, there has been a considerable amount of research in the Gesture Recognition domain, mainly owing to the technological advancements in Computer Vision. Various new applications have been conceptualised and developed in this field. This paper discusses the implementation of gesture recognition systems in multiple sectors such as gaming, healthcare, home appliances, industrial robots, and virtual reality. Different methodologies for capturing gestures are compared and contrasted throughout this survey. Various data sources and data acquisition techniques have been discussed. The role of gestures in sign language has been studied and existing approaches have been reviewed. Common challenges faced while building gesture recognition systems have also been explored.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge