Karla Saldana Ochoa

Enhancing Demand-Oriented Regionalization with Agentic AI and Local Heterogeneous Data for Adaptation Planning

Nov 13, 2025

Abstract: Conventional planning units or urban regions, such as census tracts, zip codes, or neighborhoods, often do not capture the specific demands of local communities and lack the flexibility to implement effective strategies for hazard prevention or response. To support the creation of dynamic planning units, we introduce a planning support system with agentic AI that enables users to generate demand-oriented regions for disaster planning, integrating the human-in-the-loop principle for transparency and adaptability. The platform is built on a representative initialized spatially constrained self-organizing map (RepSC-SOM), extending traditional SOM with adaptive geographic filtering and region-growing refinement, while AI agents can reason, plan, and act to guide the process by suggesting input features, guiding spatial constraints, and supporting interactive exploration. We demonstrate the capabilities of the platform through a case study on the flooding-related risk in Jacksonville, Florida, showing how it allows users to explore, generate, and evaluate regionalization interactively, combining computational rigor with user-driven decision making.

Creating A Coefficient of Change in the Built Environment After a Natural Disaster

Nov 09, 2021

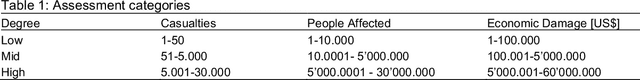

Abstract: This study proposes a novel method for assessing damage to the built environment, using a deep learning workflow to quantify it. An automated crawler collected aerial images from Google Earth taken before and after natural disasters at 50 epicenters worldwide, producing a database of 10,000 aerial images with a spatial resolution of 2 m per pixel. The study uses the Seg-Net algorithm, one of the most popular and general CNN architectures for image segmentation, to perform semantic segmentation of the built environment in the images from both instances (before and after the disaster). The Seg-Net model reached a segmentation accuracy of 92%. After segmentation, we compared the two cases and expressed the disparity as a percentage of change. This coefficient of change numerically represents the overall damage to the built environment of an urban area. Such an index can give governments an estimate of the number of affected households and, potentially, the extent of housing damage.
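The abstract does not give the exact formula for the coefficient of change. As a minimal sketch, assuming it is the relative loss of built-up pixels between the pre- and post-disaster segmentation masks, it could be computed as follows. The function name `coefficient_of_change` and the toy masks are hypothetical and not taken from the paper.

```python
import numpy as np

def coefficient_of_change(mask_before, mask_after):
    """Percentage of built-up pixels lost between two binary masks.

    Assumption: the coefficient is (before - after) / before * 100,
    where each count is the number of pixels labelled "built".
    """
    before = np.asarray(mask_before, dtype=bool)
    after = np.asarray(mask_after, dtype=bool)
    if before.shape != after.shape:
        raise ValueError("masks must have the same shape")
    built_before = int(before.sum())
    if built_before == 0:
        raise ValueError("no built-up pixels in the pre-disaster mask")
    built_after = int(after.sum())
    return 100.0 * (built_before - built_after) / built_before

# Toy 4x4 masks (1 = built environment, 0 = everything else).
before = np.array([[1, 1, 0, 0],
                   [1, 1, 0, 0],
                   [1, 1, 1, 1],
                   [0, 0, 1, 1]])
after = np.array([[1, 0, 0, 0],
                  [1, 1, 0, 0],
                  [0, 0, 1, 1],
                  [0, 0, 1, 1]])

change = coefficient_of_change(before, after)
print(f"coefficient of change: {change:.1f}%")
```

In this toy case 10 built-up pixels drop to 7, so the sketch reports a 30% change. A real pipeline would obtain the two masks from the segmentation model rather than from hand-written arrays.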

A Machine learning approach for rapid disaster response based on multi-modal data. The case of housing & shelter needs

Aug 09, 2021

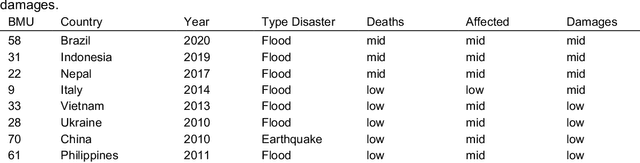

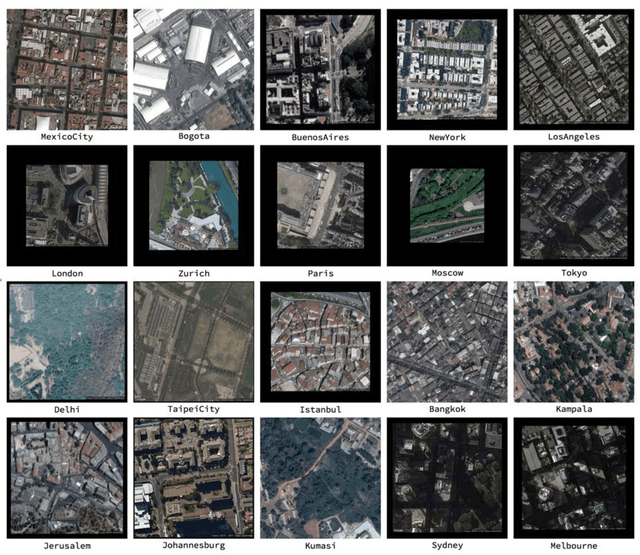

Abstract: Along with climate change, more frequent extreme events, such as flooding and tropical cyclones, threaten the livelihoods and wellbeing of poor and vulnerable populations. One of the most immediate needs of people affected by a disaster is finding shelter. While the proliferation of data on disasters is already helping to save lives, identifying damage to buildings, assessing shelter needs, and finding appropriate places to establish emergency shelters or settlements require a wide range of data to be combined rapidly. To address this gap and make headway toward comprehensive assessments, this paper proposes a machine learning workflow that fuses and rapidly analyses multimodal data. The workflow is built around open, online data to ensure scalability and broad accessibility. Based on a database of 19 characteristics for more than 200 disasters worldwide, a fusion approach at the decision level was used. This technique allows the collected multimodal data to share a common semantic space that facilitates the prediction of individual variables. Each fused numerical vector was fed into an unsupervised clustering algorithm, the Self-Organizing Map (SOM). The trained SOM serves as a predictor for future cases, allowing the prediction of consequences such as total deaths, total people affected, and total damage, and it provides specific recommendations for assessments in the shelter and housing sector. To make such a prediction, a satellite image from before the disaster and the geographic and demographic conditions are shown to the trained model, which achieved a prediction accuracy of 62%.
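One common way to use a trained SOM as a predictor is to find the best-matching unit (BMU) using only the features that are known, then read the unknown features from that unit's weight vector. The sketch below illustrates this idea with a small NumPy SOM on synthetic three-feature data. It is an assumption about how such a predictor can work, not the paper's implementation; `train_som`, `predict_missing`, the grid size, and the data are all hypothetical.

```python
import numpy as np

def train_som(data, rows=4, cols=4, epochs=100, lr0=0.5, sigma0=2.0, seed=0):
    """Train a small rectangular SOM with online updates."""
    rng = np.random.default_rng(seed)
    n, dim = data.shape
    weights = rng.random((rows * cols, dim))
    # Grid coordinates of each unit, used by the neighbourhood function.
    grid = np.array([(r, c) for r in range(rows) for c in range(cols)],
                    dtype=float)
    total_steps = epochs * n
    step = 0
    for _ in range(epochs):
        for x in data[rng.permutation(n)]:
            frac = step / total_steps
            lr = lr0 * (1.0 - frac)                 # decaying learning rate
            sigma = sigma0 * (1.0 - frac) + 1e-3    # shrinking neighbourhood
            bmu = np.argmin(((weights - x) ** 2).sum(axis=1))
            dist2 = ((grid - grid[bmu]) ** 2).sum(axis=1)
            h = np.exp(-dist2 / (2.0 * sigma ** 2))
            weights += lr * h[:, None] * (x - weights)
            step += 1
    return weights

def predict_missing(weights, known_values, known_idx):
    """Find the BMU on the known features and return its full weight vector."""
    known_idx = np.asarray(known_idx)
    diff = weights[:, known_idx] - np.asarray(known_values, dtype=float)
    bmu = int(np.argmin((diff ** 2).sum(axis=1)))
    return bmu, weights[bmu]

# Synthetic data: features 0 and 1 are "known before the event",
# feature 2 is the outcome to predict. Two well-separated groups.
rng = np.random.default_rng(1)
low = 0.1 + 0.03 * rng.standard_normal((20, 3))
high = 0.9 + 0.03 * rng.standard_normal((20, 3))
data = np.vstack([low, high])

weights = train_som(data)
_, pred_high = predict_missing(weights, [0.9, 0.9], [0, 1])
_, pred_low = predict_missing(weights, [0.1, 0.1], [0, 1])
print("predicted outcome (high group):", round(float(pred_high[2]), 2))
print("predicted outcome (low group):", round(float(pred_low[2]), 2))
```

Matching on a subset of dimensions keeps the map itself unsupervised: the outcome variables take part in training but are simply ignored when locating the BMU for a new case.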

Indexical Cities: Articulating Personal Models of Urban Preference with Geotagged Data

Jan 23, 2020

Abstract: How can one assess the potential of liking a city or a neighborhood before ever having been there? Until now, the concept of urban quality has pertained either to global city rankings, where cities are evaluated against a grid of given parameters, or to empirical and sociological approaches, which are often constrained by the amount of available information. Using state-of-the-art machine learning techniques and thousands of geotagged satellite and perspective images from diverse urban cultures, this research characterizes personal preference in urban spaces and predicts a spectrum of unknown likeable places for a specific observer. Unlike most urban perception studies, our intention is not to provide an objective measure of urban quality, but rather to portray personal views of the city, or Cities of Indexes.
