A Machine learning approach for rapid disaster response based on multi-modal data. The case of housing & shelter needs

Aug 09, 2021

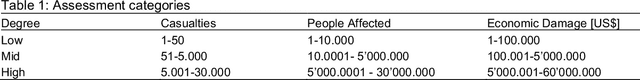

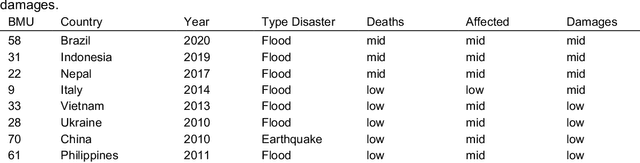

As climate change advances, more frequent extreme events such as flooding and tropical cyclones threaten the livelihoods and wellbeing of poor and vulnerable populations. One of the most immediate needs of people affected by a disaster is finding shelter. While the proliferation of data on disasters is already helping to save lives, identifying damage to buildings, assessing shelter needs, and finding appropriate places to establish emergency shelters or settlements require a wide range of data to be combined rapidly. To address this gap and make headway toward comprehensive assessments, this paper proposes a machine learning workflow that aims to fuse and rapidly analyse multimodal data. The workflow is built around open and online data to ensure scalability and broad accessibility. Based on a database of 19 characteristics for more than 200 disasters worldwide, a fusion approach at the decision level was used. This technique allows the collected multimodal data to share a common semantic space that facilitates the prediction of individual variables. Each fused numerical vector was fed into an unsupervised clustering algorithm, the Self-Organizing Map (SOM). The trained SOM serves as a predictor for future cases: it predicts consequences such as total deaths, total people affected, and total damage, and provides specific recommendations for assessments in the shelter and housing sector. To make such a prediction, a satellite image from before the disaster and the geographic and demographic conditions are presented to the trained model, which achieved a prediction accuracy of 62%.
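The idea of using a trained SOM as a predictor can be illustrated with a minimal NumPy sketch. This is not the authors' implementation, which the abstract does not describe in detail. It assumes the multimodal inputs have already been fused into numerical vectors scaled to roughly [0, 1]; the function names `train_som`, `best_matching_unit`, and `predict_missing`, the grid size, and the learning-rate and neighbourhood schedules are all hypothetical choices made for illustration. Prediction here means finding the best-matching map node using only the known features of a new case and reading the unknown features off that node's weight vector.

```python
import numpy as np


def best_matching_unit(weights, x):
    """Return the (row, col) index of the node whose weights are closest to x."""
    dist = np.linalg.norm(weights - x, axis=-1)
    return np.unravel_index(np.argmin(dist), dist.shape)


def train_som(data, rows=4, cols=4, epochs=50, lr0=0.5, sigma0=2.0, seed=0):
    """Train a small rectangular SOM (illustrative defaults, not from the paper)."""
    rng = np.random.default_rng(seed)
    n, dim = data.shape
    weights = rng.random((rows, cols, dim))
    # Grid coordinates of every node, shape (rows, cols, 2).
    grid = np.stack(
        np.meshgrid(np.arange(rows), np.arange(cols), indexing="ij"), axis=-1
    )
    total_steps = epochs * n
    step = 0
    for _ in range(epochs):
        for idx in rng.permutation(n):
            x = data[idx]
            frac = step / total_steps
            lr = lr0 * (1.0 - frac)                  # linearly decaying learning rate
            sigma = sigma0 * (1.0 - frac) + 1e-3     # linearly shrinking neighbourhood
            bmu = best_matching_unit(weights, x)
            # Squared grid distance of every node to the best-matching unit.
            dist2 = np.sum((grid - np.array(bmu)) ** 2, axis=-1)
            h = np.exp(-dist2 / (2.0 * sigma ** 2))  # Gaussian neighbourhood function
            weights += lr * h[..., None] * (x - weights)
            step += 1
    return weights


def predict_missing(weights, x, known_mask):
    """Match on the known features only, then read the unknown ones from the node."""
    dist = np.linalg.norm(weights[..., known_mask] - x[known_mask], axis=-1)
    bmu = np.unravel_index(np.argmin(dist), dist.shape)
    return weights[bmu][~known_mask]
```

In a setting like the one the abstract describes, the known features would be the pre-disaster satellite image representation and the geographic and demographic conditions, and the masked-out features would be outcome variables such as total deaths, total people affected, and total damage. Entries of `x` at masked-out positions are ignored, so any placeholder value can be used there.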
