Karell Bertet

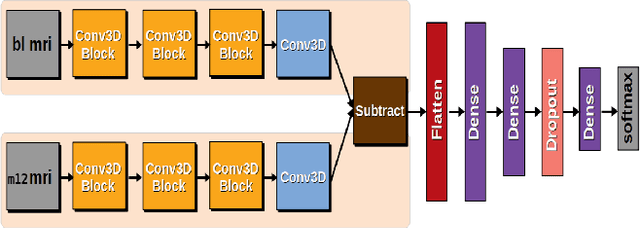

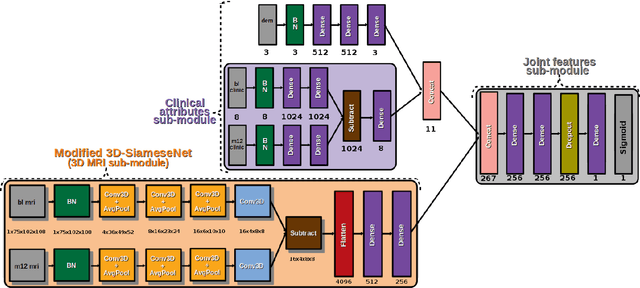

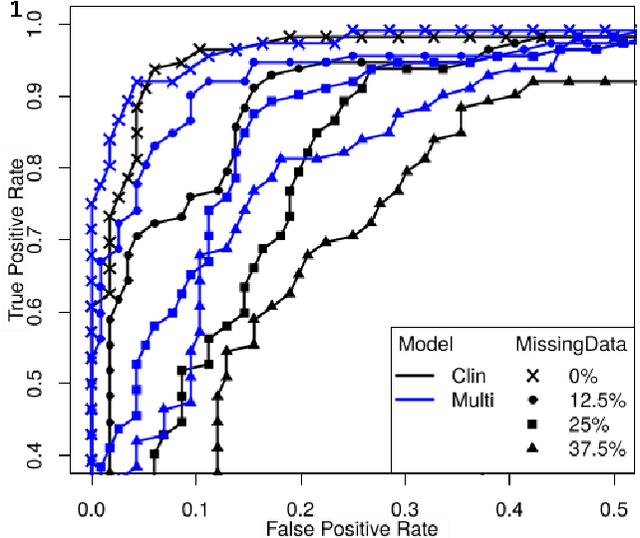

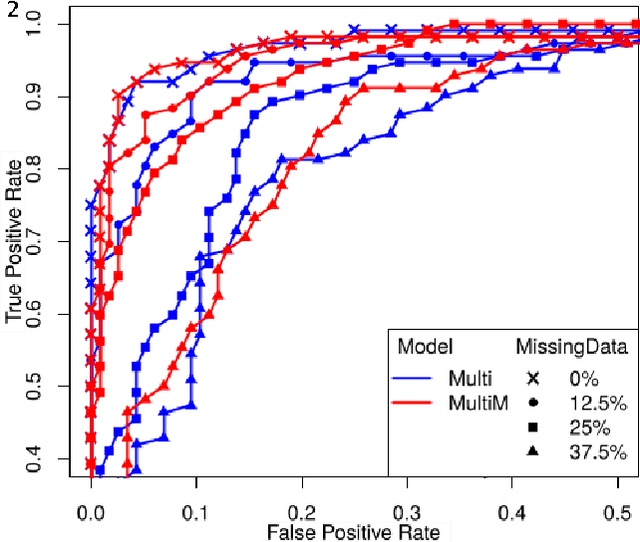

Predicting Brain Degeneration with a Multimodal Siamese Neural Network

Nov 02, 2020

Abstract: To study neurodegenerative diseases, longitudinal studies are carried out on volunteer patients. Over a period of several months to several years, these patients attend regular medical visits where data are acquired from different modalities, such as biological samples, cognitive tests, and structural and functional imaging. These variables are heterogeneous, but they all depend on the patient's health condition, so there may be unknown relationships between the modalities. Some information may be specific to one modality, some may be complementary across modalities, and some may be redundant. Some data may also be missing. In this work, we present a neural network architecture for multimodal learning that uses imaging and clinical data from two time points to predict the evolution of a neurodegenerative disease and is robust to missing values. Our multimodal network achieves 92.5% accuracy and an AUC score of 0.978 on a test set of 57 subjects. We also show that the multimodal architecture outperforms a model using only the clinical modality when up to 37.5% of the test subjects' clinical measurements are missing.
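The abstract describes the architecture only at a high level. As a rough illustration of the Siamese idea, the following sketch applies one shared-weight encoder per modality to both visits, compares the two embeddings through their difference, and maps the result to a progression probability. Everything here is an assumption for illustration only: the function names (`encode`, `siamese_score`, `init`), the layer sizes, the zero-fill-plus-mask handling of missing values, and the random untrained weights are hypothetical and do not reproduce the authors' model.

```python
import math
import random


def dense(x, weights, bias):
    """Fully connected layer with tanh activation (one output per weight row)."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, bias)]


def encode(features, weights, bias):
    """Encode one modality; None marks a missing value.

    Assumed strategy: missing values are zero-filled and a 0/1 mask is
    appended so the layer can tell "missing" apart from a true zero.
    """
    values = [0.0 if v is None else float(v) for v in features]
    mask = [0.0 if v is None else 1.0 for v in features]
    return dense(values + mask, weights, bias)


def siamese_score(visit_t0, visit_t1, encoders, head_w, head_b):
    """Progression probability from two visits (hypothetical architecture)."""
    diffs = []
    for name, (enc_w, enc_b) in encoders.items():
        # Siamese weight sharing: the same (enc_w, enc_b) encodes both time points.
        z0 = encode(visit_t0[name], enc_w, enc_b)
        z1 = encode(visit_t1[name], enc_w, enc_b)
        diffs.extend(a - c for a, c in zip(z1, z0))
    # Linear head on the concatenated per-modality differences, then a sigmoid.
    logit = sum(w * d for w, d in zip(head_w, diffs)) + head_b
    return 1.0 / (1.0 + math.exp(-logit))


# Usage with random, untrained weights (hypothetical sizes).
rng = random.Random(0)


def init(n_out, n_in):
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


# Each encoder sees 2 * n_features inputs (values + mask) and outputs 2 numbers.
encoders = {"imaging": init(2, 2 * 4), "clinical": init(2, 2 * 3)}
head_w = [rng.uniform(-1.0, 1.0) for _ in range(4)]
t0 = {"imaging": [0.1, 0.4, 0.3, 0.2], "clinical": [0.9, None, 0.5]}
t1 = {"imaging": [0.1, 0.5, 0.2, 0.2], "clinical": [0.8, 0.7, None]}
p = siamese_score(t0, t1, encoders, head_w, 0.0)
print(f"progression probability (untrained): {p:.3f}")
```

Because the encoder weights are shared across time points, two identical visits yield a zero difference vector, so with a zero head bias this untrained model returns exactly 0.5; training would fit the weights on labeled progression data.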

Next Priority Concept: A new and generic algorithm computing concepts from complex and heterogeneous data

Dec 20, 2019

Abstract: In this article, we present a new data-type-agnostic algorithm that computes a concept lattice from heterogeneous and complex data. Our NextPriorityConcept algorithm is first introduced and proved in the binary case, as an extension of Bordat's algorithm that adds strategies for selecting only some of the predecessors of each concept, which avoids generating unreasonably large lattices. The algorithm is then extended to any type of data in a generic way. It is inspired by pattern structure theory, where data are locally described by predicates independent of their types, allowing the management of heterogeneous data.
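In the binary case, one step of Bordat's algorithm can be sketched as follows: for a concept (A, B), every attribute m outside B yields a candidate extent A ∩ m', and the inclusion-maximal candidates are exactly the extents of the concept's immediate predecessors. This minimal sketch is a plain Bordat-style top-down traversal, not the NextPriorityConcept algorithm itself; the `keep` predicate is a hypothetical hook standing in for the paper's predecessor-selection strategies, and all function names are illustrative.

```python
from collections import deque


def intent_of(extent, context, attributes):
    """Attributes shared by every object of `extent` (the derivation A')."""
    common = set(attributes)
    for g in extent:
        common &= context[g]
    return frozenset(common)


def lower_covers(extent, intent, context, attributes):
    """Bordat-style step: immediate predecessors of the concept (extent, intent).

    Candidate extents are A ∩ m' for every attribute m outside the intent;
    the inclusion-maximal candidates are the extents of the lower covers.
    """
    candidates = {frozenset(g for g in extent if m in context[g])
                  for m in attributes - intent}
    maximal = [c for c in candidates if not any(c < d for d in candidates)]
    return [(c, intent_of(c, context, attributes)) for c in maximal]


def concept_lattice(context, keep=lambda concept: True):
    """Generate concepts top-down from (G, G').

    `keep` is a hypothetical pruning hook: predecessors it rejects are
    neither recorded nor expanded, so the result may be a partial order.
    """
    objects = frozenset(context)
    attributes = frozenset().union(*context.values())
    top = (objects, intent_of(objects, context, attributes))
    seen, edges, queue = {top}, [], deque([top])
    while queue:
        concept = queue.popleft()
        for pred in lower_covers(*concept, context, attributes):
            if not keep(pred):
                continue
            edges.append((concept, pred))
            if pred not in seen:
                seen.add(pred)
                queue.append(pred)
    return seen, edges


# Usage on a toy binary context: object -> set of attributes.
context = {
    "g1": {"a", "b"},
    "g2": {"b", "c"},
    "g3": {"a", "b", "c"},
}
concepts, edges = concept_lattice(context)
for extent, intent in sorted(concepts, key=lambda c: -len(c[0])):
    print(sorted(extent), sorted(intent))
```

On this three-object context the traversal finds four concepts: the top ({g1, g2, g3}, {b}), the two concepts ({g1, g3}, {a, b}) and ({g2, g3}, {b, c}), and the bottom ({g3}, {a, b, c}). Passing, for example, `keep=lambda c: len(c[0]) >= 2` prunes the bottom concept, which mimics in a very crude way the idea of generating only part of the lattice.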
