Karan Nathwani

Indian Institute of Technology Jammu

Computing Optimal Location of Microphone for Improved Speech Recognition

Mar 24, 2022

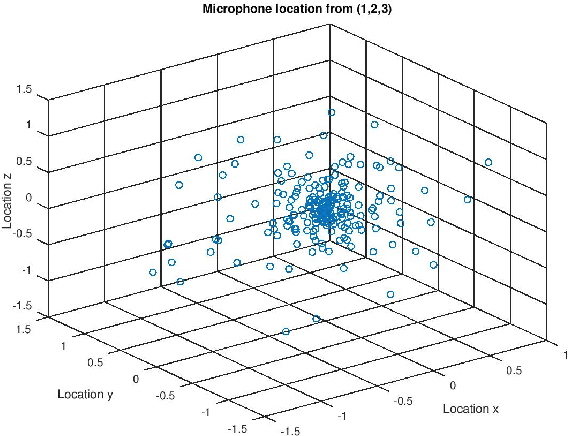

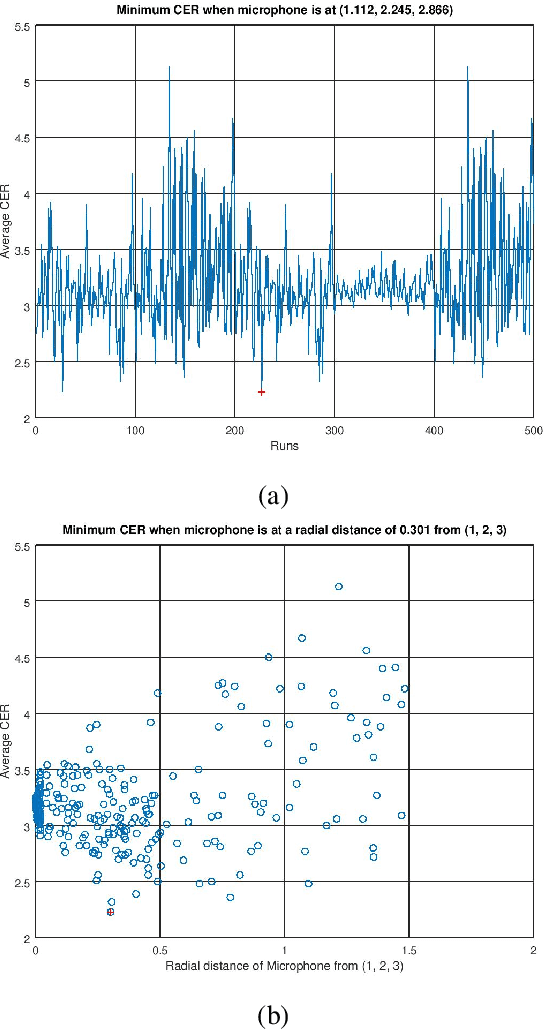

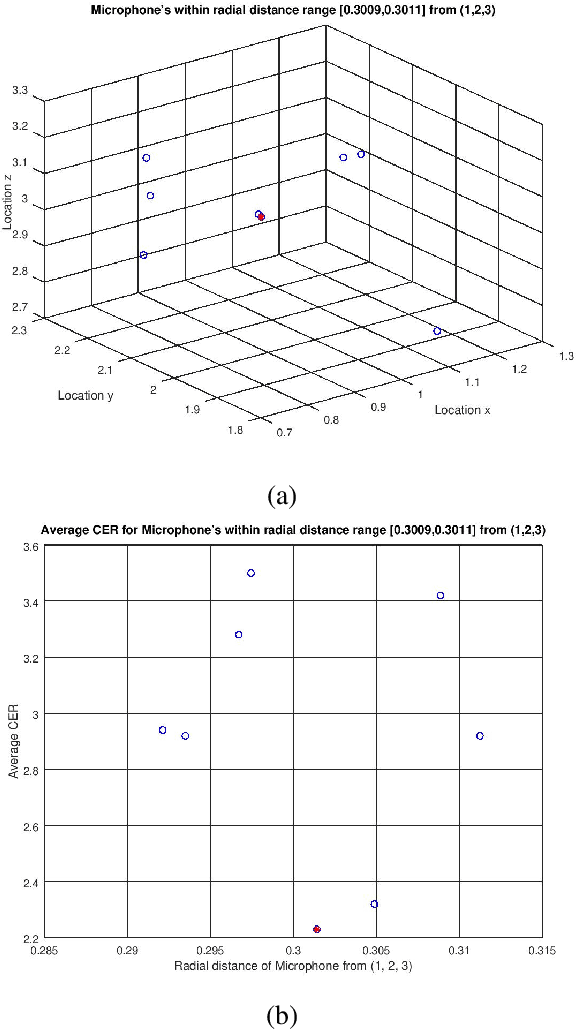

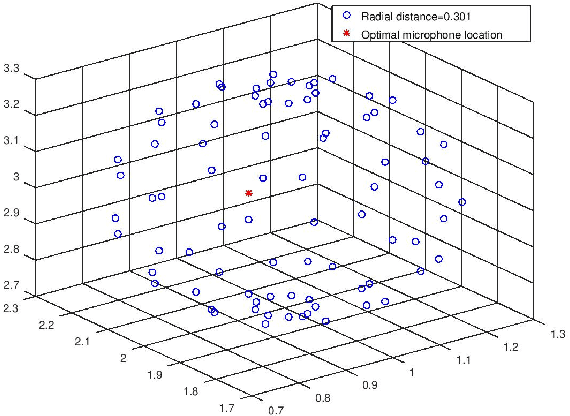

Abstract: Our earlier work showed that measurement error in the microphone position affects the room impulse response (RIR), which in turn affects both single-channel close-microphone and multi-channel distant-microphone speech recognition. In this paper, as an extension, we systematically study how to identify the optimal location of the microphone, given an approximate and hence erroneous location of the microphone in 3D space. The primary idea is to use a Monte Carlo technique to generate a large number of random microphone positions around the erroneous microphone position and select the position that results in the best performance of a general-purpose automatic speech recognition (gp-asr) system. We experiment with clean and noisy speech and show that the optimal location of the microphone is unique and is affected by noise.
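The search described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the cubic sampling region, the `radius` and `n_samples` values, and especially `toy_score` (a stand-in for running a gp-asr system on speech convolved with the RIR at each candidate position) are all assumptions made for illustration.

```python
import random

def monte_carlo_mic_search(approx_pos, score_fn, radius=0.1, n_samples=1000, seed=0):
    """Sample candidate positions around approx_pos and keep the best one.

    approx_pos: (x, y, z) of the approximate (erroneous) microphone position.
    score_fn:   hypothetical callable returning a score for a position,
                higher is better (e.g. ASR accuracy in a real setup).
    radius:     assumed half-width of the cubic sampling region.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    best_pos = tuple(approx_pos)
    best_score = score_fn(best_pos)
    for _ in range(n_samples):
        # Perturb each coordinate uniformly within +/- radius.
        candidate = tuple(c + rng.uniform(-radius, radius) for c in approx_pos)
        score = score_fn(candidate)
        if score > best_score:
            best_pos, best_score = candidate, score
    return best_pos, best_score

def toy_score(pos, target=(1.0, 2.0, 1.5)):
    """Stand-in for ASR performance: peaks at a hypothetical target position."""
    return -sum((p - t) ** 2 for p, t in zip(pos, target))

approx = (1.05, 1.95, 1.52)
best_pos, best_score = monte_carlo_mic_search(approx, toy_score)
print(best_pos, best_score)
```

In a real experiment, `score_fn` would be far more expensive than the toy function here, so the number of samples trades search quality against the cost of repeated recognition runs.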

Using Deep Learning Techniques and Inferential Speech Statistics for AI Synthesised Speech Recognition

Jul 23, 2021

Abstract: Recent developments in technology have rewarded us with amazing audio synthesis models such as TACOTRON and WAVENETS. On the other hand, these models pose greater threats, such as speech clones and deep fakes, that may go undetected. To tackle these alarming situations, there is an urgent need for models that can discriminate synthesized speech from actual human speech and also identify the source of the synthesis. Here, we propose a model based on a Convolutional Neural Network (CNN) and a Bidirectional Recurrent Neural Network (BiRNN) that achieves both of these objectives. The temporal dependencies present in AI-synthesized speech are exploited using the Bidirectional RNN and CNN. The model outperforms state-of-the-art approaches, distinguishing AI-synthesized audio from real human speech with an error rate of 1.9% and detecting the underlying architecture with an accuracy of 97%.
