Kapil Vaswani

ExclaveFL: Providing Transparency to Federated Learning using Exclaves

Dec 13, 2024

Abstract: In federated learning (FL), data providers jointly train a model without disclosing their training data. Despite its privacy benefits, a malicious data provider can simply deviate from the correct training protocol without being detected, thus attacking the trained model. While current solutions have explored the use of trusted execution environments (TEEs) to combat such attacks, there is a mismatch with the security needs of FL: TEEs offer confidentiality guarantees, which are unnecessary for FL and make them vulnerable to side-channel attacks, and focus on coarse-grained attestation, which does not capture the execution of FL training. We describe ExclaveFL, an FL platform that achieves end-to-end transparency and integrity for detecting attacks. ExclaveFL achieves this by employing a new hardware security abstraction, exclaves, which focus on integrity-only guarantees. ExclaveFL uses exclaves to protect the execution of FL tasks, while generating signed statements containing fine-grained, hardware-based attestation reports of task execution at runtime. ExclaveFL then enables auditing using these statements to construct an attested dataflow graph and then check that the FL training job satisfies claims, such as the absence of attacks. Our experiments show that ExclaveFL introduces less than 9% overhead while detecting a wide range of attacks.

Confidential Machine Learning within Graphcore IPUs

May 20, 2022

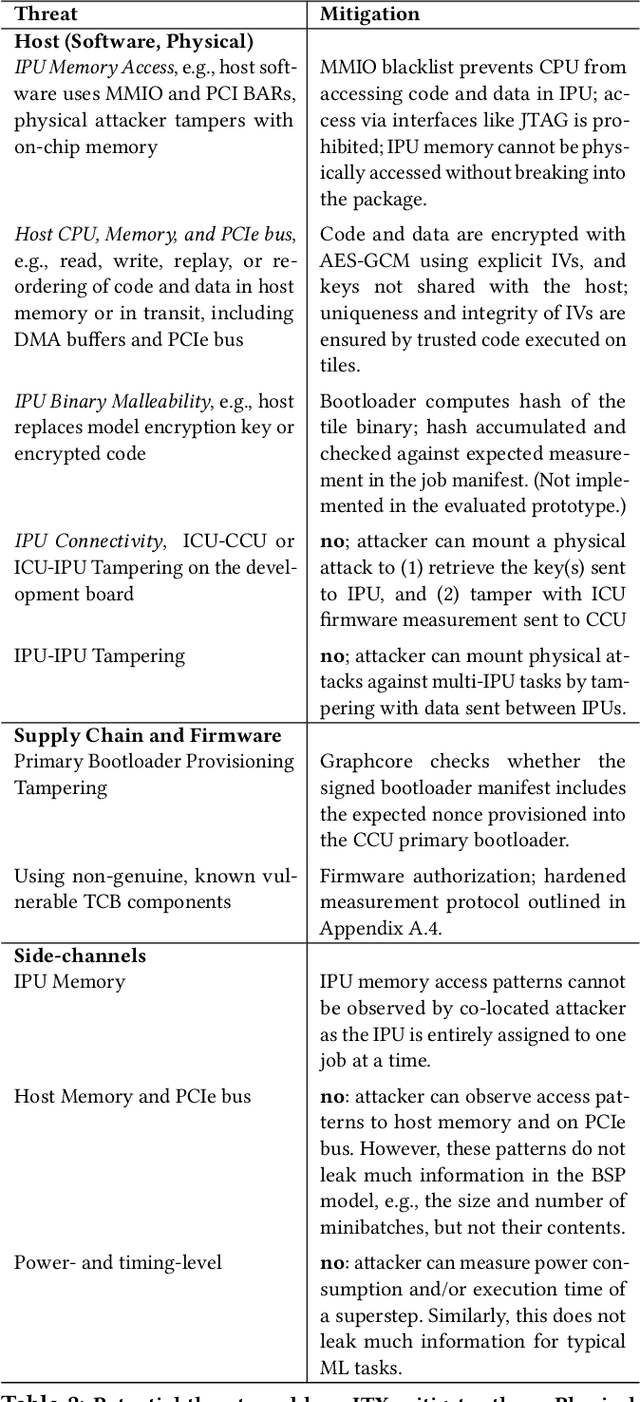

Abstract: We present IPU Trusted Extensions (ITX), a set of experimental hardware extensions that enable trusted execution environments in Graphcore's AI accelerators. ITX enables the execution of AI workloads with strong confidentiality and integrity guarantees at low performance overheads. ITX isolates workloads from untrusted hosts, and ensures their data and models remain encrypted at all times except within the IPU. ITX includes a hardware root-of-trust that provides attestation capabilities and orchestrates trusted execution, and on-chip programmable cryptographic engines for authenticated encryption of code and data at PCIe bandwidth. We also present software for ITX in the form of compiler and runtime extensions that support multi-party training without requiring a CPU-based TEE. Experimental support for ITX is included in Graphcore's GC200 IPU taped out at TSMC's 7nm technology node. Its evaluation on a development board using standard DNN training workloads suggests that ITX adds less than 5% performance overhead, and delivers up to 17x better performance compared to CPU-based confidential computing systems relying on AMD SEV-SNP.
