Kangcheng Lin

A Holistic Approach to Interpretability in Financial Lending: Models, Visualizations, and Summary-Explanations

Jun 04, 2021

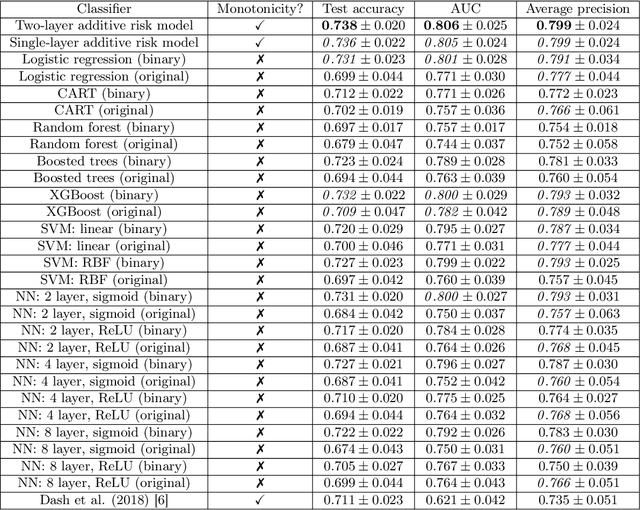

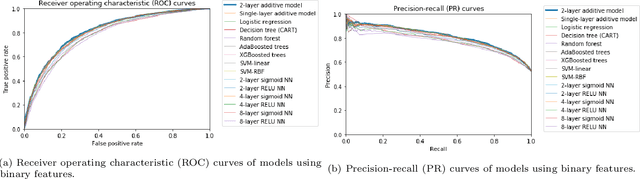

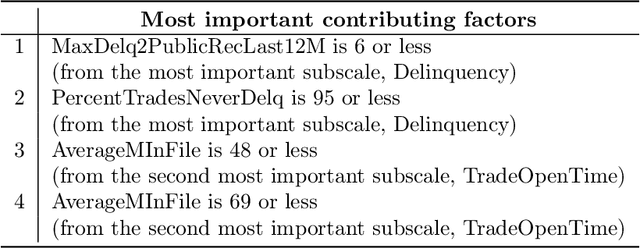

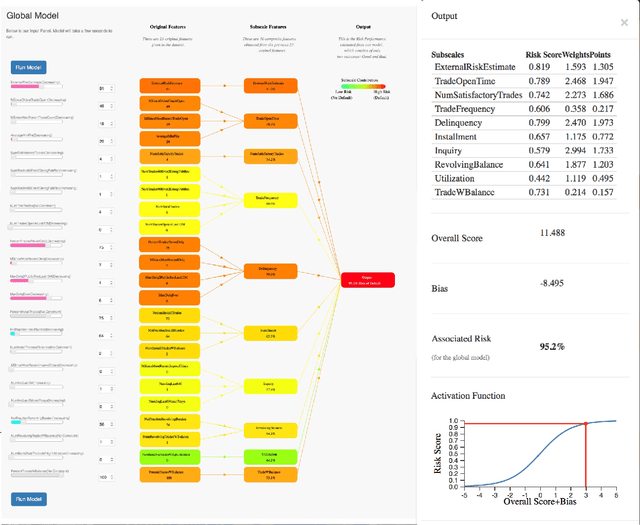

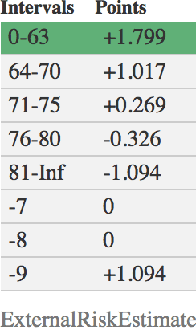

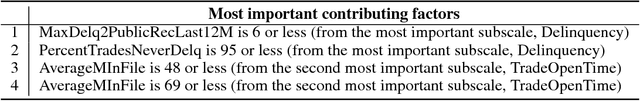

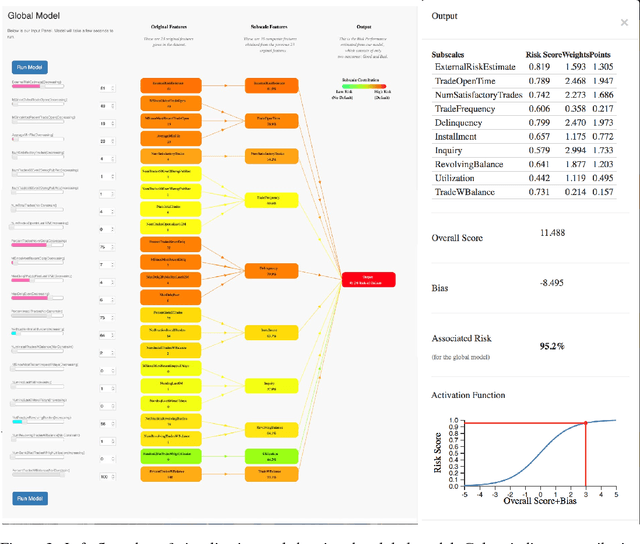

Abstract: Lending decisions are usually made with proprietary models that provide minimally acceptable explanations to users. In a future world without such secrecy, what decision support tools would one want to use for justified lending decisions? This question is timely, since the economy has dramatically shifted due to a pandemic, and a massive number of new loans will be necessary in the short term. We propose a framework for such decisions, including a globally interpretable machine learning model, an interactive visualization of it, and several types of summaries and explanations for any given decision. The machine learning model is a two-layer additive risk model, which resembles a two-layer neural network, but is decomposable into subscales. In this model, each node in the first (hidden) layer represents a meaningful subscale model, and all of the nonlinearities are transparent. Our online visualization tool allows exploration of this model, showing precisely how it came to its conclusion. We provide three types of explanations that are simpler than, but consistent with, the global model: case-based reasoning explanations that use neighboring past cases, a set of features that were the most important for the model's prediction, and summary-explanations that provide a customized sparse explanation for any particular lending decision made by the model. Our framework earned the FICO recognition award for the Explainable Machine Learning Challenge, which was the first public challenge in the domain of explainable machine learning.
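
The two-layer structure described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the subscale names, feature names, and every weight below are hypothetical, chosen only to show how each hidden node can be read as a standalone risk score that feeds a final additive combination.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function mapping a real-valued score to (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical subscales: each groups related features and has its own
# small linear model. All names and numbers are illustrative assumptions.
SUBSCALES = {
    "delinquency": {
        "bias": -1.0,
        "weights": {"num_late_payments": 0.8},
    },
    "utilization": {
        "bias": -2.0,
        "weights": {"revolving_utilization": 3.0},
    },
}

# Hypothetical second layer: combines subscale scores into one risk value.
FINAL = {"bias": -1.5, "weights": {"delinquency": 2.0, "utilization": 1.5}}


def subscale_scores(applicant: dict) -> dict:
    """First layer: one interpretable risk score per subscale."""
    scores = {}
    for name, spec in SUBSCALES.items():
        z = spec["bias"] + sum(
            w * applicant[feature] for feature, w in spec["weights"].items()
        )
        scores[name] = sigmoid(z)
    return scores


def predict_risk(applicant: dict) -> tuple:
    """Second layer: additive combination of the subscale scores."""
    scores = subscale_scores(applicant)
    z = FINAL["bias"] + sum(w * scores[name] for name, w in FINAL["weights"].items())
    return sigmoid(z), scores


low = {"num_late_payments": 0, "revolving_utilization": 0.1}
high = {"num_late_payments": 4, "revolving_utilization": 0.9}
risk_low, parts_low = predict_risk(low)
risk_high, parts_high = predict_risk(high)
```

Because the returned subscale scores are themselves probabilities, a reviewer can inspect which subscale drove a given prediction before looking at the final combined risk.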

An Interpretable Model with Globally Consistent Explanations for Credit Risk

Nov 30, 2018

Abstract: We propose a possible solution to a public challenge posed by the Fair Isaac Corporation (FICO), which is to provide an explainable model for credit risk assessment. Rather than present a black box model and explain it afterwards, we provide a globally interpretable model that is as accurate as other neural networks. Our "two-layer additive risk model" is decomposable into subscales, where each node in the second layer represents a meaningful subscale, and all of the nonlinearities are transparent. We provide three types of explanations that are simpler than, but consistent with, the global model. One of these explanation methods involves solving a minimum set cover problem to find high-support globally-consistent explanations. We present a new online visualization tool to allow users to explore the global model and its explanations.
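
To make the set cover idea concrete, here is a small sketch of the standard greedy approximation for minimum set cover. The abstract does not say how the authors solve the problem, so this is a generic illustration only; the rule names and the case identifiers they cover are hypothetical.

```python
def greedy_set_cover(universe: set, candidates: dict) -> list:
    """Pick candidate sets greedily until every element is covered.

    universe   -- elements that must be covered (e.g. past case ids)
    candidates -- mapping from a candidate name to the set it covers
    Returns the chosen candidate names in selection order.
    """
    uncovered = set(universe)
    chosen = []
    while uncovered:
        # Take the candidate covering the most still-uncovered elements.
        best = max(candidates, key=lambda name: len(candidates[name] & uncovered))
        gain = candidates[best] & uncovered
        if not gain:
            raise ValueError("candidates cannot cover the universe")
        chosen.append(best)
        uncovered -= gain
    return chosen


# Hypothetical example: each rule "explains" a set of past cases.
cases = {1, 2, 3, 4, 5, 6}
rules = {
    "rule_a": {1, 2, 3, 4},
    "rule_b": {4, 5},
    "rule_c": {5, 6},
    "rule_d": {1, 6},
}
selected = greedy_set_cover(cases, rules)
```

Greedy selection does not guarantee the smallest possible cover, but it is a common baseline when an exact solver is not needed.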
