Kamil Khan

CAFEEN: A Cooperative Approach for Energy Efficient NoCs with Multi-Agent Reinforcement Learning

Oct 09, 2024

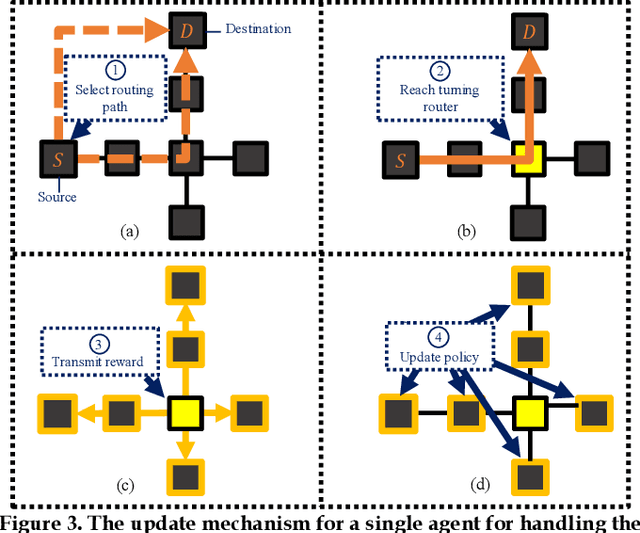

Abstract: In emerging high-performance Network-on-Chip (NoC) architectures, efficient power management is crucial to minimize energy consumption. We propose a novel framework called CAFEEN that employs both heuristic-based fine-grained and machine learning-based coarse-grained power-gating for energy-efficient NoCs. CAFEEN uses a fine-grained method to activate only essential NoC buffers during lower network loads. It switches to a coarse-grained method at peak loads to minimize compounding wake-up overhead using multi-agent reinforcement learning. Results show that CAFEEN adaptively balances power efficiency with performance, reducing total energy by 2.60x for single-application workloads and 4.37x for multi-application workloads, compared to state-of-the-art NoC power-gating frameworks.

RACE: A Reinforcement Learning Framework for Improved Adaptive Control of NoC Channel Buffers

May 26, 2022

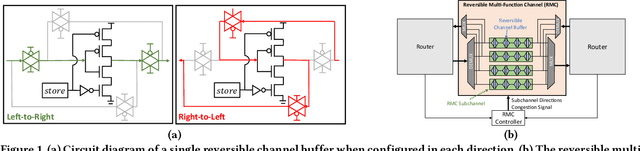

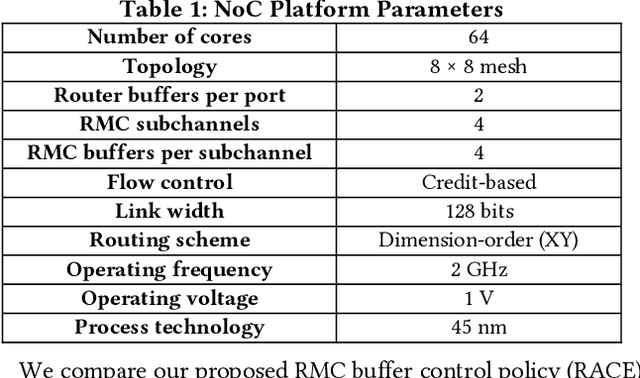

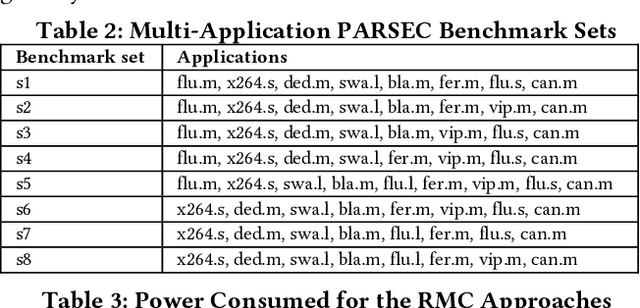

Abstract: Network-on-chip (NoC) architectures rely on buffers to store flits to cope with contention for router resources during packet switching. Recently, reversible multi-function channel (RMC) buffers have been proposed to simultaneously reduce power and enable adaptive NoC buffering between adjacent routers. While adaptive buffering can improve NoC performance by maximizing buffer utilization, controlling the RMC buffer allocations requires a congestion-aware, scalable, and proactive policy. In this work, we present RACE, a novel reinforcement learning (RL) framework that utilizes better awareness of network congestion and a new reward metric ("falsefulls") to help guide the RL agent towards better RMC buffer control decisions. We show that RACE reduces NoC latency by up to 48.9% and energy consumption by up to 47.1% against state-of-the-art NoC buffer control policies.