Kailash A. Hambarde

Rectifying Geometry-Induced Similarity Distortions for Real-World Aerial-Ground Person Re-Identification

Jan 29, 2026

Abstract: Aerial-ground person re-identification (AG-ReID) is fundamentally challenged by extreme viewpoint and distance discrepancies between aerial and ground cameras, which induce severe geometric distortions and invalidate the assumption of a shared similarity space across views. Existing methods primarily rely on geometry-aware feature learning or appearance-conditioned prompting, while implicitly assuming that the geometry-invariant dot-product similarity used in attention mechanisms remains reliable under large viewpoint and scale variations. We argue that this assumption does not hold. Extreme camera geometry systematically distorts the query-key similarity space and degrades attention-based matching, even when feature representations are partially aligned. To address this issue, we introduce Geometry-Induced Query-Key Transformation (GIQT), a lightweight low-rank module that explicitly rectifies the similarity space by conditioning query-key interactions on camera geometry. Rather than modifying feature representations or the attention formulation itself, GIQT adapts the similarity computation to compensate for dominant geometry-induced anisotropic distortions. Building on this local similarity rectification, we further incorporate a geometry-conditioned prompt generation mechanism that provides global, view-adaptive representation priors derived directly from camera geometry. Experiments on four aerial-ground person re-identification benchmarks demonstrate that the proposed framework consistently improves robustness under extreme and previously unseen geometric conditions, while introducing minimal computational overhead compared to state-of-the-art methods.
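The abstract does not give the exact GIQT formulation, so the following is only a minimal illustrative sketch of what a low-rank, geometry-conditioned correction to dot-product similarity could look like. Everything here is an assumption made for illustration, not the authors' method: the function name `geometry_conditioned_attention`, the factors `U` and `V`, the projection `W_g`, the `tanh` gating, and the choice of a three-element geometry vector are all hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def geometry_conditioned_attention(q, k, v, geom, U, V, W_g):
    """Single-head attention with a hypothetical low-rank similarity correction.

    q, k, v : (n, d) query, key, and value matrices
    geom    : (g,) camera-geometry vector (assumed: altitude, angle, distance)
    U, V    : (d, r) low-rank factors, with r much smaller than d
    W_g     : (g, r) projection from geometry to one gain per rank component

    Similarity used: q (I + U diag(s) V^T) k^T / sqrt(d), with s = tanh(geom @ W_g).
    When s is zero, this reduces to standard scaled dot-product attention.
    """
    d = q.shape[-1]
    s = np.tanh(geom @ W_g)                   # (r,) geometry-dependent gains
    base = q @ k.T                            # (n, n) plain dot-product similarity
    correction = ((q @ U) * s) @ (k @ V).T    # (n, n) rank-r correction term
    scores = (base + correction) / np.sqrt(d)
    return softmax(scores, axis=-1) @ v


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, d, r, g = 4, 8, 2, 3
    q, k, v = (rng.normal(size=(n, d)) for _ in range(3))
    U, V = rng.normal(size=(d, r)), rng.normal(size=(d, r))
    W_g = rng.normal(size=(g, r))
    geom = np.array([0.5, 1.0, 0.25])         # made-up normalized geometry values
    out = geometry_conditioned_attention(q, k, v, geom, U, V, W_g)
    print(out.shape)
```

In this sketch the features `q`, `k`, and `v` are left untouched and only the similarity scores change, which mirrors the abstract's stated intent of adapting the similarity computation rather than the representations. The correction adds roughly `2 * d * r + g * r` parameters per head, which is one way a module of this kind could stay lightweight.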

VReID-XFD: Video-based Person Re-identification at Extreme Far Distance Challenge Results

Jan 04, 2026

Abstract: Person re-identification (ReID) across aerial and ground views at extreme far distances introduces a distinct operating regime where severe resolution degradation, extreme viewpoint changes, unstable motion cues, and clothing variation jointly undermine the appearance-based assumptions of existing ReID systems. To study this regime, we introduce VReID-XFD, a video-based benchmark and community challenge for extreme far-distance (XFD) aerial-to-ground person re-identification. VReID-XFD is derived from the DetReIDX dataset and comprises 371 identities, 11,288 tracklets, and 11.75 million frames, captured across altitudes from 5.8 m to 120 m, viewing angles from oblique (30 degrees) to nadir (90 degrees), and horizontal distances up to 120 m. The benchmark supports aerial-to-aerial, aerial-to-ground, and ground-to-aerial evaluation under strict identity-disjoint splits, with rich physical metadata. The VReID-XFD-25 Challenge attracted 10 teams with hundreds of submissions. Systematic analysis reveals monotonic performance degradation with altitude and distance, a universal disadvantage of nadir views, and a trade-off between peak performance and robustness. Even the best-performing SAS-PReID method achieves only 43.93 percent mAP in the aerial-to-ground setting. The dataset, annotations, and official evaluation protocols are publicly available at https://www.it.ubi.pt/DetReIDX/ .

DetReIDX: A Stress-Test Dataset for Real-World UAV-Based Person Recognition

May 07, 2025

Abstract: Person reidentification (ReID) technology has been considered to perform relatively well under controlled, ground-level conditions, but it breaks down when deployed in challenging real-world settings. Evidently, this is due to extreme data variability factors such as resolution, viewpoint changes, scale variations, occlusions, and appearance shifts from clothing or session drifts. Moreover, the publicly available datasets do not realistically incorporate such kinds and magnitudes of variability, which limits the progress of this technology. This paper introduces DetReIDX, a large-scale aerial-ground person dataset that was explicitly designed as a stress test for ReID under real-world conditions. DetReIDX is a multi-session set that includes over 13 million bounding boxes from 509 identities, collected in seven university campuses from three continents, with drone altitudes between 5.8 and 120 meters. More importantly, as a key novelty, DetReIDX subjects were recorded in (at least) two sessions on different days, with changes in clothing, daylight and location, making it suitable to actually evaluate long-term person ReID. In addition, the data were annotated with 16 soft biometric attributes and multitask labels for detection, tracking, ReID, and action recognition. In order to provide empirical evidence of DetReIDX's usefulness, we considered the specific tasks of human detection and ReID, where SOTA methods suffer catastrophic performance degradation (up to 80% in detection accuracy and over 70% in Rank-1 ReID) when exposed to DetReIDX's conditions. The dataset, annotations, and official evaluation protocols are publicly available at https://www.it.ubi.pt/DetReIDX/

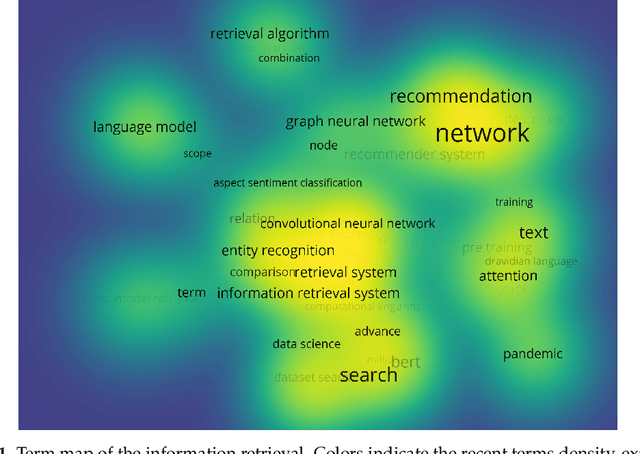

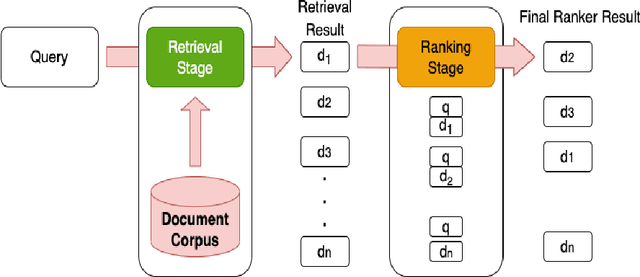

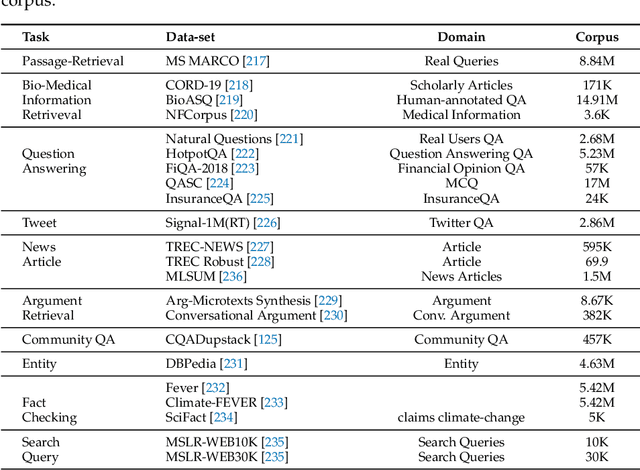

Information Retrieval: Recent Advances and Beyond

Jan 20, 2023

Abstract: In this paper, we provide a detailed overview of the models used for information retrieval in the first and second stages of the typical processing chain. We discuss the current state-of-the-art models, including term-based, semantic retrieval, and neural methods. Additionally, we delve into the key topics related to the learning process of these models. In this way, the survey offers a comprehensive understanding of the field and is of interest to researchers and practitioners entering or working in the information retrieval domain.
