Justin Jose

RLInspect: An Interactive Visual Approach to Assess Reinforcement Learning Algorithm

Nov 13, 2024

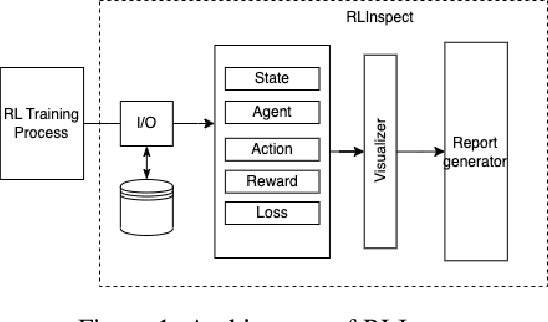

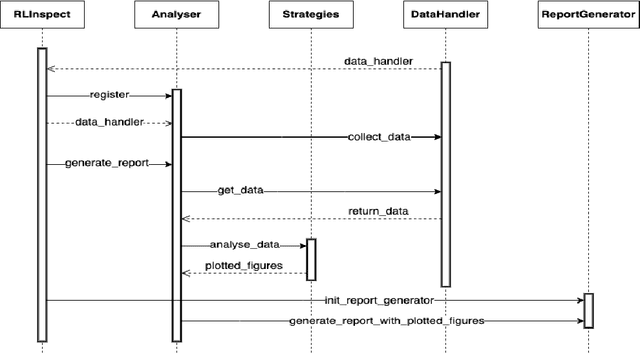

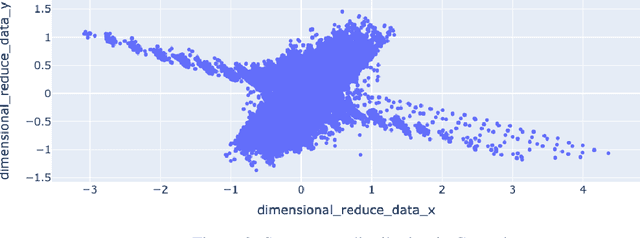

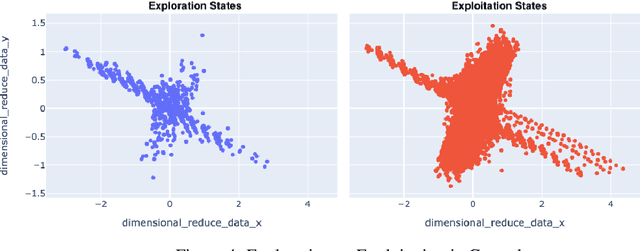

Abstract: Reinforcement Learning (RL) is a rapidly growing area of machine learning with applications in a broad range of domains, from finance and healthcare to robotics and gaming. Unlike other machine learning techniques, RL agents learn from their own experience through trial and error and improve their performance over time. However, assessing RL models can be challenging, which makes it difficult to interpret their behaviour. While reward is a widely used metric for evaluating RL models, it may not always provide an accurate measure of training performance. In some cases, the reward may appear to increase while the model's performance is actually decreasing, leading to misleading conclusions about the effectiveness of the training. To overcome this limitation, we have developed RLInspect, an interactive visual analytic tool that takes into account different components of the RL model (state, action, agent architecture and reward) and provides a more comprehensive view of RL training. By using RLInspect, users can gain insights into the model's behaviour, identify issues during training, and potentially correct them effectively, leading to a more robust and reliable RL system.

MotorEase: Automated Detection of Motor Impairment Accessibility Issues in Mobile App UIs

Mar 20, 2024

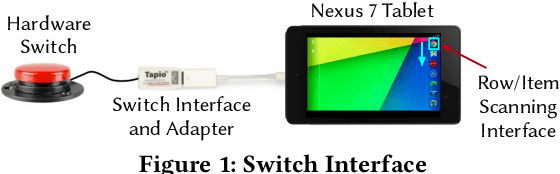

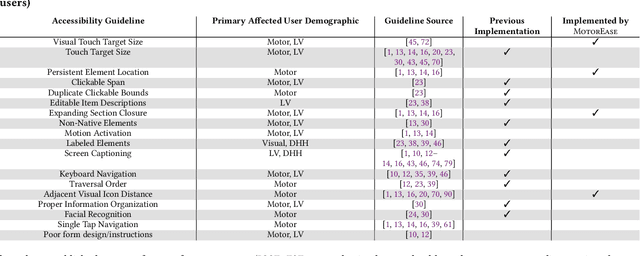

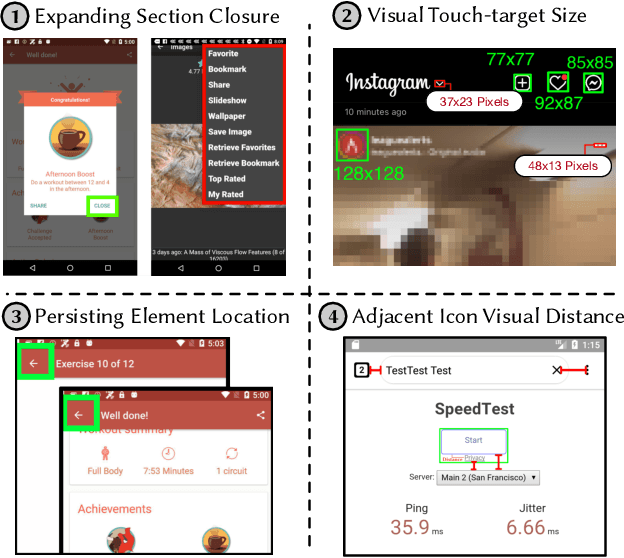

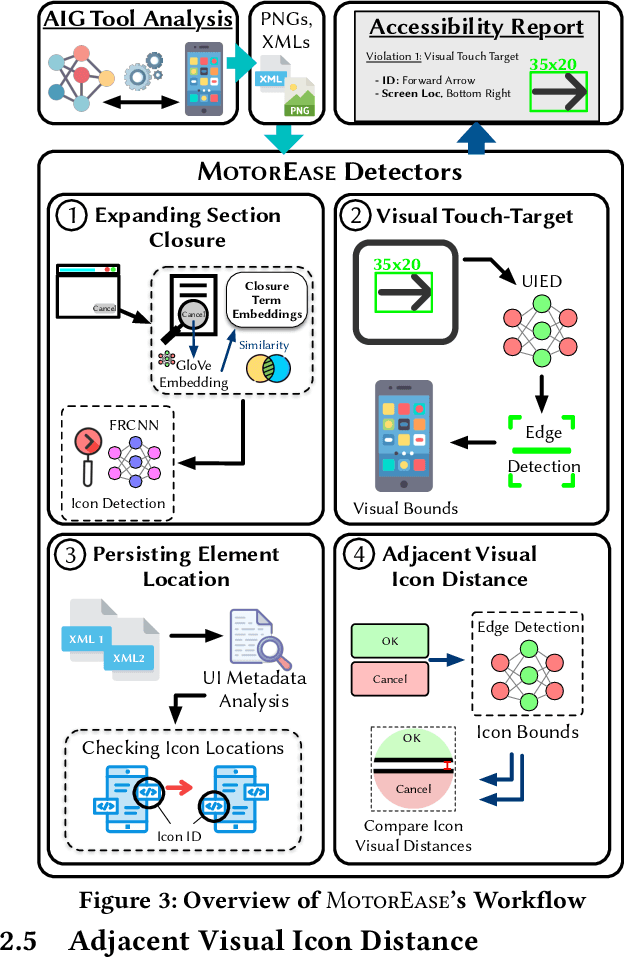

Abstract: Recent research has begun to examine the potential of automatically finding and fixing accessibility issues that manifest in software. While this work makes important progress, it has generally been skewed toward identifying issues that affect users with certain disabilities, such as visual or hearing impairments. Other groups of users with different types of disabilities also need software tooling support to improve their experience. As such, this paper aims to automatically identify accessibility issues that affect users with motor impairments. To move toward this goal, this paper introduces a novel approach, called MotorEase, capable of identifying accessibility issues in mobile app UIs that impact motor-impaired users. Motor-impaired users often have limited ability to interact with touch-based devices and may instead use a switch or other assistive mechanism; hence UIs must be designed to support both limited touch gestures and the use of assistive devices. MotorEase adapts computer vision and text processing techniques to enable a semantic understanding of app UI screens, enabling the detection of violations of four popular, previously unexplored UI design guidelines that support motor-impaired users: (i) visual touch target size, (ii) expanding sections, (iii) persisting elements, and (iv) adjacent icon visual distance. We evaluate MotorEase on a newly derived benchmark, called MotorCheck, that contains 555 manually annotated examples of violations of the above accessibility guidelines, across 1599 screens collected from 70 applications via a mobile app testing tool. Our experiments illustrate that MotorEase is able to identify violations with an average accuracy of ~90% and a false positive rate of less than 9%, outperforming baseline techniques.

A Dual Band Printed F Antenna using a Trap with small band separation

Jul 18, 2023

Abstract: The trap antenna is a well-known method with many applications. With this method, one or more traps are placed on the antenna to block currents at certain frequencies, electrically dividing the antenna into multiple segments so that one antenna can work on multiple frequencies. In this paper, the trap antenna method is used to design a dual-band sub-GHz printed F-antenna. The antenna is printed on an FR4 board to achieve a low-cost solution. The two bands are 865-870 MHz and 902-928 MHz. The challenge of this design is that the frequency separation of the two bands is very small, and consequently the extra section for the low-frequency band is also very small. As a result, the variation of the trap LC components due to tolerance has a large influence on the two resonant frequencies, so it is difficult to achieve good in-band return loss within the LC tolerance. This is the main difficulty of this design. The problem is solved by placing the low-band section away from the end of the antenna.
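
The tolerance problem described in the abstract can be illustrated with the textbook resonance formula for an ideal LC trap. This is only a sketch, not the paper's own analysis: the symbols $L$, $C$ and $f_0$ are introduced here for illustration, and the formula describes the trap's own resonance rather than the antenna's two resonant frequencies, which also depend on the antenna geometry.

```latex
% Resonant frequency of an ideal LC trap (standard result; L, C are the trap component values)
\[
  f_0 = \frac{1}{2\pi\sqrt{LC}}
\]
% First-order sensitivity of f_0 to small component deviations \Delta L, \Delta C
\[
  \frac{\Delta f_0}{f_0} \approx -\frac{1}{2}\left(\frac{\Delta L}{L} + \frac{\Delta C}{C}\right)
\]
```

As a hypothetical example, if both components carried a ±5% tolerance (an assumed figure, not one stated in the abstract), the worst-case shift of the trap resonance would be about ±5%, or roughly ±45 MHz near 900 MHz. That is larger than the 32 MHz gap between the 870 MHz and 902 MHz band edges, which suggests why a small band separation makes the design sensitive to component tolerance.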

* 5 pages
